Musical Instrument Digital Interface

From Wikipedia, the free encyclopedia


MIDI (Musical Instrument Digital Interface, IPA: /ˈmɪdi/) is an industry-standard protocol defined in 1982[1] that enables electronic musical instruments, computers, and other electronic equipment such as keyboard controllers to communicate, control, and synchronize with each other. MIDI allows computers, synthesizers, MIDI controllers, sound cards, samplers and drum machines to control one another and to exchange system data (acting as a raw data encapsulation method for SysEx commands). MIDI does not transmit an audio signal or media; it transmits "event messages" such as the pitch and intensity of musical notes to play, control signals for parameters such as volume, vibrato and panning, cues, and clock signals to set the tempo. As an electronic protocol, it is notable for its widespread adoption throughout the industry.

All MIDI-compatible controllers, musical instruments, and software follow the same MIDI 1.0 specification, and thus interpret any given MIDI message the same way, so they can communicate with and understand each other. MIDI composition and arrangement takes advantage of MIDI 1.0 and General MIDI (GM) technology to allow musical data files to be shared among various electronic instruments by using a standard, portable set of commands and parameters. Because the music is simply data rather than recorded audio waveforms, the data size of the files is quite small by comparison.


Interfaces

The physical MIDI interface uses five-pin 180° DIN connectors. Each MIDI-IN port is opto-isolated to prevent ground loops among connected MIDI devices. A MIDI cable carries data in one direction only, so each device has physically and logically separate input and output lines, and devices are typically connected in a chain. When a device in the chain re-transmits messages that are not intended for it, each hop introduces a delay, which can become audible on longer chains.

MIDI-THRU ports started to be added to MIDI-compatible equipment soon after the introduction of MIDI, in order to improve performance. The MIDI-THRU port avoids the aforementioned retransmission delay by linking the MIDI-THRU port to the MIDI-IN socket almost directly. The difference between the MIDI-OUT and MIDI-THRU ports is that data coming from the MIDI-OUT port has been generated on the device containing that port. Data that comes out of a device's MIDI-THRU port, however, is an exact duplicate of the data received at the MIDI-IN port.

Such chaining together of instruments via MIDI-THRU ports is unnecessary with the use of MIDI "patch bay", "mult" or "Thru" modules, which consist of a MIDI-IN connector and multiple MIDI-OUT connectors to which multiple instruments are connected. Some equipment can merge MIDI messages into one stream, but this is a specialized function and is not universal. MIDI Thru boxes clean up any skewing of MIDI data bits that might occur at the input stage. A MIDI Merger box merges all MIDI messages appearing at either of its two inputs into its single output, which allows a musician to plug several MIDI controllers (e.g., two musical keyboards and a pedal keyboard) into a single synth voice device such as an E-mu Proteus.

All MIDI-compatible instruments have a built-in MIDI interface. Some computers' sound cards have a built-in MIDI interface, whereas others require an external MIDI interface connected to the computer via the D-subminiature DA-15 game port, a USB connector, FireWire or Ethernet. MIDI connectors are defined by the MIDI interface standard. In the 2000s, as computer equipment increasingly used USB connectors, companies began making USB-to-MIDI interfaces which can transfer MIDI channels to USB-equipped Windows or Mac computers. In addition, because computers are increasingly used for music-making and composition, some MIDI keyboard controllers are equipped with USB jacks so that they can be plugged directly into computers running "software synths" or other music software.

Controllers

In popular parlance, piano-style musical keyboards are called "keyboards", regardless of their functions or type. Amongst MIDI enthusiasts, however, keyboards and other devices used to trigger musical sounds are called "controllers", because with most MIDI set-ups, the keyboard or other device does not make any sounds by itself. MIDI controllers need to be connected to a voice bank or sound module in order to produce musical tones or sounds; the keyboard or other device is "controlling" the voice bank or sound module by acting as a trigger. The most common MIDI controller is the piano-style keyboard, either with weighted or semi-weighted keys, or with unweighted synth-style keys. Keyboard-style MIDI controllers are sold with as few as 25 keys (2 octaves), with larger models such as 49 keys, 61 keys, or even the full 88 keys being available.

MIDI controllers are also available in a range of other forms, such as electronic drum triggers; pedal keyboards that are played with the feet (e.g., with an organ); EWI wind controllers for performing saxophone-style music; and MIDI guitar synthesizer controllers. The EWI (Electronic Wind Instrument) is designed for performers who want to play saxophone, clarinet, oboe, bassoon, and other wind instrument sounds with a synthesizer module. When wind instrument sounds are played from a MIDI keyboard, it is hard to reproduce the expressive control that a wind player achieves through wind pressure and embouchure. The EWI has an air-pressure level sensor and a bite sensor in the mouthpiece, 13 touch sensors arrayed along the side of the controller in a similar location to where sax keys are placed, and touch sensors for octaves and bends.

Pad controllers are used by musicians and DJs who make music with sampled sounds or short samples of music. Pad controllers often have banks of assignable pads and assignable faders and knobs for transmitting MIDI data or changes; the better-quality models are velocity-sensitive. More rarely, some performers use more specialized MIDI controllers, such as triggers that are affixed to their clothing or stage items (e.g., magicians Penn and Teller's stage show). A MIDI foot controller is a pedalboard-style device with rows of footswitches that select banks of presets, send MIDI program change commands and send MIDI note numbers (some also do MIDI merges). Another specialized type of controller is the drawbar controller; it is designed for Hammond organ players who have MIDI-equipped organ voice modules. The drawbar controller provides the keyboard player with many of the controls found on a vintage 1940s or 1950s Hammond organ, including harmonic drawbars, a rotating speaker speed control switch, vibrato and chorus knobs, and percussion and overdrive controls. As with all controllers, the drawbar controller does not produce any sounds by itself; it only controls a voice module or software sound device.

While most controllers do not produce sounds, there are some exceptions. Some controller keyboards called "performance controllers" have MIDI-assignable keys, sliders, and knobs, which allow the controller to be used with a range of software synthesizers or voice modules; yet at the same time, the controller also has an internal voice module which supplies keyboard instrument sounds (piano, electric piano, clavichord), sampled or synthesized voices (strings, woodwinds), and Digital Signal Processing (distortion, compression, flanging, etc). These controller keyboards are designed to allow the performer to choose between the internal voices or external modules.

Messages

All MIDI compatible controllers, musical instruments, and MIDI-compatible software follow the same MIDI 1.0 specification, and thus interpret any given MIDI message the same way, and so can communicate with and understand each other. For example, if a note is played on a MIDI controller, it will sound at the right pitch on any MIDI instrument whose MIDI In connector is connected to the controller's MIDI Out connector.

When a musical performance is played on a MIDI instrument (or controller) it transmits MIDI channel messages from its MIDI Out connector. A typical MIDI channel message sequence corresponding to a key being struck and released on a keyboard is:

- The user presses the middle C key with a specific velocity (which is usually translated into the volume of the note but can also be used by the synthesiser to set characteristics of the timbre as well). ---> The instrument sends one Note-On message.

- The user changes the pressure applied on the key while holding it down - a technique called Aftertouch (can be repeated, optional). ---> The instrument sends one or more Aftertouch messages.

- The user releases the middle C key, again with the possibility of velocity of release controlling some parameters. ---> The instrument sends one Note-Off message.

Note-On, Aftertouch, and Note-Off are all channel messages. For the Note-On and Note-Off messages, the MIDI specification defines a number (from 0–127) for every possible note pitch (C, C♯, D etc.), and this number is included in the message.
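As a rough illustration of the key-press sequence above, the following Python sketch builds the raw bytes of the three kinds of channel message. The status values (0x90 for Note-On, 0xA0 for per-key Aftertouch, 0x80 for Note-Off) and the note number 60 for middle C are taken from the MIDI 1.0 specification rather than from the text above; the function names are purely illustrative.

```python
NOTE_OFF = 0x80         # status nibble for Note-Off
NOTE_ON = 0x90          # status nibble for Note-On
POLY_AFTERTOUCH = 0xA0  # status nibble for polyphonic (per-key) Aftertouch

MIDDLE_C = 60           # MIDI note number of middle C


def channel_message(status, channel, data1, data2):
    """Build a three-byte channel message (channel 0-15, data bytes 0-127)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0-15")
    if not (0 <= data1 <= 127 and 0 <= data2 <= 127):
        raise ValueError("data bytes must be in 0-127")
    # The low nibble of the status byte carries the channel number.
    return bytes([status | channel, data1, data2])


def key_press_sequence(note, velocity, pressures, release_velocity, channel=0):
    """Messages for one key: Note-On, optional Aftertouch updates, Note-Off."""
    messages = [channel_message(NOTE_ON, channel, note, velocity)]
    for pressure in pressures:
        messages.append(channel_message(POLY_AFTERTOUCH, channel, note, pressure))
    messages.append(channel_message(NOTE_OFF, channel, note, release_velocity))
    return messages


if __name__ == "__main__":
    for msg in key_press_sequence(MIDDLE_C, 100, [40, 70], 64):
        print(msg.hex(" "))
```

Each message carries the note number, so a receiver can match the Aftertouch and Note-Off messages to the key that was originally struck.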

Other performance parameters can be transmitted with channel messages, too. For example, if the user turns the pitch wheel on the instrument, that gesture is transmitted over MIDI using a series of Pitch Bend messages (also a channel message). The musical instrument generates the messages autonomously; all the musician has to do is play the notes (or make some other gesture that produces MIDI messages). This consistent, automated abstraction of the musical gesture could be considered the core of the MIDI standard.
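A pitch-wheel gesture can be sketched in the same way. The example below assumes the MIDI 1.0 encoding of Pitch Bend (status 0xE0, a 14-bit value sent least-significant seven bits first, with 8192 meaning "wheel centred"); the helper names are hypothetical.

```python
PITCH_BEND = 0xE0     # status nibble for Pitch Bend
BEND_CENTRE = 8192    # 14-bit value that means "no bend"


def pitch_bend_message(value, channel=0):
    """Encode a 14-bit bend value (0-16383) as a three-byte channel message."""
    if not 0 <= value <= 16383:
        raise ValueError("bend value must be in 0-16383")
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0-15")
    lsb = value & 0x7F          # low seven bits are sent first
    msb = (value >> 7) & 0x7F   # high seven bits follow
    return bytes([PITCH_BEND | channel, lsb, msb])


def wheel_sweep(start, stop, steps, channel=0):
    """A gesture becomes a series of messages, one per sampled wheel position."""
    span = stop - start
    return [
        pitch_bend_message(start + span * i // (steps - 1), channel)
        for i in range(steps)
    ]


if __name__ == "__main__":
    for msg in wheel_sweep(BEND_CENTRE, 16383, 5):
        print(msg.hex(" "))
```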

Composition

MIDI composition and arrangement takes advantage of MIDI 1.0 and General MIDI (GM) technology to allow musical data files to be shared among various electronic instruments by using a standard, portable set of commands and parameters. Because the music is simply data rather than recorded audio waveforms, the data size of the files is quite small by comparison. Several computer programs allow manipulation of the musical data such that composing for an entire orchestra of synthesized instrument sounds is possible. The data can be saved as a Standard MIDI File (SMF), digitally distributed, and then reproduced by any computer or electronic instrument that also adheres to the same MIDI, GM, and SMF standards. There are many websites offering downloads of popular songs as well as classical music in SMF and GM form, and there are also websites where MIDI composers can share their works in that same format.

The Standard MIDI File is widely regarded as having been a more attractive music distribution format before broadband internet became generally available, because of its small file size. The advent of high-quality audio compression such as the MP3 format has also reduced the relative size advantage of MIDI music to some degree, although an MP3 file is still much larger than an SMF of the same piece.

File formats

Standard MIDI File (SMF) Format

MIDI messages (along with timing information) can be collected and stored in a computer file system, in what is commonly called a MIDI file, or more formally, a Standard MIDI File (SMF). The SMF specification was developed by, and is maintained by, the MIDI Manufacturers Association (MMA). MIDI files are typically created using computer-based sequencing software (or sometimes a hardware-based MIDI instrument or workstation) that organizes MIDI messages into one or more parallel "tracks" for independent recording and editing. In most sequencers, each track is assigned to a specific MIDI channel and/or a specific instrument patch; if the attached music synthesizer has a known instrument palette (for example because it conforms to the General MIDI standard), then the instrument for each track may be selected by name. Although most current MIDI sequencer software uses proprietary "session file" formats rather than SMF, almost all sequencers provide export or "Save As..." support for the SMF format.

An SMF consists of one header chunk and one or more track chunks. There are three different SMF formats; the format of a given SMF is specified in its file header. A Format 0 file contains a single track and represents a single song performance. Format 1 may contain any number of tracks, enabling preservation of the sequencer track structure, and also represents a single song performance. Format 2 may have any number of tracks, each representing a separate song performance. Sequencers do not commonly support Format 2. Large collections of SMFs can be found on the web, most commonly with the extension .mid. These files are most frequently authored with the (rather dubious) assumption that they will only ever be played on General MIDI players.
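The chunk structure can be inspected with a few lines of Python. This is a minimal sketch that assumes the layout given in the SMF specification (a chunk is a four-character ID followed by a big-endian 32-bit length; the header chunk "MThd" holds three 16-bit fields for format, track count and time division; track chunks are tagged "MTrk"). The function names are illustrative, and no track events are decoded.

```python
import struct


def parse_smf_header(data):
    """Read the header chunk of a Standard MIDI File held in a bytes object."""
    chunk_id, length = struct.unpack(">4sI", data[:8])
    if chunk_id != b"MThd":
        raise ValueError("not a Standard MIDI File: missing MThd chunk")
    fmt, ntrks, division = struct.unpack(">HHH", data[8:14])
    return {
        "format": fmt,             # 0, 1 or 2
        "tracks": ntrks,           # number of track chunks declared
        "division": division,      # timing resolution field
        "header_end": 8 + length,  # offset of the first chunk after the header
    }


def count_track_chunks(data):
    """Walk the chunks after the header and count those tagged MTrk."""
    pos = parse_smf_header(data)["header_end"]
    count = 0
    while pos + 8 <= len(data):
        chunk_id, length = struct.unpack(">4sI", data[pos:pos + 8])
        if chunk_id == b"MTrk":
            count += 1
        pos += 8 + length  # skip the chunk body, whatever its type
    return count
```

For a file on disk, the bytes could be obtained with `open(path, "rb").read()` and passed to `parse_smf_header`.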

MIDI Karaoke File (.KAR) Format

MIDI-Karaoke files (which use the ".kar" file extension) are an "unofficial" extension of MIDI files, used to add synchronized lyrics to standard MIDI files. SMF players play the music as they would a .mid file but do not display the lyrics unless they have specific support for .kar messages. Players that do support them often display the lyrics synchronized with the music in "follow-the-bouncing-ball" fashion, essentially turning any PC into a karaoke machine. None of the MIDI-Karaoke file formats are maintained by any standardization body.

XMF File Formats

The MMA has also defined (and AMEI has approved) a new family of file formats, XMF (eXtensible Music File), some of which package SMF chunks with instrument data in DLS format (Downloadable Sounds, also an MMA/AMEI specification), to much the same effect as the MOD file format. The XMF container is a binary format (not XML-based, although the file extensions are similar). See the main article Extensible Music Format (XMF).

RIFF-RMID File Format

On Microsoft Windows, the system itself uses proprietary RIFF-based MIDI files with the ".rmi" extension. Note, Standard MIDI Files are not RIFF-compliant. A RIFF-RMID file, however, is simply a Standard MIDI File wrapped in a RIFF chunk. By extracting the data part of the RIFF-RMID chunk, the result will be a regular Standard MIDI File. RIFF-RMID is not an official MMA/AMEI MIDI standard.

Extended RMID File Format

In recommended practice RP-29, the MMA defined a method for bundling one Standard MIDI File (SMF) image with one Downloadable Sounds (DLS) image using the RIFF container technology. However, this method was deprecated when the MMA introduced the Extensible Music Format (XMF), which, because of its many additional features, is generally preferred for bundling MIDI-related resources.

Extended MIDI File (.XMI) Format

The XMI format is a proprietary extension of the SMF format introduced by the Miles Sound System, a middleware driver library targeted at PC games. XMI is not an official MMA/AMEI MIDI standard.

Usage and applications

Extensions of the MIDI standard

Many extensions of the original official MIDI 1.0 spec have been standardized by MMA/AMEI. Only a few of them are described here; for more comprehensive information, see the MMA web site.

General MIDI

The General MIDI Level 1 ("GM") standard is an important feature for MIDI content interoperability across multiple players. It addresses the indeterminacy of the basic MIDI 1.0 protocol standard regarding the meaning of Program Change and Control Change messages and other synthesizer features, in the sense that without GM different synthesizers can, and actually do, sound completely different in response to the same MIDI messages. Without GM, synthesizers can also require different Control Numbers (in Control Change messages) to produce similar responses.

The GM standard mandates:

- An assignment of specific instruments to each Program Number in Program Change messages (for example, Program Number 1 is "Acoustic Grand Piano")

- The mapping of several controller numbers to important effects

- Use of channel 10 for percussion only (a specific unpitched percussion sound in place of each note)

- And various minimum specifications

General MIDI 1 was introduced in 1991.
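The program and percussion conventions can be illustrated with a short Python sketch. It assumes the MIDI 1.0 wire encoding (status 0xC0 for Program Change, 0x90 for Note-On, with channels and programs sent zero-based although they are usually numbered from 1) and uses note 36, which is a bass drum in the GM percussion map; the helper names are illustrative only.

```python
PROGRAM_CHANGE = 0xC0    # status nibble for Program Change
NOTE_ON = 0x90           # status nibble for Note-On
PERCUSSION_CHANNEL = 10  # GM reserves channel 10 (counting from 1) for percussion


def program_change(channel, program):
    """Select a program; channel is 1-16 and program is 1-128, as usually listed."""
    if not (1 <= channel <= 16 and 1 <= program <= 128):
        raise ValueError("channel must be 1-16 and program 1-128")
    # On the wire, both values are zero-based.
    return bytes([PROGRAM_CHANGE | (channel - 1), program - 1])


def note_on(channel, note, velocity):
    """Note-On for a 1-based channel number."""
    if not (1 <= channel <= 16 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("value out of range")
    return bytes([NOTE_ON | (channel - 1), note, velocity])


if __name__ == "__main__":
    # Select the first program in the GM instrument list on channel 1.
    print(program_change(1, 1).hex(" "))
    # On channel 10 the note number selects a drum sound rather than a pitch.
    print(note_on(PERCUSSION_CHANNEL, 36, 100).hex(" "))
```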

GM Common Misconceptions

Although the GM and GM2 specifications depend on the basic MIDI 1.0 protocol specification, they are separate standards from MIDI 1.0. As a result, MIDI products may legitimately implement MIDI 1.0 but not GM or GM2. Although GM is an important feature for MIDI content interoperability across multiple players, many important MIDI applications do not require such interoperability. For example, MIDI and the SMF format are used in professional music recording production, where the MIDI file content will never be distributed and where custom or specialized synthesizers are used much more commonly than GM or GM2 ones. As a direct consequence, not all SMF content is authored for GM or GM2 synthesizers. Because playing any SMF or MIDI message stream on a synthesizer other than the one originally intended risks producing unintended and incorrect sounds, it is not safe to assume that any given MIDI message stream or MIDI file is intended for GM or GM2 synthesizers. In particular, it is frequently and incorrectly assumed that all or nearly all SMF content relies on the player using a GM or GM2 synthesizer. The MMA/AMEI specifications contain no such dependency, and it is quite legitimate for SMF content to be written for non-GM synthesizers, so this assumption is not reliable.

Therefore, MIDI users should note well the following caveats:

- Not all MIDI synthesizers support GM and/or GM2, and it is inadvisable to assume that they do.

- Not all SMFs are intended for GM and/or GM2 synthesizers, and it is inadvisable to assume that they are.

- Always test MIDI content for compatibility with the intended playback synthesizer before any public performance.

Unfortunately, there is currently no technical standard for indicating in advance what kind of synthesizer(s) a given SMF or MIDI message stream is intended to drive (with the exception of RTP MIDI and the audio/sp-midi MIME type definition).

GS and XG

To improve upon the General MIDI Standard and take advantage of the advancements in newer synthesizers, both Roland (GS) and Yamaha (XG) introduced proprietary specifications and numerous products with stricter requirements, new features, and backward compatibility with the GM specification. GS and XG are not compatible with each other, are not official MMA/AMEI MIDI standards, and adoption of each has been generally limited to the respective manufacturer.

General MIDI Level 2

Later, companies in Japan's Association of Musical Electronics Industry (sic) (AMEI) developed General MIDI Level 2 (GM2), incorporating and harmonizing aspects of the Yamaha XG and Roland GS formats, further extending the instrument palette, specifying more message responses in detail, and defining new messages for custom tuning scales and other new functionality. The GM2 specs are maintained and published by the MMA and AMEI. General MIDI 2 was introduced in 1999 and is implemented in some newer synthesizers.

SP-MIDI

Later still, GM2 became the basis of the instrument selection mechanism in Scalable Polyphony MIDI (SP-MIDI), a MIDI variant for mobile applications where different players may have different numbers of musical voices. SP-MIDI is a component of the 3GPP mobile phone terminal multimedia architecture, starting from release 5.

GM, GM2, and SP-MIDI are also the basis for selecting player-provided instruments in several of the MMA/AMEI XMF file formats (XMF Type 0, Type 1, and Mobile XMF), which allow extending the instrument palette with custom instruments in the Downloadable Sound (DLS) formats, addressing another major GM shortcoming.

Alternative Tunings

Most MIDI synthesizers default to the conventional Western 12-pitch-per-octave, equal-temperament tuning system. Unfortunately, this tuning system makes many types of music inaccessible, because they depend on different intonation systems. To address this issue in a standardized manner, in 1992 the MMA ratified the MIDI Tuning Standard, or MTS. Instruments that support the MTS standard can be tuned to any desired tuning system by sending the MTS System Exclusive message (a Non-Real Time SysEx).

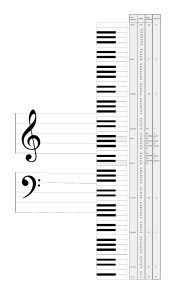

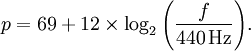

The MTS SysEx message uses a three-byte number format to specify a pitch in logarithmic form. This pitch number can be thought of as a three-digit number in base 128. To find the value of the pitch number p that encodes a given frequency f, use the following formula:

For a note in A440 equal temperament, this formula delivers the standard MIDI note number as used in the Note On and Note Off messages. Any other frequencies fill the space evenly. While support for MTS is at present not particularly widespread in commercial hardware instruments, it is nonetheless supported by some instruments and software, for example the free software programs TiMidity and Scala, as well as other microtuners.
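The encoding can be sketched in Python. The example assumes the usual relation p = 69 + 12·log2(f / 440 Hz) and assumes that the first base-128 digit holds the whole semitone (the ordinary MIDI note number) while the remaining two digits hold the fraction of a semitone; the function names are hypothetical.

```python
import math


def frequency_to_pitch_number(freq_hz):
    """Pitch number p for a frequency, with A440 mapped to note 69."""
    return 69 + 12 * math.log2(freq_hz / 440.0)


def pitch_number_to_mts_bytes(p):
    """Split a pitch number into three base-128 digits (each 0-127)."""
    # Scale so that the fractional semitone occupies two base-128 digits.
    n = round(p * 128 * 128)
    if not 0 <= n < 128 ** 3:
        raise ValueError("pitch is outside the representable range")
    return (n >> 14, (n >> 7) & 0x7F, n & 0x7F)


if __name__ == "__main__":
    for f in (440.0, 880.0, 432.0):
        p = frequency_to_pitch_number(f)
        print(f, round(p, 4), pitch_number_to_mts_bytes(p))
```

For 440 Hz the three digits are (69, 0, 0), which matches the note number used in Note On and Note Off messages.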

MIDI Show Control

The MIDI Show Control (MSC) protocol (in the Real Time System Exclusive subset) is an industry standard ratified by the MIDI Manufacturers Association in 1991 which allows all types of media control devices to talk with each other and with computers to perform show control functions in live and canned entertainment applications. Just like musical MIDI (above), MSC does not transmit the actual show media — it simply transmits digital data providing information such as the type, timing and numbering of technical cues called during a multimedia or live theatre performance.

Console Automation

Audio mixers can be controlled with MIDI during console automation.

Alternate Hardware Transports

In addition to the original 31.25 kBaud current-loop, 5-pin DIN transport, transmission of MIDI streams over USB, IEEE 1394 a.k.a FireWire, and Ethernet is now common (see below).
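To give a sense of what the 31.25 kBaud rate means in practice, the following back-of-the-envelope Python sketch estimates how long messages occupy the original DIN link. It assumes the serial framing of the MIDI 1.0 specification (one start bit, eight data bits and one stop bit per byte), which is not stated in the text above.

```python
BAUD_RATE = 31_250   # bits per second on the 5-pin DIN transport
BITS_PER_BYTE = 10   # assumed framing: 1 start + 8 data + 1 stop bit


def transmission_time_ms(num_bytes):
    """Time in milliseconds needed to send num_bytes over the DIN link."""
    return num_bytes * BITS_PER_BYTE / BAUD_RATE * 1000


if __name__ == "__main__":
    # One three-byte Note-On message.
    print(transmission_time_ms(3))
    # Ten Note-On messages sent back to back, e.g. a ten-note chord.
    print(transmission_time_ms(30))
```

Under these assumptions a single three-byte message takes a little under one millisecond, so the notes of a dense chord arrive spread over several milliseconds.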

Over Ethernet

Compared to USB or FireWire, the Ethernet implementation of MIDI provides network routing capabilities, which are extremely useful in studio or stage environments (USB and FireWire are restricted to connections between one computer and some devices and do not provide any routing capabilities). Ethernet is moreover capable of providing the high-bandwidth channel that earlier alternatives to MIDI (such as ZIPI) were intended to bring.

After initial competition between different protocols (IEEE-P1639, MIDI-LAN, IETF RTP-MIDI), the IETF's RTP-MIDI specification for transporting MIDI streams over Ethernet and the Internet appears to be spreading steadily, as a growing number of manufacturers (Apple, CME, Kiss-Box and others) integrate RTP-MIDI into their products. Mac OS X, Windows and Linux drivers are also available that make RTP-MIDI devices appear as standard MIDI devices within these operating systems. IEEE-P1639 is now a dead project. The other, proprietary MIDI-over-IP protocols are gradually disappearing, either because they require expensive licensing to implement (whereas RTP-MIDI is completely open) or because they bring no real advantage, apart from speed, over the original MIDI protocol.

RTP-MIDI Transport Protocol

The RTP-MIDI protocol was published by the IETF in December 2006 as RFC 4695.[2] RTP-MIDI relies on the well-known RTP (Real-time Transport Protocol) layer, which most often runs over UDP but is also compatible with TCP, and which is widely used for real-time audio and video streaming over networks. The RTP layer is easy to implement and requires very little processing power, while providing very useful information to the receiver (network latency, dropped-packet detection, reordered packets, etc.). RTP-MIDI defines a specific payload format that allows the receiver to identify MIDI streams.
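The information that the RTP layer gives a receiver comes from a small fixed header. The Python sketch below packs the 12-byte RTP header as laid out in the RTP specification (RFC 3550) and shows how sequence numbers reveal dropped packets. It is only an illustration of the RTP layer: the MIDI payload format defined by RTP-MIDI is not shown, the payload type value 97 is an arbitrary placeholder, and the function names are hypothetical.

```python
import struct

RTP_VERSION = 2
EXAMPLE_PAYLOAD_TYPE = 97  # placeholder; real sessions negotiate this value


def build_rtp_header(sequence_number, timestamp, ssrc,
                     payload_type=EXAMPLE_PAYLOAD_TYPE, marker=False):
    """Pack the 12-byte fixed RTP header (no padding, extension or CSRC list)."""
    byte0 = RTP_VERSION << 6
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack(
        "!BBHII",
        byte0,
        byte1,
        sequence_number & 0xFFFF,  # 16-bit counter, wraps around
        timestamp & 0xFFFFFFFF,    # media timestamp of this packet
        ssrc & 0xFFFFFFFF,         # identifier of the sending source
    )


def packets_missing(previous_seq, current_seq):
    """Number of packets lost between two consecutively received packets."""
    return (current_seq - previous_seq - 1) & 0xFFFF


if __name__ == "__main__":
    print(build_rtp_header(1, 0, 0x1234).hex(" "))
    print(packets_missing(10, 13))  # two packets (11 and 12) never arrived
```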

RTP-MIDI does not alter the MIDI messages in any way (all messages defined in the MIDI standard are transported transparently over the network), but it adds features such as timestamping and SysEx fragmentation. RTP-MIDI also adds a powerful "journalling" mechanism that allows the receiver to detect and correct dropped MIDI messages. The first part of the RTP-MIDI specification is mandatory for implementors and describes how MIDI messages are encapsulated within the RTP packet. It also describes how the journalling system works. The journalling system itself is not mandatory (journalling is not very useful for LAN applications, but it is very important for WAN applications).

The second part of RTP-MIDI specification describes the session control mechanisms that allow multiple stations to synchronize across the network to exchange RTP-MIDI telegrams. This part is informational only, and it is not required.

RTP-MIDI is included in Apple's Mac OS X as a standard MIDI transport: RTP-MIDI ports appear in Macintosh applications like any other USB or FireWire MIDI port, so any MIDI application running on Mac OS X can use RTP-MIDI transparently. However, Apple's developers considered the session control protocol described in the IETF specification to be too complex, and they created their own session control protocol. Since the session protocol uses a UDP port different from the main RTP-MIDI stream port, the two protocols do not interfere, so the RTP-MIDI implementation in Mac OS X still complies with the IETF specification.

Apple's implementation has been used as a reference by other MIDI manufacturers. The Dutch company Kiss-Box has released a Windows XP RTP-MIDI driver[3] that works only with its own products, and a Linux implementation is currently under development by the Grame association.[4] It therefore seems probable that Apple's implementation will become the de facto standard (and it could even become the MMA reference implementation).

Other applications

MIDI 1.0 is also used as a control protocol in applications other than music, including:

- show control

- theatre lighting

- special effects

- sound design

- VJ-ing

- recording system synchronization

- audio processor control

- computer networking, as demonstrated by the early first-person shooter game MIDI Maze (1987)

- animatronic figure control

- animation parameter control, as demonstrated by Apple Motion v2

Such non-musical applications of the MIDI 1.0 protocol (sometimes over MIDI-DIN, sometimes using other transports) are possible because of its general-purpose nature. Any device built with a standard MIDI Out connector should in theory be able to control any other device with a MIDI In port, just as long as the developers of both devices have the same understanding about the semantic meaning of all the MIDI messages the sending device emits. This agreement can come either because both follow the official MIDI standard specifications, or else in the case of any non-standard functionality, because the message meanings are directly agreed upon by the two manufacturers.

Beyond MIDI 1.0

Although traditional MIDI connections work well for most purposes, a number of newer message protocols and hardware transports have been proposed over the years to try to take the idea to the next level. Some of the more notable efforts include:

OSC

The Open Sound Control (OSC) protocol was developed at CNMAT. OSC has been implemented in the well-known software synthesizer Reaktor and in other innovative projects including SuperCollider, Pure Data, Isadora, Max/MSP, Csound, vvvv and ChucK. The Lemur Input Device, a customizable touch panel with MIDI controller-type functions, also uses OSC. OSC differs from MIDI 1.0 over traditional 5-pin DIN in that it can run at broadband speeds when sent over Ethernet connections; however, the difference is smaller when MIDI itself is also running at broadband speeds over Ethernet. Unfortunately, few mainstream musical applications and no standalone instruments support the protocol so far, making whole-studio interoperability problematic. OSC is not owned by any private company, but it is also not maintained by any standards organization. Since September 2007, there has been a proposal for a common namespace within OSC for communication between controllers, synthesizers and hosts; however, this too would not be maintained by any standards organization.

mLAN

Yamaha has its mLAN[2] protocol, which is based on the IEEE 1394 transport (also known as FireWire) and carries multiple MIDI 1.0 message channels and multiple audio channels. mLAN is not maintained by a standards organization as it is a proprietary protocol. mLAN is open for licensing, although covered by patents owned by Yamaha.

HD Protocol

Development of a version of MIDI for new products which is fully backward compatible is now under discussion in the MMA. Tentatively called "HD Protocol", this new standard would support modern high-speed transports, provide greater range and/or resolution in data values, increase the number of Channels, and support the future introduction of entirely new kinds of messages. Representatives from all sizes and types of companies are involved, from the smallest speciality show control operations to the largest musical equipment manufacturers. No technical details or projected completion dates have been announced.[5][6] Various transports have been proposed for use for the HD-Protocol physical layer, including a call for ACN to be used as the sole or primary transport in show control environments.


See also

- List of MIDI editors and sequencers

- Comparison of MIDI standards

- Commons Portal about MIDI (under construction).

- Karaoke and midi *.kar files.

- LRC (file format)

- The MIDI 1.0 Protocol

- MIDI Machine Control

- MIDI Show Control

- MIDI timecode

- MIDI controller

- MIDI mockup

- MIDI usage and applications

- Midiboard

- Module file

- Multitrack recording

- Music sequencer

- Sound design

- Show control

- Tracker

- Soundfonts

References

- [1] "midi-standards-a-brief-history-and-explanation". http://mustech.net/2006/09/15/midi-standards-a-brief-history-and-explanation.

- [2] IETF RTP-MIDI specification

- [3] Windows XP RTP-MIDI driver download

- [4] Grame's website

- [5] MMA Hosts HD-MIDI Discussion, MIDI Manufacturers Association.

- [6] Finally: MIDI 2.0, O'Reilly Digital Media Blog.

External links

Official MIDI Standards Organizations

- MIDI Manufacturers Association (MMA) – Source for English-language MIDI specs

- Association of Musical Electronics Industry (AMEI) – Source for Japanese-language MIDI specs

Unofficial Sources

- The original MIDI portal for the web - unfortunately hijacked. Last original content according to archive.org: Oct, 4th 2006

- A guide for composers using MIDI software, technical information about MIDI

- Hinton Instruments' MIDI Protocol Guide

- Hinton Instruments' Professional MIDI Guide

- midi files download

- The MIDI Show Control (MSC) standard

- TWEAKHEADZ Labs Introduction to Midi

- How MIDI Works

- MIDI Cable Length limitations

- Scheme of PC MIDI cable

- MIDI controllers come in all shapes and sizes. Music Tech author Keith Gemmell explains how they work.

- Songstuff Midi - Midi Message Format

- Crash Course in MIDI format

- WikiAudio's MIDI simplified specification chart

Other resources

- Disklavier World Public Domain MIDI-music in FIL (e-SEQ format) for YAMAHA Disklavier pianos ~ live performances!

- Virtual MIDI Machine - VMM is a c-like multithreading language that allows a composer to write low-level MIDI algorithms

- MIDI keyboard, frequencies, note names, numbers and Note systems

- midi troubleshooting

- MIDI Tutorials, Guides, Tunings, Examples, MIDI Samples and Latest News

- MIDI Electronic Circuits

- Thousands of MIDI files on BlueMan Web Site : French Variety (some with karaoké), International Success, Movies and TV, Jazz, Classic, XG Creation