Sequent calculus

From Wikipedia, the free encyclopedia

In proof theory and mathematical logic, the sequent calculus is a widely known proof calculus for first-order logic (and propositional logic as a special case of it). The term "sequent calculus" applies both to a family of formal systems sharing a certain style of formal inference, and to its individual members, of which the first, and best known, is known under the name LK, distinguishing it from other systems in the family, such as LJ. Another term for such systems in general is Gentzen systems.

The sequent calculus LK was introduced by Gerhard Gentzen as a tool for studying natural deduction in 1934. It has turned out to be a very useful calculus for constructing logical derivations. The name itself is derived from the German term Logischer Kalkül, meaning "logical calculus." Sequent calculi and the general concepts relating to them are used widely in the whole field of proof theory and mathematical logic.

Contents |

[edit] Introduction

One way to classify different styles of deduction systems is to look at the form of judgments in the system, i.e., which things may appear as the conclusion of a (sub)proof. The simplest judgment form is used in Hilbert-style deduction systems, where a judgment has the form

where B is any formula of first-order-logic (or whatever logic the deduction system applies to, e.g, propositional calculus or a higher-order logic or a modal logic). The theorems are those formulae that appear as the concluding judgment in a valid proof. A Hilbert-style system needs no distinction between formulae and judgments; we make one here solely for comparison with the cases that follow.

The price paid for the simple syntax of a Hilbert-style system is that complete formal proofs tend to get extremely long. Concrete arguments about proofs in such a system almost always appeal to the deduction theorem. This leads to the idea of including the deduction theorem as a formal rule in the system, which happens in natural deduction. In natural deduction, judgments have the shape

where the Ai's and B are again formulae and  . In words, a judgment consists of a list (possibly empty) of formulae on the left-hand side of a turnstile symbol "

. In words, a judgment consists of a list (possibly empty) of formulae on the left-hand side of a turnstile symbol " ", with a single formula on the right-hand side. The theorems are those formulae B such that

", with a single formula on the right-hand side. The theorems are those formulae B such that  (with an empty left-hand side) is the conclusion of a valid proof. (In some presentations of natural deduction, the Ai's and the turnstile are not written down explicitly; instead a two-dimensional notation from which they can be inferred is used).

(with an empty left-hand side) is the conclusion of a valid proof. (In some presentations of natural deduction, the Ai's and the turnstile are not written down explicitly; instead a two-dimensional notation from which they can be inferred is used).

The standard semantics of a judgment in natural deduction is that it asserts that whenever[1] A1, A2, etc., are all true, B will also be true. The judgments

are equivalent in the strong sense that a proof of either one may be extended to a proof of the other.

Finally, sequent calculus generalizes the form of a natural-deduction judgment to

a syntactic object called a sequent. Again, Ai and Bi are formulae, and n and k are nonnegative integers, that is, the left-hand-side or the right-hand-side (or neither or both) may be empty. As in natural deduction, theorems are those B where  is the conclusion of a valid proof.

is the conclusion of a valid proof.

The standard semantics of a sequent is an assertion that whenever all of the Ai's is true, at least one of the Bi will also be true. One way to express this is that a comma to the left of the turnstile should be thought of as an "and", and a comma to the right of the turnstile should be thought of as an (inclusive) "or". The sequents

are equivalent in the strong sense that a proof of either one may be extended to a proof of the other.

At first sight, this extension of the judgment form may appear to be a strange complication — it is not motivated by an obvious shortcoming of natural deduction, and it is initially confusing that the comma seems to mean entirely different things on the two sides of the turnstile. However, in a classical context the semantics of the sequent can also (by propositional tautology) be expressed as

In this formulation, the only difference between formulae on either side of the turnstile is that those on the left are negated. Thus, swapping left for right in a sequent corresponds to negating all of the constituent formulae. This means that a symmetry such as De Morgan's laws, which manifests itself as logical negation on the semantic level, translates directly into a left-right symmetry of sequents — and indeed, the inference rules in sequent calculus for dealing with conjunction (∧) are mirror images of those dealing with disjunction (∨).

Many logicians feel that this symmetric presentation offers a deeper insight in the structure of the logic than other styles of proof system, where the classical duality of negation is not as apparent in the rules.

[edit] The system LK

This section introduces the rules of the sequent calculus LK, as introduced by Gentzen in 1934. [2] A (formal) proof in this calculus is a sequence of sequents, where each of the sequents is derivable from sequents appearing earlier in the sequence by using one of the rules below.

[edit] Inference rules

The following notation will be used:

known as the turnstile, separates the assumptions on the left from the propositions on the right

known as the turnstile, separates the assumptions on the left from the propositions on the right- A and B denote formulae of first-order predicate logic (one may also restrict this to propositional logic),

- Γ,Δ,Σ, and Π are finite (possibly empty) sequences of formulae, called contexts,

- when on the left of the

, the sequence of formulas is considered conjunctively (all assumed to hold at the same time),

, the sequence of formulas is considered conjunctively (all assumed to hold at the same time), - while on the right of the

, the sequence of formulas is considered disjunctively (at least one of the formulas must hold for any assignment of variables),

, the sequence of formulas is considered disjunctively (at least one of the formulas must hold for any assignment of variables),

- when on the left of the

- t denotes an arbitrary term,

- A[t] denotes a formula A, in which some occurrences of a term t are of interest

- A[s / t] denotes the formula that is obtained by substituting the term s for the specified occurrences of t in A[t],

- x and y denote variables,

- a variable is said to occur free within a formula if its only occurrences in the formula are not within the scope of quantifiers

or

or  .

. - WL and WR stand for Weakening Left/Right, CL and CR for Contraction, and PL and PR for Permutation.

| Axiom: | Cut: |

|

|

|

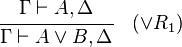

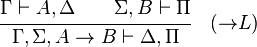

| Left logical rules: | Right logical rules: |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| Left structural rules: | Right structural rules: |

|

|

|

|

|

|

|

|

|

Restrictions: In the rules (∀R) and (∃L), the variable y must not be free within Γ, A[x/y], or Δ.

[edit] An intuitive explanation

The above rules can be divided into two major groups: logical and structural ones. Each of the logical rules introduces a new logical formula either on the left or on the right of the turnstile  . In contrast, the structural rules operate on the structure of the sequents, ignoring the exact shape of the formulae. The two exceptions to this general scheme are the axiom of identity (I) and the rule of (Cut).

. In contrast, the structural rules operate on the structure of the sequents, ignoring the exact shape of the formulae. The two exceptions to this general scheme are the axiom of identity (I) and the rule of (Cut).

Although stated in a formal way, the above rules allow for a very intuitive reading in terms of classical logic. Consider, for example, the rule (∧L1). It says that, whenever one can prove that Δ can be concluded from some sequence of formulae that contain A, then one can also conclude Δ from the (stronger) assumption, that A∧B holds. Likewise, the rule (¬R) states that, if Γ and A suffice to conclude Δ, then from Γ alone one can either still conclude Δ or A must be false, i.e. ¬A holds. All the rules can be interpreted in this way.

For an intuition about the quantifier rules, consider the rule (∀R). Of course concluding that ∀x A[x/y] holds just from the fact that A[y] is true is not in general possible. If, however, the variable y is not mentioned elsewhere (i.e. it can still be chosen freely, without influencing the other formulae), then one may assume, that A[y] holds for any value of y. The other rules should then be pretty straightforward.

Instead of viewing the rules as descriptions for legal derivations in predicate logic, one may also consider them as instructions for the construction of a proof for a given statement. In this case the rules can be read bottom-up. For example, (∧R) says that, in order to prove that A∧B follows from the assumptions Γ and Σ, it suffices to prove that A can be concluded from Γ and B can be concluded from Σ, respectively. Note that, given some antecedent, it is not clear how this is to be split into Γ and Σ. However, there are only finitely many possibilities to be checked since the antecedent by assumption is finite. This also illustrates how proof theory can be viewed as operating on proofs in a combinatorial fashion: given proofs for both A and B, one can construct a proof for A∧B.

When looking for some proof, most of the rules offer more or less direct recipes of how to do this. The rule of cut is different: It states that, when a formula A can be concluded and this formula may also serve as a premise for concluding other statements, then the formula A can be "cut out" and the respective derivations are joined. When constructing a proof bottom-up, this creates the problem of guessing A (since it does not appear at all below). This issue is addressed in the theorem of cut-elimination.

The second rule that is somewhat special is the axiom of identity (I). The intuitive reading of this is obvious: A proves A.

Observe that all rules have mirror companions, except the ones for implication. This reflects the fact that the usual language of first-order logic does not include the "is not implied by" connective  that would be the De Morgan dual of implication. Adding such a connective with its natural rules would make the calculus completely left-right symmetric.

that would be the De Morgan dual of implication. Adding such a connective with its natural rules would make the calculus completely left-right symmetric.

[edit] An example derivation

As for an example, this is the sequential derivation of  (A ∨ ¬A), known as the Law of excluded middle (tertium non datur in Latin).

(A ∨ ¬A), known as the Law of excluded middle (tertium non datur in Latin).

This derivation also emphasizes the strictly formal structure of a syntactic calculus. For example, the right logical rules as defined above always act on the first formula of the right sequent, such that the application of (PR) is formally required. This very rigid reasoning may at first be difficult to understand, but it forms the very core of the difference between syntax and semantics in formal logics. Although we know that we mean the same with the formulae A ∨ ¬A and ¬A ∨ A, a derivation of the latter would not be equivalent to the one that is given above. However, one can make syntactic reasoning more convenient by introducing lemmas, i.e. predefined schemes for achieving certain standard derivations. As an example one could show that the following is a legal transformation:

Once a general sequence of rules is known for establishing this derivation, one can use it as an abbreviation within proofs. However, while proofs become more readable when using good lemmas, it can also make the process of derivation more complicated, since there are more possible choices to be taken into account. This is especially important when using proof theory (as often desired) for automated deduction.

[edit] Structural rules

The structural rules deserve some additional discussion. The names of the rules are Weakening (W), Contraction (C), and Permutation (P).

Weakening allows the addition of arbitrary elements to a sequence. Intuitively, this is allowed in the antecedent because we can always add assumptions to our proof. It is allowed in the succedent because the succedent is a disjunction of elements, so only one need be provable at a time, and we can add additional unproven propositions.

Contraction and Permutation assure that neither the order (P) nor the multiplicity of occurrences (C) of elements of the sequences matters. Thus, one could instead of sequences also consider sets.

The extra effort of using sequences, however, is justified since part or all of the structural rules may be omitted. Doing so, one obtains the so-called substructural logics.

[edit] Properties of the system LK

This system of rules can be shown to be both sound and complete with respect to first-order logic, i.e. a statement A follows semantically from a set of premises Γ ( ) iff the sequent

) iff the sequent  can be derived by the above rules.

can be derived by the above rules.

In the sequent calculus, the rule of cut is admissible. This result is also referred to as Gentzen's Hauptsatz ("Main Theorem").

[edit] Variants

The above rules can be modified in various ways:

[edit] Minor structural alternatives

There is some freedom of choice regarding the technical details of how sequents and structural rules are formalized. As long as every derivation in LK can be effectively transformed to a derivation using the new rules and vice versa, the modified rules may still be called LK.

First of all, as mentioned above, the sequents can be viewed to consist of sets or multisets. In this case, the rules for permuting and (when using sets) contracting formulae are obsolete.

The rule of weakening will become admissible, when the axiom (I) is changed, such that any sequent of the form  can be concluded. This means that A proves A in any context. Any weakening that appears in a derivation can then be performed right at the start. This may be a convenient change when constructing proofs bottom-up.

can be concluded. This means that A proves A in any context. Any weakening that appears in a derivation can then be performed right at the start. This may be a convenient change when constructing proofs bottom-up.

Independent of these one may also change the way in which contexts are split within the rules: In the cases (∧R), (∨L), and (→L) the left context is somehow split into Γ and Σ when going upwards. Since contraction allows for the duplication of these, one may assume that the full context is used in both branches of the derivation. By doing this, one assures that no important premises are lost in the wrong branch. Using weakening, the irrelevant parts of the context can be eliminated later.

[edit] Substructural logics

Alternatively, one may restrict or forbid the use of some of the structural rules. This yields a variety of substructural logic systems. They are generally weaker than LK (i.e., they have fewer theorems), and thus not complete with respect to the standard semantics of first-order logic. However, they have other interesting properties that have led to applications in theoretical computer science and artificial intelligence.

[edit] Intuitionistic sequent calculus: System LJ

Surprisingly, some small changes in the rules of LK suffice in order to turn it into a proof system for intuitionistic logic. To this end, one has to restrict to intuitionistic sequents (i.e., sequents with exactly one formula on the right-hand side) and modify the rule (∨L) as follows:

where C is an arbitrary formula.

The resulting system is called LJ. It is sound and complete with respect to intuitionistic logic and admits a similar cut-elimination proof.

(Beware that though the judgments of LJ look like those of natural deduction, the character of the entire system is completely different because its inference rules are restricted versions of the symmetric LK rules rather than the inherently asymmetric rules of natural deduction).

[edit] References

- Girard, Jean-Yves; Paul Taylor, Yves Lafont (1990) [1989]. Proofs and Types. Cambridge University Press (Cambridge Tracts in Theoretical Computer Science, 7). ISBN 0-521-37181-3. http://www.cs.man.ac.uk/~pt/stable/Proofs+Types.html.

- ^ Here, "whenever" is used as an informal abbreviation "for every assignment of values to the free variables in the judgment"

- ^ Gentzen, Gerhard (1934-1935). "Untersuchungen über das logische Schließen". Mathematische Zeitschrift 39: 405–431. doi:.

![\cfrac{\Gamma, A[t] \vdash \Delta}{\Gamma, \forall x A[x/t] \vdash \Delta} \quad ({\forall}L)](http://upload.wikimedia.org/math/1/6/2/162cf7be3ca1941f2e9fea347cd996dd.png)

![\cfrac{\Gamma \vdash A[y], \Delta}{\Gamma \vdash \forall x A[x/y], \Delta} \quad ({\forall}R)](http://upload.wikimedia.org/math/9/e/4/9e40fefc2506bd2ca4bd4364e8711262.png)

![\cfrac{\Gamma, A[y] \vdash \Delta}{\Gamma, \exist x A[x/y] \vdash \Delta} \quad ({\exist}L)](http://upload.wikimedia.org/math/5/c/c/5cceb9944fc3539ed9e4fe3b20c249e2.png)

![\cfrac{\Gamma \vdash A[t], \Delta}{\Gamma \vdash \exist x A[x/t], \Delta} \quad ({\exist}R)](http://upload.wikimedia.org/math/6/b/8/6b85be398df03b264eba6983ec417e66.png)