Binary logarithm

From Wikipedia, the free encyclopedia

In mathematics, the binary logarithm (log2 n) is the logarithm for base 2. It is the inverse function of  .

.

Contents |

[edit] Applications

[edit] Information theory

The binary logarithm is often used in computer science and information theory (where it is frequently written lg n, or ld n, from Latin logarithmus dualis [although the ISO specification is that it should be lb (n), lg (n) being reserved for log10 n] ), because it is closely connected to the binary numeral system. The number of digits (bits) in the binary representation of a positive integer n is the integral part of 1 + lg n, i.e.

In information theory, the definition of the amount of self-information and information entropy involves the binary logarithm; this is needed because the unit of information, the bit, refers to information resulting from an occurrence of one of two equally probable alternatives.

[edit] Computational complexity

The binary logarithm also frequently appears in the analysis of algorithms. If a number n greater than 1 is divided by 2 repeatedly, the number of iterations needed to get a value at most 1 is again the integral part of lg n. This idea is used in the analysis of several algorithms and data structures. For example, in binary search, the size of the problem to be solved is halved with each iteration, and therefore roughly lg n iterations are needed to obtain a problem of size 1, which is solved easily in constant time. Similarly, a perfectly balanced binary search tree containing n elements has height lg n+1.

However, the running time of an algorithm is usually expressed in big O notation, ignoring constant factors. Since log2 n = (1/logk 2)logk n, where k can be any number greater than 1, algorithms that run in O(log2 n) time can also be said to run in, say, O(log13 n) time. The base of the logarithm in expressions such as O(log n) or O(n log n) is therefore not important. In other contexts, though, the base of the logarithm needs to be specified. For example O(2lg n) is not the same as O(2ln n) because the former is equal to O(n) and the latter to O(n0.6931...).

Algorithms with running time n lg n are sometimes called linearithmic. Some examples of algorithms with running time O(lg n) or O(n lg n) are:

[edit] Using calculators

An easy way to calculate the log2(n) on calculators that do not have a log2-function is to use the natural logarithm "ln" or the common logarithm "log" functions, which are found on most "scientific calculators". The formulae for this are:

- log2(n) = ln(n)/ln(2) = log(n)/log(2)

so

- log2(n) = loge(n)×1.442695... = log10(n)×3.321928...

and this produces the curiosity that log2(n) is within 0.6% of loge(n)+log10(n). loge(n)+log10(n) is actually log2.008135929...(n) where the base is elog10e(10)

[edit] Algorithm

[edit] Integer

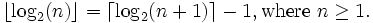

For integer domain and range, the binary logarithm can be computed rounding up, or rounding down. These two forms of integer binary logarithm are related by this formula:

The following example code in the C language computes the binary logarithm (rounding down) of an integer, rounded down. [2] The operator '>>' represents 'unsigned right shift'. The rounding down form of binary logarithm is identical to computing the position of the most significant 1 bit.

/** * Returns the floor form of binary logarithm for a 32 bit integer. * -1 is returned if n is 0. */ int floorLog2(unsigned int n) { int pos = 0; if (n >= 1<<16) { n >>= 16; pos += 16; } if (n >= 1<< 8) { n >>= 8; pos += 8; } if (n >= 1<< 4) { n >>= 4; pos += 4; } if (n >= 1<< 2) { n >>= 2; pos += 2; } if (n >= 1<< 1) { pos += 1; } return ((n == 0) ? (-1) : pos); }

[edit] Real number

For a general real number, the binary logarithm may be computed in two parts:

- Compute the integer part,

- Compute the fractional part

Computing the integral part is straightforward. For any x > 0, there exists a unique integer n such that  , or equivalently

, or equivalently  . Now the integral part of the logarithm is simply n, and the fractional part is lg(2 − nx). In other words:

. Now the integral part of the logarithm is simply n, and the fractional part is lg(2 − nx). In other words:

The fractional part of the result is lgy, and can be computed recursively, using only elementary multiplication and division. To compute the fractional part:

- We start with a real number

.

. - Now square y repeatedly until the result is

. Call the result z, and let m be the number of squarings needed.

. Call the result z, and let m be the number of squarings needed. - Now

and so lgz = 2mlgy. Thus:

and so lgz = 2mlgy. Thus:

- lgy = 2 − m + 2 − mlg(z / 2)

- Notice that z / 2 is once again a real number in the interval [1,2).

- If z / 2 < 1 + ε (where ε is the desired precision), then

and we are done. Otherwise, return to step 1, and compute the binary logarithm of z / 2 using the same method recursively.

and we are done. Otherwise, return to step 1, and compute the binary logarithm of z / 2 using the same method recursively.

The result of all of this is:

After computing  , the error in the result is less than

, the error in the result is less than  .

.

[edit] Example code

The following Python program computes the binary logarithm using the recursive method outlined above, showing the steps along the way:[3]

def lg(x): ip = 0 rem = x # Integer part while rem<1: ip -= 1 rem *= 2 while rem>=2: ip += 1 rem /= 2 print "lg(%g) = %d + lg(%g)" % (x, ip, rem) # Fractional part res = ip + frac_lg(rem) return res def frac_lg(x, fp=1.0, tol=1e-10): assert 1<=x<2 # terminate the recursion if we're close enough if fp<tol: return 0 # square until >= 2 rem = x m = 0 while rem < 2: m += 1 fp /= 2 rem *= rem # now: # rem = x**2**m, fp = 2**-m # => lg(rem) = lg(x)*2**m = lg(x)/fp # => lg(x) = fp * ( lg(rem/2) + 1 ) # = fp + fp*lg(rem/2) # time for recursion! print "lg(x=%g) \t= 2**%d * ( 1 + lg(%g) )" % (x, -m, rem/2) return fp + frac_lg(rem/2, fp) if __name__ == '__main__': value = 4.5 print " X =", value result = lg(value) print "lg(X) =", result # Sample output # # $ python log2.py # X = 4.5 # lg(4.5) = 2 + lg(1.125) # lg(x=1.125) = 2**-3 * ( 1 + lg(1.28289) ) # lg(x=1.28289) = 2**-2 * ( 1 + lg(1.35435) ) # lg(x=1.35435) = 2**-2 * ( 1 + lg(1.68226) ) # lg(x=1.68226) = 2**-1 * ( 1 + lg(1.415) ) # lg(x=1.415) = 2**-1 * ( 1 + lg(1.00111) ) # lg(x=1.00111) = 2**-10 * ( 1 + lg(1.55742) ) # lg(x=1.55742) = 2**-1 * ( 1 + lg(1.21278) ) # lg(x=1.21278) = 2**-2 * ( 1 + lg(1.08166) ) # lg(x=1.08166) = 2**-4 * ( 1 + lg(1.75563) ) # lg(x=1.75563) = 2**-1 * ( 1 + lg(1.54113) ) # lg(x=1.54113) = 2**-1 * ( 1 + lg(1.18753) ) # lg(x=1.18753) = 2**-3 * ( 1 + lg(1.97759) ) # lg(x=1.97759) = 2**-1 * ( 1 + lg(1.95543) ) # lg(x=1.95543) = 2**-1 * ( 1 + lg(1.91185) ) # lg(x=1.91185) = 2**-1 * ( 1 + lg(1.82758) ) # lg(X) = 2.16992500139 #

Since Python does not optimize tail recursion, this can be implemented more efficiently with iteration. Here is an iterative version:

def lg(x, tol=1e-13): res = 0.0 # Integer part while x<1: res -= 1 x *= 2 while x>=2: res += 1 x /= 2 # Fractional part fp = 1.0 while fp>=tol: fp /= 2 x *= x if x >= 2: x /= 2 res += fp return res

[edit] References

- ^ Warren Jr., Henry S. (2002), Hacker's Delight, Addison Wesley, pp. pp. 83, ISBN 978-0201914658

- ^ Warren Jr., Henry S. (2002), Hacker's Delight, Addison Wesley, pp. pp. 215, ISBN 978-0201914658

- ^ adapted from Logarithm Function (Python)

[edit] See also

- natural logarithm (base e),

- common logarithm (base 10)