Data modeling

From Wikipedia, the free encyclopedia

Data modeling in software engineering is the process of creating a data model by applying formal data model descriptions using data modeling techniques.


Overview

Data modeling is a method used to define and analyze the data requirements needed to support the business processes of an organization. The data requirements are recorded as a conceptual data model with associated data definitions. The actual implementation of the conceptual model is called a logical data model, and implementing one conceptual data model may require multiple logical data models. Data modeling defines the relationships between data elements and structures.[2] Data modeling techniques are used to model data in a standard, consistent, predictable manner in order to manage it as a resource. The use of a standard technique is strongly recommended for all projects requiring a standard means of defining and analyzing the data resources within an organization. Such projects include:[3]

- incorporating a data modeling technique into a methodology;

- using a data modeling technique to manage data as a resource;

- using a data modeling technique for the integration of information systems;

- using a data modeling technique for designing computer databases.

Data modeling may be performed during various types of projects and in multiple phases of projects. Data models are progressive; there is no such thing as the final data model for a business or application. Instead, a data model should be considered a living document that will change in response to a changing business. Data models should ideally be stored in a repository so that they can be retrieved, expanded, and edited over time. Whitten (2004) identified two types of data modeling:[4]

- Strategic data modeling: This is part of the creation of an information systems strategy, which defines an overall vision and architecture for information systems. Information engineering is a methodology that embraces this approach.

- Data modeling during systems analysis: In systems analysis, logical data models are created as part of the development of new databases.

Data modeling is also a technique for defining business requirements for a database. It is sometimes called database modeling because a data model is eventually implemented in a database.[4]

Data modeling topics

Data models

Data models support data and computer systems by providing the definition and format of data. If this is done consistently across systems, then compatibility of data can be achieved. If the same data structures are used to store and access data, then different applications can share data. However, systems and interfaces often cost more than they should to build, operate, and maintain. They may also constrain the business rather than support it. A major cause is that the quality of the data models implemented in systems and interfaces is poor:[1]

- Business rules, specific to how things are done in a particular place, are often fixed in the structure of a data model. This means that small changes in the way business is conducted lead to large changes in computer systems and interfaces.

- Entity types are often not identified, or incorrectly identified. This can lead to replication of data, data structure, and functionality, together with the attendant costs of that duplication in development and maintenance.

- Data models for different systems are arbitrarily different. The result is that complex interfaces are required between systems that share data. These interfaces can account for between 25% and 70% of the cost of current systems.

- Data cannot be shared electronically with customers and suppliers, because the structure and meaning of data have not been standardised. For example, engineering design data and drawings for process plant are still sometimes exchanged on paper.

The reason for these problems is a lack of standards that will ensure that data models will both meet business needs and be consistent.[1]
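The first problem in the list above, a business rule fixed in the structure of the model, can be sketched with two alternative schemas. This is only an illustration: the `employee` and `reports_to` tables, their columns, and the choice of SQLite are assumptions made for the example, not taken from the cited source.

```python
import sqlite3

# Rule fixed in structure: a single manager_id column means every employee
# can have at most one manager. Allowing two managers would need a schema change.
RIGID_DDL = "CREATE TABLE employee (emp_id INTEGER PRIMARY KEY, manager_id INTEGER);"

# Rule kept out of the structure: reporting lines are stored as rows, so a
# second manager is just another row (hypothetical table and column names).
FLEXIBLE_DDL = """
CREATE TABLE employee (emp_id INTEGER PRIMARY KEY);
CREATE TABLE reports_to (
    emp_id     INTEGER REFERENCES employee(emp_id),
    manager_id INTEGER REFERENCES employee(emp_id),
    PRIMARY KEY (emp_id, manager_id)
);
"""

def managers_of(conn, emp_id):
    """Return the sorted manager ids recorded for one employee."""
    rows = conn.execute(
        "SELECT manager_id FROM reports_to WHERE emp_id = ? ORDER BY manager_id",
        (emp_id,),
    )
    return [row[0] for row in rows]

def demo():
    """Build the flexible schema and give employee 3 two managers."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(FLEXIBLE_DDL)
    conn.executemany("INSERT INTO employee VALUES (?)", [(1,), (2,), (3,)])
    conn.executemany("INSERT INTO reports_to VALUES (?, ?)", [(3, 1), (3, 2)])
    return managers_of(conn, 3)
```

In the second schema a change in how the business assigns managers is absorbed by the data, whereas the first schema would force changes to the table and to every interface that reads it.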

Conceptual, logical and physical schemas

According to ANSI (1975), a data model instance may be one of three kinds:[5]

- Conceptual schema: describes the semantics of a domain, which is the scope of the model. For example, it may be a model of the interest area of an organization or industry. It consists of entity classes, representing kinds of things of significance in the domain, and relationship assertions about associations between pairs of entity classes. A conceptual schema specifies the kinds of facts or propositions that can be expressed using the model. In that sense, it defines the allowed expressions in an artificial 'language' with a scope that is limited by the scope of the model.

- Logical schema: describes the semantics as represented by a particular data manipulation technology. It consists of descriptions of tables and columns, object-oriented classes, and XML tags, among other things.

- Physical schema: describes the physical means by which data are stored. It is concerned with partitions, CPUs, tablespaces, and the like.

The significance of this approach, according to ANSI, is that it allows the three perspectives to be relatively independent of each other. Storage technology can change without affecting either the logical or the conceptual model. The table/column structure can change without (necessarily) affecting the conceptual model. In each case, of course, the structures must remain consistent with the other models. The table/column structure may be different from a direct translation of the entity classes and attributes, but it must ultimately carry out the objectives of the conceptual entity class structure. Early phases of many software development projects emphasize the design of a conceptual data model. Such a design can be detailed into a logical data model. In later stages, this model may be translated into a physical data model. However, it is also possible to implement a conceptual model directly.
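The separation of the three levels can be sketched in code. The example below is an illustration under stated assumptions: the `Customer` and `Order` entity classes, the table names, and the use of SQLite are invented for the sketch and are not part of the ANSI report.

```python
import sqlite3
from dataclasses import dataclass

# Conceptual level: entity classes and a relationship assertion,
# independent of any storage technology.
@dataclass(frozen=True)
class EntityClass:
    name: str

@dataclass(frozen=True)
class Relationship:
    subject: EntityClass
    verb: str
    obj: EntityClass

CUSTOMER = EntityClass("Customer")
ORDER = EntityClass("Order")
CONCEPTUAL = [Relationship(CUSTOMER, "places", ORDER)]

# Logical level: the same semantics expressed as tables and columns.
LOGICAL_DDL = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE customer_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id)
);
"""

# Physical level: a storage-oriented choice (an index) that can be added
# or dropped without touching the logical tables.
PHYSICAL_DDL = "CREATE INDEX idx_order_customer ON customer_order(customer_id);"

def build_database():
    """Create an in-memory database from the logical and physical definitions."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(LOGICAL_DDL)
    conn.executescript(PHYSICAL_DDL)
    return conn

def object_names(conn, kind):
    """List schema objects of one kind ('table' or 'index') by name."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = ? ORDER BY name", (kind,)
    )
    return [row[0] for row in rows]
```

Dropping or changing the index alters only the physical level; the tables and the conceptual relationship "Customer places Order" stay as they are.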

Data modeling process

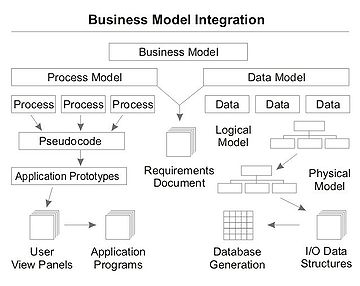

In the context of business process integration (see figure), data modeling results in database generation. It complements business process modeling, which results in application programs to support the business processes.[6]

The actual database design is the process of producing a detailed data model of a database. This logical data model contains all the logical and physical design choices and physical storage parameters needed to generate a design in a Data Definition Language, which can then be used to create a database. A fully attributed data model contains detailed attributes for each entity. The term database design can be used to describe many different parts of the design of an overall database system. Principally, and most correctly, it can be thought of as the logical design of the base data structures used to store the data. In the relational model these are the tables and views. In an object database the entities and relationships map directly to object classes and named relationships. However, the term database design could also be applied to the overall process of designing not just the base data structures, but also the forms and queries used as part of the overall database application within the Database Management System (DBMS).
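Generating a Data Definition Language design from a fully attributed model can be sketched as follows. The `MODEL` dictionary, its entities and attributes, and the `generate_ddl` helper are hypothetical and serve only to illustrate the step; real modeling tools use richer metadata.

```python
import sqlite3

# Hypothetical fully attributed model:
# entity name -> list of (attribute, SQL type, constraint).
MODEL = {
    "supplier": [
        ("supplier_id", "INTEGER", "PRIMARY KEY"),
        ("name", "TEXT", "NOT NULL"),
    ],
    "part": [
        ("part_id", "INTEGER", "PRIMARY KEY"),
        ("description", "TEXT", "NOT NULL"),
        ("supplier_id", "INTEGER", "REFERENCES supplier(supplier_id)"),
    ],
}

def generate_ddl(model):
    """Render one CREATE TABLE statement per entity in the model."""
    statements = []
    for table, attributes in model.items():
        columns = ",\n".join(
            f"    {name} {sql_type} {constraint}".rstrip()
            for name, sql_type, constraint in attributes
        )
        statements.append(f"CREATE TABLE {table} (\n{columns}\n);")
    return "\n".join(statements)

def create_database(model):
    """Run the generated DDL against an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(generate_ddl(model))
    return conn
```

Calling `create_database(MODEL)` yields a database whose tables mirror the entities of the model, which is the sense in which the model "generates" the database.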

In the process, system interfaces account for 25% to 70% of the development and support costs of current systems. The primary reason for this cost is that these systems do not share a common data model. If data models are developed on a system-by-system basis, then not only is the same analysis repeated in overlapping areas, but further analysis must be performed to create the interfaces between them. Most systems contain the same basic components, redeveloped for a specific purpose. For instance, the following can use the same basic classification model as a component:[1]

- Materials Catalogue,

- Product and Brand Specifications,

- Equipment specifications.

The same components are redeveloped because we have no way of telling they are the same thing.
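A shared classification component of the kind mentioned above might look like the sketch below. The `ClassificationModel` class and the sample item identifiers are assumptions made for illustration; the source does not prescribe any particular design.

```python
from dataclasses import dataclass, field

@dataclass
class ClassificationModel:
    """Reusable component: assigns items to named classes."""
    subject: str
    classes: dict = field(default_factory=dict)  # class name -> set of item ids

    def classify(self, item_id: str, class_name: str) -> None:
        """Record that an item belongs to a class, creating the class if needed."""
        self.classes.setdefault(class_name, set()).add(item_id)

    def members(self, class_name: str) -> list:
        """Return the sorted item ids in a class (empty if the class is unknown)."""
        return sorted(self.classes.get(class_name, set()))

# One component serves three systems instead of being redeveloped three times.
materials = ClassificationModel("Materials Catalogue")
products = ClassificationModel("Product and Brand Specifications")
equipment = ClassificationModel("Equipment specifications")

materials.classify("M-100", "carbon steel")
materials.classify("M-101", "carbon steel")
equipment.classify("P-7", "centrifugal pump")
```

Because all three catalogues are instances of one component, it is evident that they share the same structure, and an interface between them needs no translation of the classification logic.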

Modeling methodologies

Data models represent information areas of interest. While there are many ways to create data models, according to Len Silverston (1997)[7] only two modeling methodologies stand out, top-down and bottom-up:

- Bottom-up models are often the result of a reengineering effort. They usually start with existing data structures: forms, fields on application screens, or reports. These models are usually physical, application-specific, and incomplete from an enterprise perspective. They may not promote data sharing, especially if they are built without reference to other parts of the organization.[7]

- Top-down logical data models, on the other hand, are created in an abstract way by getting information from people who know the subject area. A system may not implement all the entities in a logical model, but the model serves as a reference point or template.[7]

Sometimes models are created in a mixture of the two methods: by considering the data needs and structure of an application and by consistently referencing a subject-area model. Unfortunately, in many environments the distinction between a logical data model and a physical data model is blurred. In addition, some CASE tools don’t make a distinction between logical and physical data models.[7]
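A bottom-up effort typically begins by reading the structures that already exist. The sketch below recovers a table-and-column description from an existing SQLite database; the `invoice` tables are a made-up legacy schema used only for illustration, and SQLite itself is an assumption of the example.

```python
import sqlite3

def reverse_engineer(conn):
    """Return {table: [(column, declared_type), ...]} read from an existing database."""
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    model = {}
    for table in tables:
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
        info = conn.execute(f"PRAGMA table_info({table})").fetchall()
        model[table] = [(col[1], col[2]) for col in info]
    return model

def legacy_database():
    """Create a small hypothetical legacy schema to reverse-engineer."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE invoice (invoice_no INTEGER PRIMARY KEY, cust_name TEXT, total REAL);
        CREATE TABLE invoice_line (invoice_no INTEGER, item TEXT, qty INTEGER);
        """
    )
    return conn
```

The recovered model is physical and application-specific, as described above: it shows a `cust_name` column but says nothing about whether a customer entity exists elsewhere in the organization.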

Entity relationship diagrams

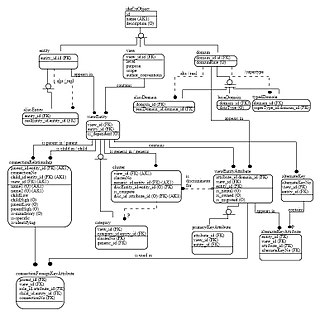

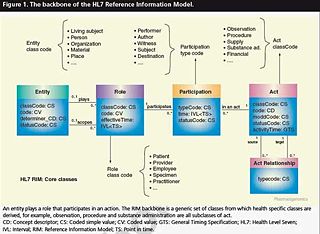

There are several notations for data modeling. The actual model is frequently called an "entity-relationship model", because it depicts data in terms of the entities and relationships described in the data.[4] An entity-relationship model (ERM) is an abstract conceptual representation of structured data. Entity-relationship modeling is a relational schema database modeling method, used in software engineering to produce a type of conceptual data model (or semantic data model) of a system, often a relational database, and its requirements in a top-down fashion.

These models are used in the first stage of information system design, during requirements analysis, to describe information needs or the type of information that is to be stored in a database. The data modeling technique can be used to describe any ontology (i.e., an overview and classification of the terms used and their relationships) for a certain universe of discourse (i.e., area of interest).
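The core idea of an entity-relationship model, entities with attributes connected by named relationships of a given cardinality, can be expressed in a few lines. The `Entity` and `Relationship` classes and the `Department`/`Employee` example are illustrative assumptions, not a standard notation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Entity:
    name: str
    attributes: tuple

@dataclass(frozen=True)
class Relationship:
    name: str
    source: Entity
    target: Entity
    cardinality: str  # "1:1", "1:N", "N:1" or "N:N", read from source to target

def describe(rel):
    """Render a relationship as a sentence, the way a diagram edge is read aloud."""
    words = {"1": "one", "N": "many"}
    left, right = rel.cardinality.split(":")
    return f"{words[left]} {rel.source.name} {rel.name} {words[right]} {rel.target.name}"

department = Entity("Department", ("dept_id", "name"))
employee = Entity("Employee", ("emp_id", "name"))
employs = Relationship("employs", department, employee, "1:N")
```

Here `describe(employs)` reads the relationship as "one Department employs many Employee", which is the information an ER diagram conveys graphically.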

Several techniques have been developed for the design of data models. While these methodologies guide data modelers in their work, two different people using the same methodology will often come up with very different results. Most notable are:

- Bachman diagrams

- Barker's Notation

- Business rules

- Data Vault Modeling

- Entity-relationship model

- Extended Backus–Naur form

- IDEF1X

- Object-relational mapping

- Object Role Modeling

- Relational Model

Generic data modeling

Generic data models are generalizations of conventional data models. They define standardised general relation types, together with the kinds of things that may be related by such a relation type. The definition of a generic data model is similar to the definition of a natural language. For example, a generic data model may define relation types such as a 'classification relation', being a binary relation between an individual thing and a kind of thing (a class), and a 'part-whole relation', being a binary relation between two things, one with the role of part and the other with the role of whole, regardless of the kind of things that are related.

Given an extensible list of classes, this allows any individual thing to be classified and part-whole relations to be specified for any individual object. By standardising an extensible list of relation types, a generic data model enables the expression of an unlimited number of kinds of facts and will approach the capabilities of natural languages. Conventional data models, on the other hand, have a fixed and limited domain scope, because the instantiation (usage) of such a model only allows expressions of kinds of facts that are predefined in the model.
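A generic data model can be approximated by storing every fact as a (thing, relation type, thing) triple and keeping the list of relation types extensible. The `GenericModel` class below is a minimal sketch under that assumption; the relation type names and sample facts are invented for the example.

```python
class GenericModel:
    """Facts are (left, relation_type, right) triples over an extensible set of relation types."""

    def __init__(self):
        # The two standard relation types named in the text.
        self.relation_types = {"is classified as", "is part of"}
        self.facts = set()

    def add_relation_type(self, name):
        """Extend the model with a new kind of fact without changing its structure."""
        self.relation_types.add(name)

    def assert_fact(self, left, relation_type, right):
        """Store a fact, rejecting relation types that have not been defined."""
        if relation_type not in self.relation_types:
            raise ValueError(f"unknown relation type: {relation_type}")
        self.facts.add((left, relation_type, right))

    def related(self, relation_type, right):
        """Return the sorted left-hand things related to `right` by `relation_type`."""
        return sorted(
            left for left, rel, obj in self.facts if rel == relation_type and obj == right
        )

model = GenericModel()
model.assert_fact("P-101", "is classified as", "pump")
model.assert_fact("impeller-7", "is part of", "P-101")
model.add_relation_type("is connected to")
model.assert_fact("P-101", "is connected to", "V-3")
```

A conventional model would need a new table or column to record connections; here the new kind of fact only required a new entry in the list of relation types.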

Semantic data modeling

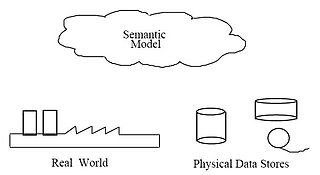

The logical data structure of a DBMS, whether hierarchical, network, or relational, cannot totally satisfy the requirements for a conceptual definition of data because it is limited in scope and biased toward the implementation strategy employed by the DBMS.

Therefore, the need to define data from a conceptual view has led to the development of semantic data modeling techniques, that is, techniques to define the meaning of data within the context of its interrelationships with other data. As illustrated in the figure, the real world, in terms of resources, ideas, events, etc., is symbolically defined within physical data stores. A semantic data model is an abstraction which defines how the stored symbols relate to the real world. Thus, the model must be a true representation of the real world.[3]

A semantic data model can be used to serve many purposes, such as:[3]

- Planning of Data Resources

- Building of Shareable Databases

- Evaluation of Vendor Software

- Integration of Existing Databases

The overall goal of semantic data models is to capture more of the meaning of data by integrating relational concepts with more powerful abstraction concepts known from the field of artificial intelligence. The idea is to provide high-level modeling primitives as an integral part of a data model in order to facilitate the representation of real-world situations.[9]

See also

- Abstraction (computer science)

- Data (computing)

- Data dictionary

- Database design

- Data model

- Document modelling

- Information Management

- Informative Modelling

- Three schema approach

- Zachman framework

References

This article incorporates public domain material from websites or documents of the National Institute of Standards and Technology.

- [1] Matthew West and Julian Fowler (1999). Developing High Quality Data Models. The European Process Industries STEP Technical Liaison Executive (EPISTLE).

- [2] Data Integration Glossary, U.S. Department of Transportation, August 2001.

- [3] FIPS Publication 184, release of IDEF1X by the Computer Systems Laboratory of the National Institute of Standards and Technology (NIST). 21 December 1993.

- [4] Whitten, Jeffrey L.; Lonnie D. Bentley, Kevin C. Dittman (2004). Systems Analysis and Design Methods. 6th edition. ISBN 025619906X.

- [5] American National Standards Institute (1975). ANSI/X3/SPARC Study Group on Data Base Management Systems; Interim Report. FDT (Bulletin of ACM SIGMOD) 7:2.

- [6] Paul R. Smith & Richard Sarfaty (1993). Creating a strategic plan for configuration management using Computer Aided Software Engineering (CASE) tools. Paper for 1993 National DOE/Contractors and Facilities CAD/CAE User's Group.

- [7] Len Silverston, W. H. Inmon, Kent Graziano (1997). The Data Model Resource Book. Wiley. ISBN 0-471-15364-8. Reviewed by Van Scott on tdan.com. Accessed 1 Nov 2008.

- [8] Amnon Shabo (2006). Clinical genomics data standards for pharmacogenetics and pharmacogenomics.

- [9] "Semantic data modeling". In: Metaclasses and Their Application. Lecture Notes in Computer Science. Springer Berlin / Heidelberg. Volume 943/1995.

Further reading

- J.H. ter Bekke (1991). Semantic Data Modeling in Relational Environments.

- John Vincent Carlis, Joseph D. Maguire (2001). Mastering Data Modeling: A User-driven Approach.

- Alan Chmura, J. Mark Heumann (2005). Logical Data Modeling: What it is and how to Do it.

- Martin E. Modell (1992). Data Analysis, Data Modeling, and Classification.

- M. Papazoglou, Stefano Spaccapietra, Zahir Tari (2000). Advances in Object-oriented Data Modeling.

- G. Lawrence Sanders (1995). Data Modeling.

- Graeme C. Simsion, Graham C. Witt (2005). Data Modeling Essentials.

- Graeme Simsion (2007). Data Modeling: Theory and Practice.

External links

- Agile/Evolutionary Data Modeling

- Data Modelling Dictionary

- Data modeling articles

- Database Modelling in UML Article from Methods & Tools

- Data Modeling 101

- Semantic data modeling

- System Development, Methodologies and Modeling: notes by Tony Drewry

- Request For Proposal - Information Management Metamodel (IMM) of the Object Management Group

- REVER, the data modeling company: the value of modeling to discover data (relation) in any existing applications