Normal mapping

From Wikipedia, the free encyclopedia

In 3D computer graphics, normal mapping is an application of the technique known as bump mapping. Normal mapping is sometimes referred to as "Dot3 bump mapping". Bump mapping perturbs a model's existing normal (the direction the surface is facing), whereas normal mapping replaces the normal entirely. Like bump mapping, it adds detail to shading without using more polygons. A bump map is usually computed from a single-channel image, interpreted as grayscale. The normals in normal mapping usually come from a multichannel image (x, y and z channels) derived from a more detailed version of the object. The channel values usually represent the x, y and z coordinates of the normal at the point corresponding to that texel.

Normal mapping usually comes in two varieties: object-space and tangent-space normal mapping. They differ in the coordinate system in which the normals are measured and stored.
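
An object-space normal can be used for lighting directly, apart from the model's own rotation. A tangent-space normal must first be rotated by the per-point tangent (T), bitangent (B) and normal (N) basis, often called the TBN matrix. The following is a minimal sketch, assuming all three basis vectors are given in object space. The name `tangent_to_object` is hypothetical.

```python
import math

def tangent_to_object(n_ts, tangent, bitangent, normal):
    """Rotate a tangent-space normal into object space.

    The TBN matrix has the tangent, bitangent and surface normal (all in
    object space) as its columns, so the result is x*T + y*B + z*N.
    """
    x, y, z = n_ts
    v = tuple(x * t + y * b + z * n for t, b, n in zip(tangent, bitangent, normal))
    # Interpolated bases are rarely exactly orthonormal, so renormalize.
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)
```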

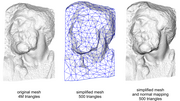

One of the most interesting uses of this technique is to greatly enhance the appearance of a low-polygon (popularly known as "low poly") model by using a normal map generated from a high-polygon model. Krishnamurthy and Levoy introduced the idea of taking geometric detail from a high-polygon model in "Fitting Smooth Surfaces to Dense Polygon Meshes", Proc. SIGGRAPH 1996, where they used it to create displacement maps over NURBS. Its application to the more common triangle meshes came later. In 1998, two papers presented the idea of transferring detail as normal maps from high- to low-polygon meshes:

- "Appearance-Preserving Simplification", by Cohen et al., SIGGRAPH 1998.
- "A general method for recovering attribute values on simplified meshes", by Cignoni et al., IEEE Visualization '98.

The former presented a constrained simplification algorithm that tracks, during simplification, how the lost detail should be mapped onto the simplified mesh. The latter presented a simpler approach that decouples the high- and low-polygon meshes and recreates the lost detail independently of how the low-polygon model was created. This latter approach, with minor variations, is still the one used by most currently available tools.
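
Transferring detail from a high-polygon mesh to a low-polygon mesh is often called "baking". The sketch below is a deliberately simplified nearest-sample variant of that transfer, not the published algorithm of either paper. For each texel it takes the corresponding point on the low-polygon surface, finds the closest sample of the high-polygon mesh, and copies that sample's normal. Representing the high-polygon mesh as a list of point samples with normals is an assumption made for brevity. Many practical tools instead cast rays along the low-polygon normal. The name `bake_normals` is hypothetical.

```python
import math

def bake_normals(texel_points, high_points, high_normals):
    """For each low-poly surface point (one per texel), copy the normal of
    the nearest high-poly sample. Brute force, O(texels * samples)."""
    baked = []
    for p in texel_points:
        nearest = min(range(len(high_points)),
                      key=lambda i: math.dist(p, high_points[i]))
        baked.append(high_normals[nearest])
    return baked
```

Because the lookup only needs positions on both meshes, it does not depend on how the low-polygon model was produced.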


How it works

To calculate the Lambertian (diffuse) lighting of a surface, take the dot product of two unit vectors: the vector from the shading point to the light source and the surface normal. The result, clamped to zero for surfaces facing away from the light, is the intensity of the light at that point. A polygonal model of a sphere, for example, can only approximate the shape of the surface. By texturing a 3-channel bitmap across the model, more detailed normal vector information can be encoded. Each channel in the bitmap corresponds to a spatial dimension (X, Y and Z). For object-space normal maps, these dimensions are relative to a constant coordinate system. For tangent-space normal maps, they are relative to a smoothly varying coordinate system based on the derivatives of position with respect to texture coordinates. This adds much more detail to the surface of a model, especially in conjunction with advanced lighting techniques.
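
Both ideas in this paragraph can be sketched per triangle. The tangent T and bitangent B are the derivatives of position with respect to the texture coordinates u and v. Each triangle edge satisfies e = T·Δu + B·Δv, and solving that 2×2 system gives T and B. The diffuse term is then max(0, n · l). This is a minimal sketch that assumes a non-degenerate UV triangle and no per-vertex smoothing of the basis. The helpers `sub`, `dot`, `normalize`, `triangle_tangent_frame` and `lambert` are hypothetical names.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)

def triangle_tangent_frame(p0, p1, p2, uv0, uv1, uv2):
    """Return unit (T, B) = (dP/du, dP/dv) for one triangle.

    Solves e1 = T*du1 + B*dv1 and e2 = T*du2 + B*dv2 for T and B.
    Assumes the triangle's UVs are not degenerate (nonzero determinant).
    """
    e1, e2 = sub(p1, p0), sub(p2, p0)
    du1, dv1 = uv1[0] - uv0[0], uv1[1] - uv0[1]
    du2, dv2 = uv2[0] - uv0[0], uv2[1] - uv0[1]
    r = 1.0 / (du1 * dv2 - du2 * dv1)
    t = tuple((a * dv2 - b * dv1) * r for a, b in zip(e1, e2))
    bt = tuple((b * du1 - a * du2) * r for a, b in zip(e1, e2))
    return normalize(t), normalize(bt)

def lambert(normal, point, light_pos):
    """Diffuse intensity: unit vector to the light dotted with the unit normal,
    clamped to zero when the surface faces away from the light."""
    l = normalize(sub(light_pos, point))
    return max(0.0, dot(normalize(normal), l))
```

With a normal map, the `normal` passed to `lambert` is the per-texel normal, rotated into the same space as the light for tangent-space maps, rather than the interpolated polygon normal.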

Normal mapping in video games

Interactive normal map rendering was originally possible only on PixelFlow, a parallel rendering machine built at the University of North Carolina at Chapel Hill. It later became possible on high-end SGI workstations using multi-pass rendering and framebuffer operations. It was also possible on low-end PC hardware using tricks with paletted textures. With the advent of shaders in personal computers and game consoles, normal mapping became widely used in proprietary commercial video games starting in late 2003. Open-source games followed in later years. Normal mapping is popular for real-time rendering because it offers a good ratio of visual quality to processing cost compared with other methods of producing similar effects. Much of this efficiency comes from distance-indexed detail scaling, a technique that selectively decreases the detail of a texture's normal map (cf. mipmapping). As a result, more distant surfaces require less complex lighting simulation.
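
Reducing the detail of a normal map for distant surfaces resembles building mipmap levels. The sketch below builds one coarser level with a 2×2 box filter. It assumes the map is a list of rows of unit (x, y, z) tuples with even width and height. The box filter and the name `downsample_normal_map` are illustrative choices, not a specific engine's method. Averaged normals come out shorter than unit length, so the sketch renormalizes them.

```python
import math

def downsample_normal_map(level):
    """Build the next, half-resolution level of a normal map with a 2x2 box filter.

    Assumes even width and height and unit-length input normals.
    """
    h, w = len(level), len(level[0])
    out = []
    for y in range(0, h, 2):
        row = []
        for x in range(0, w, 2):
            block = (level[y][x], level[y][x + 1], level[y + 1][x], level[y + 1][x + 1])
            s = [sum(n[i] for n in block) for i in range(3)]
            length = math.sqrt(sum(c * c for c in s))
            if length == 0.0:
                # Normals cancelled out entirely; fall back to the flat tangent-space normal.
                row.append((0.0, 0.0, 1.0))
            else:
                row.append(tuple(c / length for c in s))
        out.append(row)
    return out
```

Renormalizing discards the information that the normals diverged, which is one reason that filtering normal maps is a known source of shading artifacts at a distance.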

Basic normal mapping can be implemented on any hardware that supports paletted textures. The Sega Dreamcast was the first game console with specialized normal mapping hardware. However, Microsoft's Xbox was the first console to use the effect widely in retail games. Of the sixth-generation consoles, only the PlayStation 2's GPU lacks built-in normal mapping support. Games for the Xbox 360 and the PlayStation 3 rely heavily on normal mapping and are beginning to implement parallax mapping.
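
One way to do normal mapping with paletted textures is to quantize every normal to a small palette. The texture then stores only palette indices. When the light moves, only the palette is rewritten with one diffuse intensity per palette normal, while the index texture stays the same. The following software model sketches that idea. It assumes a directional light and a hand-picked palette, and it makes no actual graphics API calls. The names `quantize` and `lit_palette` are hypothetical.

```python
def quantize(normal, palette):
    """Index of the palette normal closest in direction (largest dot product)."""
    return max(range(len(palette)),
               key=lambda i: sum(a * b for a, b in zip(normal, palette[i])))

def lit_palette(palette, light_dir):
    """Recomputed whenever the light changes: one clamped N.L value per entry.
    light_dir is assumed to be a unit vector pointing toward the light."""
    return [max(0.0, sum(a * b for a, b in zip(n, light_dir))) for n in palette]

# Usage: build the index texture once, then relight by swapping palettes.
palette = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.6, 0.0, 0.8)]
normal_map = [[(0.0, 0.0, 1.0), (0.59, 0.0, 0.81)]]
index_texture = [[quantize(n, palette) for n in row] for row in normal_map]
intensities = lit_palette(palette, (0.0, 0.0, 1.0))
shaded = [[intensities[i] for i in row] for row in index_texture]
```

The cost of relighting then depends on the palette size rather than on the texture size, which is what made the trick practical on older hardware.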


External links

- Introduction to Normal Mapping

- Blender Normal Mapping

- Normal Mapping with paletted textures using old OpenGL extensions.

- Normal Map Photography: creating normal maps manually by layering digital photographs

- Normal Mapping Explained

- XNormal: a free and very complete normal mapper by Santiago Orgaz

Bibliography

- Fitting Smooth Surfaces to Dense Polygon Meshes, Krishnamurthy and Levoy, SIGGRAPH 1996

- Appearance-Preserving Simplification, Cohen et al., SIGGRAPH 1998

- A General Method for Recovering Attribute Values on Simplified Meshes, Cignoni et al., IEEE Visualization 1998

- Realistic, Hardware-accelerated Shading and Lighting, Heidrich and Seidel, SIGGRAPH 1999