Moving average

From Wikipedia, the free encyclopedia

In statistics, a moving average, also called a rolling average and sometimes a running average, is used to analyze a set of data points by creating a series of averages of different subsets of the full data set. A moving average is therefore not a single number but a set of numbers, each of which is the average of the corresponding subset of a larger set of data points. For example, given a data set with 100 data points, the first value of the moving average might be the arithmetic mean (one simple type of average) of data points 1 through 25. The next value would be the arithmetic mean of data points 2 through 26, and so forth, until the final value, which would be the arithmetic mean of data points 76 through 100.

The size of the subset being averaged is often constant, as in the previous example, but need not be. In particular, a cumulative average is a type of moving average where each value is the average of all previous data points in the full data set, so the size of the subset being averaged grows by one as each new value of the moving average is calculated. A moving average could also use a weighted average instead of a simple average, perhaps in order to place more emphasis on recent data points than on older ones.

A moving average can be applied to any data set, but is perhaps most commonly used with time series data to smooth out short-term fluctuations and highlight longer-term trends or cycles. The threshold between short-term and long-term depends on the application, and the parameters of the moving average will be set accordingly. For example, it is often used in technical analysis of financial data, like stock prices, returns or trading volumes. It is also used in economics to examine gross domestic product, employment or other macroeconomic time series. Mathematically, a moving average is a type of convolution and so it is also similar to the low-pass filter used in signal processing. When used with non-time series data, a moving average simply acts as a generic smoothing operation without any specific connection to time, although typically some kind of ordering is implied.


Simple moving average

Prior moving average

A simple moving average (SMA) is the unweighted mean of the previous n data points. For example, a 10-day simple moving average of closing price is the mean of the previous 10 days' closing prices. If those prices are $p_M, p_{M-1}, \dots, p_{M-9}$ then the formula is

$$SMA = \frac{p_M + p_{M-1} + \cdots + p_{M-9}}{10}$$

When calculating successive values, a new value comes into the sum and an old value drops out, meaning a full summation each time is unnecessary:

$$SMA_{\text{today}} = SMA_{\text{yesterday}} - \frac{p_{M-n}}{n} + \frac{p_M}{n}$$
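The running-sum update described above can be sketched in Python as follows. This is a minimal illustration rather than a reference implementation; the function name `simple_moving_average` and the sample prices are hypothetical.

```python
from collections import deque

def simple_moving_average(values, n):
    """Return the n-point prior SMA for every position with a full window."""
    if n <= 0:
        raise ValueError("n must be positive")
    window = deque()
    running_sum = 0.0
    averages = []
    for value in values:
        window.append(value)
        running_sum += value                 # the new value comes into the sum
        if len(window) > n:
            running_sum -= window.popleft()  # the oldest value drops out
        if len(window) == n:
            averages.append(running_sum / n)
    return averages

prices = [1, 2, 3, 4, 5, 6]                  # hypothetical closing prices
print(simple_moving_average(prices, 3))      # [2.0, 3.0, 4.0, 5.0]
```

Each new average costs one addition and one subtraction regardless of n. With floating-point data, very long streams may accumulate rounding error in the running sum, so an occasional full recomputation can be worthwhile.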

In technical analysis there are various popular values for n, like 10 days, 40 days, or 200 days. The period selected depends on the kind of movement one is concentrating on, such as short, intermediate, or long term. In any case moving average levels are interpreted as support in a rising market, or resistance in a falling market.

In all cases a moving average lags behind the latest data point, simply from the nature of its smoothing. An SMA can lag to an undesirable extent, and can be disproportionately influenced by old data points dropping out of the average. This is addressed by giving extra weight to more recent data points, as in the weighted and exponential moving averages.

One characteristic of the SMA is that if the data have a periodic fluctuation, then applying an SMA of that period will eliminate that variation (the average always containing one complete cycle). But a perfectly regular cycle is rarely encountered in economics or finance.[1]
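The cancellation of a periodic fluctuation can be checked numerically. The sketch below uses made-up data (a constant level of 10 plus a fluctuation of period 4) and a hypothetical helper `sma`; it only illustrates the property and says nothing about real economic series.

```python
def sma(values, n):
    """n-point simple moving average over each full window."""
    return [sum(values[i - n + 1:i + 1]) / n for i in range(n - 1, len(values))]

# Hypothetical data: constant level 10 plus a fluctuation with period 4.
cycle = [3, -1, -3, 1]                    # one complete cycle sums to zero
data = [10 + cycle[i % 4] for i in range(12)]

same_period = sma(data, 4)    # window length equals the period
other_period = sma(data, 3)   # window length does not match the period

print(same_period)    # every value is 10.0: the fluctuation is eliminated
print(other_period)   # values still vary from window to window
```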

Central moving average

For a number of applications it is advantageous to avoid the shifting induced by using only 'past' data. Hence a central moving average can be computed, using both 'past' and 'future' data. The 'future' data in this case are not predictions, but merely data obtained after the time at which the average is to be computed.

Weighted and exponential moving averages[citation needed] (see below) can also be computed centrally.
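A central simple moving average might be sketched as below, assuming an odd window of `2 * half_width + 1` points centred on each value. The function name and the parameter `half_width` are illustrative choices, not terms from the sources cited here.

```python
def central_moving_average(values, half_width):
    """Average each point with half_width neighbours on either side.

    Only positions with a complete window are returned, so the result is
    shorter than the input by 2 * half_width.
    """
    if half_width < 0:
        raise ValueError("half_width must be non-negative")
    n = 2 * half_width + 1
    result = []
    for i in range(half_width, len(values) - half_width):
        window = values[i - half_width:i + half_width + 1]  # 'past' and 'future' data
        result.append(sum(window) / n)
    return result

print(central_moving_average([1, 2, 6, 4, 2], 1))  # [3.0, 4.0, 4.0]
```

Because the window is centred, the output value at a given position is aligned with the data at that position instead of lagging behind it.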

Cumulative moving average

The cumulative moving average is also frequently called a running average or a long running average, although the term running average is also used as a synonym for a moving average. This article uses the term cumulative moving average, or simply cumulative average, since this term is more descriptive and unambiguous.

In some data acquisition systems, the data arrive in an ordered stream and the statistician would like the average of all of the data up to the current data point. For example, an investor may want the average price of all of the transactions for a particular stock up to the current time. As each new transaction occurs, the average price at the time of the transaction can be calculated for all of the transactions up to that point. This is the cumulative average, which is typically an unweighted average of the sequence of i values $x_1, \dots, x_i$ up to the current time:

$$CA_i = \frac{x_1 + \cdots + x_i}{i}$$

The brute force method to calculate this would be to store all of the data, then calculate the sum and divide by the number of data points every time a new data point arrived. However, it is possible to simply update the cumulative average as a new value $x_{i+1}$ becomes available, using the formula:

$$CA_{i+1} = CA_i + \frac{x_{i+1} - CA_i}{i + 1}$$

where $CA_0$ can be taken to be equal to 0.

Thus the current cumulative average for a new data point is equal to the previous cumulative average plus the difference between the latest data point and the previous average divided by the number of points received so far. When all of the data points arrive (i = N), the cumulative average will equal the final average.
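The update rule described in words above can be sketched as a streaming computation that stores only the previous average and the count of points seen. The function name `cumulative_averages` and the sample values are hypothetical.

```python
def cumulative_averages(stream):
    """Yield the cumulative average after each new data point."""
    ca = 0.0                         # CA_0 is taken to be 0
    for i, x in enumerate(stream):   # i = number of points seen before x
        ca = ca + (x - ca) / (i + 1)
        yield ca

print(list(cumulative_averages([2, 4, 9])))  # [2.0, 3.0, 5.0]
```

Writing it as a generator reflects the data-stream setting: nothing beyond the current average and the running count has to be kept in memory.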

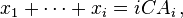

The derivation of the cumulative average formula is straightforward. Using

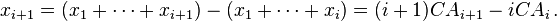

and similarly for i + 1, it is seen that

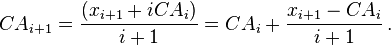

Solving this equation for CAi+1 results in:

Weighted moving average

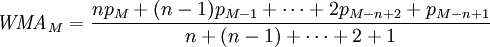

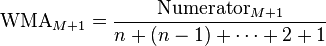

A weighted average is any average that has multiplying factors to give different weights to different data points. Mathematically, the moving average is the convolution of the data points with a moving average function; in technical analysis, a weighted moving average (WMA) has the specific meaning of weights which decrease arithmetically. In an n-day WMA the latest day has weight n, the second latest n − 1, and so on, down to one:

$$WMA_M = \frac{n p_M + (n-1) p_{M-1} + \cdots + 2 p_{M-n+2} + p_{M-n+1}}{n + (n-1) + \cdots + 2 + 1}$$

The denominator is a triangle number, and can be easily computed as

$$\frac{n(n+1)}{2}$$

When calculating the WMA across successive values, it can be noted that the difference between the numerators of $WMA_{M+1}$ and $WMA_M$ is $n p_{M+1} - p_M - \cdots - p_{M-n+1}$. If we denote the sum $p_M + \cdots + p_{M-n+1}$ by $Total_M$, then

$$Total_{M+1} = Total_M + p_{M+1} - p_{M-n+1}$$

$$Numerator_{M+1} = Numerator_M + n p_{M+1} - Total_M$$

$$WMA_{M+1} = \frac{Numerator_{M+1}}{n + (n-1) + \cdots + 2 + 1}$$

The graph at the right shows how the weights decrease, from highest weight for the most recent data points, down to zero. It can be compared to the weights in the exponential moving average which follows.
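The incremental WMA calculation can be sketched as below, assuming weights n, n − 1, ..., 1 from the latest to the oldest point in each window. The function name and the sample prices are illustrative only.

```python
def weighted_moving_average(prices, n):
    """n-point WMA of each full window, updated incrementally."""
    if n <= 0 or len(prices) < n:
        raise ValueError("need at least n prices and n > 0")
    denominator = n * (n + 1) // 2           # triangle number n + (n-1) + ... + 1
    # First window computed directly: oldest point weight 1, latest weight n.
    total = sum(prices[:n])
    numerator = sum(w * p for w, p in zip(range(1, n + 1), prices[:n]))
    result = [numerator / denominator]
    for m in range(n, len(prices)):
        numerator += n * prices[m] - total   # uses the total of the previous window
        total += prices[m] - prices[m - n]   # slide the plain sum forward
        result.append(numerator / denominator)
    return result

print(weighted_moving_average([1, 2, 3, 4, 5], 3))
```

The numerator must be updated before the total, because the update uses the plain sum of the window that is being left behind.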

Exponential moving average

An exponential moving average (EMA), sometimes also called an exponentially weighted moving average (EWMA), applies weighting factors which decrease exponentially with the age of each data point, giving much more importance to recent observations while still not discarding older observations entirely. The graph at right shows an example of the weight decrease.

The degree of weighting decrease is expressed as a constant smoothing factor α, a number between 0 and 1. α may be expressed as a percentage, so a smoothing factor of 10% is equivalent to α = 0.1. Alternatively, α may be expressed in terms of N time periods, where $\alpha = 2/(N+1)$. For example, N = 19 is equivalent to α = 0.1. The half-life of the weights (the interval over which the weights decrease by a factor of two) is approximately $N/2.8854$ (within 1% if N > 5).
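The relationship between N, α and the half-life can be checked numerically. The sketch below assumes α = 2/(N + 1) and computes the exact half-life as the k for which $(1-\alpha)^k = 1/2$; the function names are hypothetical.

```python
import math

def alpha_from_periods(n):
    """Smoothing factor for an N-period EMA, assuming alpha = 2 / (N + 1)."""
    return 2.0 / (n + 1)

def half_life(alpha):
    """Number of periods k over which the weight (1 - alpha)**k halves."""
    return math.log(0.5) / math.log(1.0 - alpha)

for n in (6, 19, 50):
    a = alpha_from_periods(n)
    # Columns: N, alpha, exact half-life, approximation N / 2.8854
    print(n, a, half_life(a), n / 2.8854)
```

The constant 2.8854 is 2/ln 2, which is why the approximation improves as N grows and ln(1 − α) gets closer to −α.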

The observation at a time period t is designated $Y_t$, and the value of the EMA at any time period t is designated $S_t$. $S_1$ is undefined. $S_2$ may be initialized in a number of different ways, most commonly by setting $S_2$ to $Y_1$, though other techniques exist, such as setting $S_2$ to an average of the first 4 or 5 observations. The prominence of the $S_2$ initialization's effect on the resultant moving average depends on α; smaller α values make the choice of $S_2$ relatively more important than larger α values, since a higher α discounts older observations faster.

The formula for calculating the EMA at time periods t > 2 is

$$S_t = \alpha Y_{t-1} + (1 - \alpha) S_{t-1}$$

This formulation is according to Hunter (1986)[2]. Expanding the recursion, the observation $Y_{t-(x+1)}$ enters $S_t$ with weight $\alpha (1 - \alpha)^x$. An alternate approach by Roberts (1959) uses $Y_t$ in lieu of $Y_{t-1}$[3]:

$$S_{t,\text{alternate}} = \alpha Y_t + (1 - \alpha) S_{t-1}$$
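A minimal sketch of the recursion follows, seeded with the first observation. The seed for the Roberts form ($S_1 = Y_1$) is an assumption made here for illustration, and the function name is hypothetical. With identical seeds the two formulations produce the same numbers and differ only in the time index attached to them.

```python
def exponential_smoothing(observations, alpha):
    """Return the smoothed sequence started from the first observation."""
    if not 0 < alpha < 1:
        raise ValueError("alpha must lie strictly between 0 and 1")
    s = observations[0]
    smoothed = [s]
    for y in observations[1:]:
        s = alpha * y + (1 - alpha) * s   # step part of the way towards y
        smoothed.append(s)
    return smoothed

y = [10.0, 12.0, 11.0, 15.0]              # Y_1 .. Y_4 (hypothetical data)
values = exponential_smoothing(y, 0.5)
print(values)                             # [10.0, 11.0, 11.0, 13.0]

# Hunter indexing:  values[k] is S_{k+2}, built from Y_1 .. Y_{k+1}.
# Roberts indexing: values[k] is S_{k+1}, assuming the seed S_1 = Y_1.
```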

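This formula can also be expressed in technical analysis terms as follows, showing how the EMA steps towards the latest data point, but only by a proportion of the difference (each time):[4]

$$EMA_{\text{today}} = EMA_{\text{yesterday}} + \alpha \times (price_{\text{today}} - EMA_{\text{yesterday}})$$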

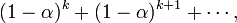

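Expanding out $EMA_{\text{yesterday}}$ each time results in the following power series, showing how the weighting factor on each data point $p_1$, $p_2$, etc., decreases exponentially ($p_1$ being the most recent data point):

$$EMA = \frac{p_1 + (1-\alpha) p_2 + (1-\alpha)^2 p_3 + (1-\alpha)^3 p_4 + \cdots}{1 + (1-\alpha) + (1-\alpha)^2 + (1-\alpha)^3 + \cdots}$$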

In theory this is an infinite sum, but because 1 − α is less than 1, the terms become smaller and smaller, and can be ignored once small enough. The denominator approaches 1/α, and that value can be used instead of adding up the powers, provided one is using enough terms that the omitted portion is negligible.

The N periods in an N-day EMA only specify the α factor. N is not a stopping point for the calculation in the way it is in an SMA or WMA. The N most recent data points in an EMA represent about 86% of the total weight in the calculation[citation needed].
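The figure of about 86% can be checked numerically, assuming α = 2/(N + 1) and normalized weights $\alpha (1-\alpha)^j$ for j = 0, 1, 2, ..., so that the k most recent points carry a total weight of $1 - (1-\alpha)^k$. The function name below is hypothetical.

```python
def weight_of_latest_terms(n_periods, k):
    """Share of total EMA weight carried by the k most recent data points."""
    alpha = 2.0 / (n_periods + 1)
    return 1.0 - (1.0 - alpha) ** k

for n in (10, 20, 50, 200):
    print(n, weight_of_latest_terms(n, n))
```

As N grows, $(1 - 2/(N+1))^N$ tends to $e^{-2}$, so the share tends to $1 - e^{-2} \approx 0.8647$.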

The power formula above gives a starting value for a particular day, after which the successive days formula shown first can be applied.

The question of how far back to go for an initial value depends, in the worst case, on the data. If there are huge price values p in old data then they will have an effect on the total even if their weighting is very small. If one assumes prices do not vary too wildly then just the weighting can be considered. The weight omitted by stopping after k terms is

$$\alpha \left[ (1-\alpha)^k + (1-\alpha)^{k+1} + (1-\alpha)^{k+2} + \cdots \right]$$

which is

$$\alpha (1-\alpha)^k \left[ 1 + (1-\alpha) + (1-\alpha)^2 + \cdots \right]$$

i.e. a fraction

$$(1-\alpha)^k$$

out of the total weight.

For example, to have 99.9% of the weight,

$$k = \frac{\ln(0.001)}{\ln(1-\alpha)}$$

terms should be used. Since $\ln(1-\alpha)$ approaches $-2/(N+1)$ as N increases, this simplifies to approximately

$$k = 3.45(N + 1)$$

for this example (99.9% weight).
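The exact term count and the approximation can be compared with a short calculation, again assuming α = 2/(N + 1). The function name `terms_needed` is hypothetical.

```python
import math

def terms_needed(n_periods, weight=0.999):
    """Number of terms k such that (1 - alpha)**k equals the omitted share."""
    alpha = 2.0 / (n_periods + 1)
    return math.log(1.0 - weight) / math.log(1.0 - alpha)

for n in (19, 199):
    # Columns: N, exact k, approximation 3.45 * (N + 1)
    print(n, terms_needed(n), 3.45 * (n + 1))
```

For small N the approximation overestimates k by a few percent, because ln(1 − α) is noticeably larger in magnitude than α when α is not small.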

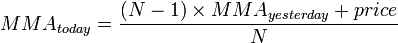

Modified moving average

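This average is called a modified moving average (MMA), running moving average (RMA), or smoothed moving average.

Definition

$$MMA_{\text{today}} = \frac{(N - 1) \times MMA_{\text{yesterday}} + price_{\text{today}}}{N}$$

In short, this is an exponential moving average with $\alpha = 1/N$.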
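The equivalence with an exponential moving average can be illustrated as below. The sketch assumes the commonly given definition MMA_today = ((N − 1) × MMA_yesterday + price_today) / N and seeds the average with the first price; both the seed and the function name are assumptions made for illustration.

```python
def modified_moving_average(prices, n):
    """MMA seeded with the first price (the seed is an assumption here)."""
    mma = prices[0]
    result = [mma]
    for price in prices[1:]:
        mma = ((n - 1) * mma + price) / n
        result.append(mma)
    return result

prices = [10.0, 14.0, 12.0]                  # hypothetical prices
print(modified_moving_average(prices, 4))    # [10.0, 11.0, 11.25]

# The same numbers come from the EMA step with alpha = 1 / n:
alpha = 1 / 4
ema = prices[0]
for price in prices[1:]:
    ema = ema + alpha * (price - ema)
print(ema)                                   # 11.25
```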

Application of exponential moving average to OS performance metrics

Some computer performance metrics use a form of exponential moving average, for example, the average process queue length, or the average CPU utilization.

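Here α is defined as a function of the time between two readings. An example of a coefficient giving bigger weight to the current reading, and smaller weight to the older readings, is

$$\alpha = 1 - e^{-\frac{t_n - t_{n-1}}{W \times 60}}$$

where the time of reading $t_n$ is expressed in seconds, and W is the period of time in minutes over which the reading is said to be averaged (the mean lifetime of each reading in the average). Given the above definition of α, the moving average can be expressed as

$$S_{\alpha,t_n} = \alpha Y_{t_n} + (1 - \alpha) S_{\alpha,t_{n-1}} = Y_{t_n} + e^{-\frac{t_n - t_{n-1}}{W \times 60}} \left( S_{\alpha,t_{n-1}} - Y_{t_n} \right)$$

For example, a 15-minute average L of a process queue length Q, measured every 5 seconds (time difference is 5 seconds), is computed as

$$L_n = Q_n + e^{-\frac{5}{15 \times 60}} \left( L_{n-1} - Q_n \right) = Q_n + e^{-\frac{1}{180}} \left( L_{n-1} - Q_n \right)$$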
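A time-dependent smoothing factor of this kind might be sketched as follows. The exponential-decay form α = 1 − exp(−Δt / (60 W)) is assumed here, with Δt in seconds and W in minutes; the function name and the simulated queue lengths are hypothetical.

```python
import math

def update_average(previous, reading, dt_seconds, window_minutes):
    """One EMA step whose alpha depends on the time since the last reading."""
    alpha = 1.0 - math.exp(-dt_seconds / (window_minutes * 60.0))
    return alpha * reading + (1.0 - alpha) * previous

# Hypothetical 15-minute average of a queue length sampled every 5 seconds.
average = 0.0
for queue_length in [1, 1, 2, 2, 1]:
    average = update_average(average, queue_length, dt_seconds=5, window_minutes=15)
print(average)
```

Because α is recomputed from the elapsed time, irregularly spaced readings can be handled by passing the actual interval as `dt_seconds`.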

Other weightings

Other weighting systems are used occasionally – for example, in share trading a volume weighting will weight each time period in proportion to its trading volume.
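A volume-weighted moving average over a fixed window of n periods could be sketched as below. The function name, the window convention and the sample data are assumptions for illustration and are not taken from any particular trading platform.

```python
def volume_weighted_moving_average(prices, volumes, n):
    """Average price of each n-period window, weighted by trading volume."""
    if len(prices) != len(volumes):
        raise ValueError("prices and volumes must have the same length")
    result = []
    for end in range(n, len(prices) + 1):
        window_prices = prices[end - n:end]
        window_volumes = volumes[end - n:end]
        total_volume = sum(window_volumes)
        if total_volume == 0:
            raise ValueError("window has zero total volume")
        weighted_sum = sum(p * v for p, v in zip(window_prices, window_volumes))
        result.append(weighted_sum / total_volume)
    return result

print(volume_weighted_moving_average([10, 20, 30], [1, 1, 2], 2))
```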

A further weighting, used by actuaries, is Spencer's 15-Point Moving Average[5] (a central moving average).


Notes and references

- [1] Ya-lun Chou, Statistical Analysis, Holt International, 1975, ISBN 0030894220, section 17.9.

- [2] NIST/SEMATECH e-Handbook of Statistical Methods: Single Exponential Smoothing, National Institute of Standards and Technology.

- [3] NIST/SEMATECH e-Handbook of Statistical Methods: EWMA Control Charts, National Institute of Standards and Technology.

- [4] Moving Averages page, StockCharts.com.

- [5] Spencer's 15-Point Moving Average, Wolfram MathWorld.

External links

- EWMA in determining network traffic and ethernet

- Discussion of span and shift for prior MA

- Discussion of prior MA and central MA

- Calculation of Moving Average using Excel at spreadsheetml.com
