Euclidean algorithm

From Wikipedia, the free encyclopedia

The Euclidean algorithm (also called Euclid's algorithm) is an efficient algorithm for computing the greatest common divisor (GCD) of two numbers. If g represents the GCD(a, b), then g is the largest number that divides both a and b without leaving a remainder. In other words, a and b are both multiples of g, and can be written as a = mg and b = ng, where m and n have no divisor in common other than 1. The Euclidean algorithm is one of the oldest known algorithms and has many theoretical and practical applications, such as modern integer factorization algorithms and the RSA algorithm, a public-key encryption method widely used in electronic commerce.

The Euclidean algorithm is a series of steps in which the GCD(a, b) is related to the GCD of two smaller numbers. In particular, it rests on the fact that the GCD of two numbers remains the same when one is subtracted from the other. If q multiples of b are subtracted from a, the remainder r is divisible by g, because r = mg − qng = (m − qn)g. Although this is true for any integer q, the Euclidean algorithm chooses q to make the remainder r as small as possible. It follows that GCD(a, b) = GCD(b, r), where r is smaller than b.

For illustration, let a = 1989 and b = 867. The Euclidean algorithm begins by subtracting multiples of b from a until the nonnegative remainder r0 is less than b. In this case, q0=2 and r0 = a-q0b = 255, which is less than b.

- GCD(1989, 867) = GCD(867, 255)

In the next step, b takes the place of a, and r0 takes the place of b. The algorithm subtracts multiples of r0 from b until the nonnegative remainder r1 is less than r0. In this case, q1=3 and r1 = b-q1r0 = 102, which is less than r0.

- GCD(1989, 867) = GCD(867, 255) = GCD(255, 102)

The process continues until the remainder is zero, which must happen in a finite number of steps for natural numbers and many other types of numbers. In this case, the final nonzero remainder is g=51, the greatest common divisor.

- GCD(1989, 867) = GCD(867, 255) = GCD(255, 102) = GCD(102, 51) = GCD(51, 0)

The Euclidean algorithm is considered efficient, because the number of steps required increases logarithmically with the size of the two integers. It is never more than five times the number of digits (base 10) in the smaller integer.

The Euclidean algorithm is one of the oldest known algorithms. (An algorithm is a set of rules for calculating a quantity, together with a proof that the rules always produce the correct result.) The ancient Greek geometer Euclid described it in Books 7 and 10 of his Elements (c. 300 BC), applying it to both natural numbers and real numbers. The algorithm may have been developed centuries before Euclid, possibly by mathematicians in the school of Pythagoras. The algorithm was re-discovered in India and China to aid in solving Diophantine equations (equations that require integer solutions), which occur in many areas of science and mathematics. The algorithm was generalized to other types of numbers, such as algebraic integers, in the 19th century.

The Euclidean algorithm has many theoretical and practical applications. It is a fundamental tool in modern number theory, for example, in proving the unique factorization of a set of numbers or in deriving theorems such as Lagrange's four-square theorem. It can be used to solve various Diophantine equations, such as finding numbers that satisfy multiple congruences (Chinese remainder theorem) or multiplicative inverses in a finite field. By reversing the algorithm, g can be expressed as a linear sum of a and b, that is, g = sa + tb, where s and t are integers (Bézout's identity). The Euclidean algorithm is also used to construct continued fractions, an accurate approximation method, and in the Sturm chain method for finding the real roots of a polynomial. Finally, it can be used to classify commutative rings in modern abstract algebra; a set of numbers is said to form a Euclidean domain if an analog of Euclid's algorithm can be defined on it.

Background

Greatest common divisor

The Euclidean algorithm calculates the greatest common divisor (GCD) of two natural numbers a and b. The greatest common divisor g is the largest natural number that divides both a and b without leaving a remainder. The greatest common divisor is often written as GCD(a, b) or more simply, (a, b).[1]

If GCD(a, b) = 1, then a and b are said to be coprime, or relatively prime.[2] This property is independent of whether a and b are themselves prime numbers.[3] For example, neither 6 = 2×3 nor 35 = 5×7 is a prime number, since each has two prime factors. Nevertheless, 6 and 35 are coprime; no natural number other than 1 divides both 6 and 35, since they have no prime factors in common.

Since a and b are both multiples of g, they can be written a = mg and b = ng, and there is no larger number G > g for which this is true. The natural numbers m and n must be coprime, since any common factor q could be factored out of m and n to make g greater. The GCD of any multiple q of the original numbers is q times the original GCD[4]

- GCD(qa, qb) = q GCD(a, b)

More generally, any other number c that divides both a and b must also divide g. Indeed, this property can be taken as the definition of the greatest common divisor,[5] since it implies that g is greater than any other common divisor.

The GCD can be visualized as follows. Imagine a rectangular area a by b covered exactly by square d×d tiles, where d is the side-length of a square tile. Since the area is covered exactly (with no overhang), the number d must divide both a and b leaving no remainder. The greatest common divisor g equals the largest value of d for which this is possible. For illustration, a 24×60 rectangular area can be covered exactly by several types of square tiles: 1×1 tiles, 2×2 tiles, 3×3 tiles, 4×4 tiles, 6×6 tiles or 12×12 tiles. Thus, 12 is the greatest common divisor of 24 and 60.

The GCD of two numbers a and b is the product of the shared prime factors of the two numbers.[6] For example, 1029 can be factored into 3×7×7×7 and 1071 can be factored into 3×3×7×17; hence, the GCD of 1029 and 1071 is 3×7, the product of the prime factors they have in common. If two numbers have no shared prime factors, their greatest common divisor is 1; they are coprime. A key advantage of the Euclidean algorithm is that it can find the GCD efficiently without having to compute the prime factors;[7][8] integer factorization is a difficult problem for large numbers.

The GCD is related to the least common multiple (LCM) by the equation[9]

- GCD(a,b) × LCM(a,b) = a×b

Euclid's algorithm provides an efficient method for computing the LCM, by dividing the product a×b by their GCD. Alternatively, the GCD of a and b may be computed by dividing their product a×b by their LCM.

The GCD and LCM have several common uses, including reducing fractions and finding the lowest common denominator of fractions. For illustration, the fraction 74/111 can be reduced to 2/3 by dividing the numerator and denominator by their GCD, 37. Similarly, the sum of 1/74 + 5/111 can be calculated by converting both terms to a common denominator, 3/222 + 10/222 = 13/222; here, 222 is the LCM of 74 and 111.
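The two uses just described can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the helper names `lcm` and `reduce_fraction` are chosen here for the example, the inputs are assumed to be positive integers, and the standard-library `math.gcd` stands in for Euclid's algorithm.

```python
from math import gcd

def lcm(a: int, b: int) -> int:
    """Least common multiple, from GCD(a, b) x LCM(a, b) = a x b (a, b > 0 assumed)."""
    return a // gcd(a, b) * b

def reduce_fraction(num: int, den: int) -> tuple[int, int]:
    """Divide numerator and denominator by their GCD."""
    g = gcd(num, den)
    return num // g, den // g

print(reduce_fraction(74, 111))  # (2, 3)
print(lcm(74, 111))              # 222
```

Dividing by the GCD before multiplying keeps the intermediate value no larger than the final LCM.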

Division algorithm

The Euclidean algorithm requires that, for any two numbers i and j, it is always possible to find a quotient q and remainder r such that

- i = qj + r

where the magnitude of r is strictly less than that of j. The division algorithm ensures that such a quotient and remainder always exist, a necessary condition for the Euclidean algorithm. The division algorithm for natural numbers also states that q and r are unique, but that is not needed for the Euclidean algorithm.

Description

The Euclidean algorithm consists of a series of steps. Each step k begins with two nonnegative integers i and j, and the goal is to find two new nonnegative integers qk and rk such that

- i = qk j + rk

where rk<j. The two derived numbers qk and rk are called the quotient and the remainder, respectively, of step k.

In the initial step (k=0), the numbers i and j are taken to be the numbers a and b for which the GCD is sought, where one may assume that a>b. In the second step, (k=1), i and j are taken to be b and the remainder r0 of the initial step. In the next and subsequent steps, i and j are taken to be the remainders from the two previous steps, e.g., r0 and r1 for the next step. Thus, the algorithm can be written as a sequence of equations

- a = q0 b + r0

- b = q1 r0 + r1

- r0 = q2 r1 + r2

- r1 = q3 r2 + r3

- ...

- rk−2 = qk rk−1 + rk

If a is not larger than b as assumed, then the first step of the algorithm swaps the numbers. For example, if a<b, the initial quotient q0 equals zero, and the remainder r0 is a. The next step divides the smaller initial number into the larger initial number, exactly as above.

The sequence of equations ends once a remainder rN is zero. The sequence must end in a finite number of steps N, because every remainder is nonnegative and strictly smaller than its predecessor (rk < rk−1); there are a finite number of nonnegative integers between any remainder and zero. The final nonzero remainder rN−1 is the greatest common divisor of a and b, as shown by the following two-step argument.

In the first step, rN−1 divides both a and b. To demonstrate this, rN−1 divides its predecessor rN−2

- rN−2 = qN rN−1

since the final remainder rN is zero, by definition. It follows that rN−1 divides its next predecessor rN−3

- rN−3 = qN−1 rN−2 + rN−1

because it divides both terms on the right-hand side of the equation. Iterating the same argument, rN−1 divides all the preceding remainders, including a and b. None of the preceding remainders rk divide a and b, since they leave a remainder. Since rN−1 is a common divisor of a and b, it is smaller than or equal to the greatest common divisor: rN−1 ≤ g.

In the second step, any natural number c that divides both a and b (in other words, any common divisor of a and b) divides the remainders rk. By definition, a and b can be written as multiples of c: a = mc and b = nc, where m and n are natural numbers. Therefore, c divides the initial remainder r0, since r0 = a − q0b = mc − q0nc = (m − q0n)c. An analogous argument shows that c also divides the higher remainders r1, r2, etc. and, in particular, the final nonzero remainder rN−1. Therefore, the greatest common divisor g must divide rN−1, which implies that g ≤ rN−1. Since the first part of the argument showed the reverse, that rN−1 ≤ g, it follows that g = rN−1. Thus, g is the greatest common divisor of all the succeeding pairs[10]

- g = GCD(a, b) = GCD(b, r0) = GCD(r0, r1) = ... = GCD(rN−3, rN−2) = GCD(rN−2, rN−1) = rN−1

This chain of equations is the basis for a recursive implementation of Euclid's algorithm.[11]

Visualization

The Euclidean algorithm can be visualized in terms of the rectangle-tiling analogy given above for the greatest common divisor. Assume that we wish to cover an a-by-b rectangle with square tiles exactly, where a is the larger of the two numbers. We first attempt to tile the rectangle using b-by-b square tiles; however, this leaves an r0-by-b residual rectangle untiled, where r0<b. We then attempt to tile the residual rectangle with r0-by-r0 square tiles. This leaves a second residual rectangle r1-by-r0, which we attempt to tile using r1-by-r1 square tiles, and so on. The sequence ends when there is no residual rectangle, i.e., when the square tiles cover the previous residual rectangle exactly.

Calculating the quotients and remainders

See also: Modulo operation and Division algorithm

The basic task in the Euclidean algorithm is to compute the quotient and remainder

- i = qk j + rk

for a given pair of nonnegative integers, i and j. Euclid solves this problem by repeated subtraction; that is, he subtracts j from i iteratively until the remainder rk is smaller than j. This approach is guaranteed to work in advanced number systems such as commutative rings, where the only defined operations are addition, subtraction and multiplication.

Modern computers have more efficient methods for computing the quotient and remainder of two integers. The usual approach is to use integer division and the modulo operation to calculate the quotient and remainder, respectively. The modulo operation gives the remainder after dividing two numbers; thus,

- rk ≡ i mod j

Computational efficiency can also be gained by defining the algorithm recursively, as described below.

Worked example

The Euclidean algorithm can be used to find the greatest common divisor of the natural numbers 1071 and 1029, which is 21. To begin, multiples of 1029 are subtracted from 1071 until the remainder is less than 1029. Only one such multiple can be subtracted, leaving a remainder of 42

- 1071 = 1×1029 + 42

Then multiples of 42 are subtracted from 1029 until the remainder is less than 42. Twenty-four multiples can be subtracted, leaving a remainder of 21

- 1029 = 24×42 + 21

Then multiples of 21 are subtracted from 42 until the remainder is less than 21. Two multiples can be subtracted, leaving no remainder

- 42 = 2×21 + 0

Since the last remainder is zero, the algorithm ends and 21 is the greatest common divisor of 1071 and 1029.

To relate this example to the general description above, the steps can be written as

| Step | Equation | Quotient and remainder |
|---|---|---|
| 0 | 1071 = q0 1029 + r0 | q0 = 1 and r0 = 42 |
| 1 | 1029 = q1 r0 + r1 | q1 = 24 and r1 = 21 |
| 2 | r0 = q2 r1 + r2 | q2 = 2 and r2 = 0; algorithm ends |
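The rows of this table can be generated mechanically. The following Python sketch (the function name `euclid_steps` is an illustrative choice, and nonnegative integer inputs are assumed) records one tuple (i, j, q, r) per step, with i = q·j + r.

```python
def euclid_steps(a: int, b: int) -> list[tuple[int, int, int, int]]:
    """Return one (i, j, q, r) row per step, where i = q*j + r and 0 <= r < j."""
    rows = []
    i, j = a, b
    while j != 0:
        q, r = divmod(i, j)      # quotient and remainder of this step
        rows.append((i, j, q, r))
        i, j = j, r              # the divisor and remainder feed the next step
    return rows

for k, (i, j, q, r) in enumerate(euclid_steps(1071, 1029)):
    print(f"step {k}: {i} = {q} x {j} + {r}")
# step 0: 1071 = 1 x 1029 + 42
# step 1: 1029 = 24 x 42 + 21
# step 2: 42 = 2 x 21 + 0
```

The divisor in the last row, here 21, is the greatest common divisor.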

Implementations

Implementations of the algorithm may be expressed in pseudocode. For example, the division-based version may be programmed as

    function gcd(a, b)
        while b ≠ 0
            t := b
            b := a mod b
            a := t
        return a

At the end of each loop iteration, the variable b holds the latest remainder rk, whereas the variable a holds the previous remainder, rk−1. The dummy variable t merely holds the value of rk−1 while the new rk is being calculated.

For comparison, the subtraction-based version defined by Euclid would be written as

    function gcd(a, b)
        if a = 0 return b
        while b ≠ 0
            if a > b
                a := a − b
            else
                b := b − a
        return a

The integer division step is replaced by repeated subtraction.

The recursive version is briefer than both

    function gcd(a, b)
        if b = 0 return a
        else return gcd(b, a mod b)

This recursion is best illustrated by a worked example. The GCD(1071,1029) is calculated from the equivalent GCD(1029, 1071 mod 1029) = GCD(1029, 42). This in turn is calculated from the equivalent GCD(42, 1029 mod 42) = GCD(42, 21). This in turn is calculated from the equivalent GCD(21, 42 mod 21) = GCD(21, 0) = 21. This approach is well-suited to computers, which generally maintain a stack of function calls.
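For readers who want to run the three variants, the pseudocode above can be transcribed into Python roughly as follows. The function names are illustrative, and the sketch assumes nonnegative integer inputs, as the pseudocode does.

```python
def gcd_division(a: int, b: int) -> int:
    """Division-based version: replace (a, b) by (b, a mod b) until b is zero."""
    while b != 0:
        a, b = b, a % b
    return a

def gcd_subtraction(a: int, b: int) -> int:
    """Subtraction-based version: repeatedly subtract the smaller from the larger."""
    if a == 0:
        return b
    while b != 0:
        if a > b:
            a -= b
        else:
            b -= a
    return a

def gcd_recursive(a: int, b: int) -> int:
    """Recursive version: GCD(a, b) = GCD(b, a mod b), with GCD(a, 0) = a."""
    return a if b == 0 else gcd_recursive(b, a % b)

print(gcd_division(1071, 1029), gcd_subtraction(1071, 1029), gcd_recursive(1071, 1029))
# 21 21 21
```

The tuple assignment in `gcd_division` plays the role of the dummy variable t in the pseudocode.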

Method of least absolute remainders

In another version of Euclid's algorithm, the quotient at each step is increased by one if the resulting negative remainder is smaller in absolute value than the typical positive remainder.[12][13] Previously, one solved the equation

- rk−2 = qk rk−1 + rk

assuming that rk−1 > rk > 0. Assuming that rk−1 is positive, an alternative negative remainder sk can be computed

- rk−2 = (qk + 1) rk−1 + sk

If |sk| < |rk|, then rk is replaced by sk. As shown by Leopold Kronecker, this version requires the fewest number of steps of any version of Euclid's algorithm.[12][13]
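A possible Python sketch of this variant is shown below. The function name `gcd_least_abs` and the returned step count are illustrative additions; the code assumes integer inputs and carries the absolute value of the chosen remainder forward, which leaves the GCD unchanged.

```python
def gcd_least_abs(a: int, b: int) -> tuple[int, int]:
    """Return (gcd, steps) using the remainder of least absolute value at each step."""
    a, b = abs(a), abs(b)
    steps = 0
    while b != 0:
        q, r = divmod(a, b)   # ordinary remainder, 0 <= r < b
        if 2 * r > b:         # the negative remainder s = r - b is smaller in magnitude
            r -= b            # equivalent to using the quotient q + 1
        a, b = b, abs(r)      # GCD(b, r) = GCD(b, |r|)
        steps += 1
    return a, steps

print(gcd_least_abs(89, 55))      # (1, 5)
print(gcd_least_abs(1071, 1029))  # (21, 3)
```

For the consecutive Fibonacci numbers 89 and 55, the ordinary division-based algorithm takes nine steps, whereas this variant takes five.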

Applications

Bézout's identity

Bézout's identity states that the greatest common divisor g can be represented as a linear sum of the original two numbers, a and b. In other words, it is possible to find integers s and t such that g = sa + tb.

The integers s and t can be calculated from the quotients q0, q1, etc. by reversing the order of equations in Euclid's algorithm. Beginning with the next-to-last equation, g can be expressed in terms of the quotient qN−1 and the two preceding remainders, rN−2 and rN−3.

- g = rN−1 = rN−3 − qN−1 rN−2

Those two remainders can be likewise expressed in terms of their quotients and preceding remainders,

- rN−2 = rN−4 − qN−2 rN−3

- rN−3 = rN−5 − qN−3 rN−4

Substituting these formulae for rN−2 and rN−3 into the first equation yields g as a linear sum of the remainders rN−4 and rN−5. The process of substituting remainders by formulae involving their predecessors can be continued until the original numbers a and b are reached

- r2 = r0 − q2 r1

- r1 = b − q1 r0

- r0 = a − q0 b

After all the remainders r0, r1, etc. have been substituted, the final equation expresses g as a linear sum of a and b: g = sa + tb.
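The back-substitution can be expressed compactly as a recursion that unwinds from the last equation to the first. The sketch below is one way to do this in Python; the function name `bezout` is chosen for illustration, and nonnegative integer inputs are assumed.

```python
def bezout(a: int, b: int) -> tuple[int, int, int]:
    """Return (g, s, t) with g = GCD(a, b) and g = s*a + t*b."""
    if b == 0:
        return a, 1, 0               # g = a = 1*a + 0*b
    q, r = divmod(a, b)              # a = q*b + r
    g, s1, t1 = bezout(b, r)         # g = s1*b + t1*r
    # Substitute r = a - q*b:  g = t1*a + (s1 - q*t1)*b
    return g, t1, s1 - q * t1

g, s, t = bezout(1071, 1029)
print(g, s, t)                       # 21 -24 25
print(s * 1071 + t * 1029)           # 21
```

Each return from the recursion performs one substitution of a remainder by its predecessors, in the reverse order of the equations.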

Bézout's identity provides yet another definition of the greatest common divisor g of two numbers a and b. Consider the set of all numbers sa + tb, where s and t are integers. Since a and b are both multiples of g, every member of the set is an integer multiple of g; since g itself belongs to the set by the argument above, g is the smallest positive member of the set. This definition is helpful in considering more sophisticated number fields,[14] since it provides a link between the Euclidean algorithm and the concept of a principal ideal.

Extended Euclidean algorithm

The integers s and t of Bézout's identity can be computed efficiently using the extended Euclidean algorithm. The sequence of equations of Euclid's algorithm

- a = q0 b + r0

- b = q1 r0 + r1

- ...

- rk−2 = qk rk−1 + rk

- ...

- rN−2 = qN rN−1 + 0

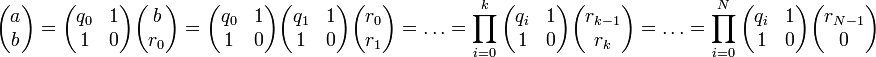

can be written in a compact matrix form[15]

Let M represent the product of all the quotient matrices

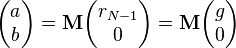

The matrix M can be calculated efficiently, with two multiplications and two additions per step of the Euclidean algorithm. This simplifies the Euclidean algorithm to the form

since the final nonzero remainder rN−1 is the greatest common divisor g.

To express g as a linear sum of a and b, both sides of this equation can be multiplied by the inverse of the matrix M. The inverse is well-defined, since the determinant of M is not zero. Rather, it equals (-1)N+1, since it equals the product of the determinants of the quotient matrices, each of which is negative one. Therefore, the vector of the final remainders can be solved using the inverse of M

Since the top equation gives

- g = (-1)N+1 ( m22 a - m12 b)

the two integers of Bézout's identity are s = (-1)N+1m22 and t = (-1)Nm12.
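The matrix formulation can be checked with a short Python sketch. It assumes that each quotient matrix has rows (q_k, 1) and (1, 0), which is consistent with the determinant of negative one noted above; the function name `extended_gcd_matrix` and the variable `count` (the number of quotient matrices, N+1) are illustrative choices.

```python
def extended_gcd_matrix(a: int, b: int) -> tuple[int, int, int]:
    """Return (g, s, t) with g = s*a + t*b, built from the product M of quotient matrices."""
    m11, m12, m21, m22 = 1, 0, 0, 1      # M starts as the identity matrix
    count = 0                            # number of quotient matrices so far (N + 1 at the end)
    i, j = a, b
    while j != 0:
        q, r = divmod(i, j)
        # M <- M * [[q, 1], [1, 0]]: two multiplications and two additions
        m11, m12 = m11 * q + m12, m11
        m21, m22 = m21 * q + m22, m21
        i, j = j, r
        count += 1
    sign = -1 if count % 2 else 1        # det M = (-1)^(N+1)
    return i, sign * m22, -sign * m12    # s = (-1)^(N+1) m22, t = (-1)^N m12

print(extended_gcd_matrix(1071, 1029))   # (21, -24, 25)
```

For 1071 and 1029 the final matrix has rows (51, 25) and (49, 24), with determinant −1, so that s = −24 and t = 25.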

Euclid's lemma and unique factorization

Bézout's identity is essential to many higher applications of Euclid's algorithm, such as demonstrating the unique factorization of numbers into prime factors. To illustrate this, suppose that a number L can be written as a product of two factors u and v, that is, L = uv. If another number w also divides L but is coprime with u, then w must divide v. For if the greatest common divisor of u and w is 1, then integers s and t can be found such that

- 1 = su + tw

by Bézout's identity. Multiplying both sides by v gives the relation

- v = suv + twv = sL + twv

Since w divides both terms on the right-hand side, it must also divide the left-hand side, v. This result is known as Euclid's lemma.[16] Specifically, if a prime number p divides L, it must divide at least one factor of L. Conversely, if a number w is coprime to each of a series of numbers a1, a2, ..., an, then w is also coprime to their product, a1 × a2 × ... × an.[17]

To prove unique factorization, assume the contrary, namely that there are two independent factorizations of L into m and n prime factors, respectively

- L = p1p2...pm = q1q2...qn

Since each prime p divides L by assumption, it must also divide one of the q factors; since each q is prime as well, it must be that p=q. Iteratively dividing by the p factors shows that each p has an equal counterpart q; the two prime factorizations are identical except for their order, and hence m=n.

Linear Diophantine equations

Diophantine equations are equations in which the solutions are restricted to integers; they are named after the third-century Alexandrian mathematician Diophantus. A typical linear Diophantine problem is to find an unknown integer x that satisfies a congruence in modular arithmetic

- ax ≡ c mod b

This equation asks to find an integer x such that ax equals c, possibly with the addition or subtraction of multiples of b. In other words, the equation seeks to find unknown integers x and y such that[18]

- ax + by = c

where y is an integer. Let g be the greatest common divisor of a and b. Both terms on the left-hand side are divisible by g; therefore, c must also be divisible by g, or the equation has no solutions. By dividing both sides by c/g, the equation can be reduced to Bézout's identity

- sa + tb = g

where s and t can be found by the extended Euclidean algorithm.[19] This provides one solution to the Diophantine equation, x1 = s (c/g) and y1 = t (c/g).

In general, a linear Diophantine equation has no solutions, or an infinite number of solutions. To find the latter, consider two solutions, (x1, y1) and (x2, y2)

- ax1 + by1 = c = ax2 + by2

or equivalently

- a(x1 - x2) = b(y2 - y1)

Therefore, the smallest difference between two x solutions is b/g, whereas the smallest difference between two y solutions is a/g. Thus, the solutions may be expressed as

- x = x1 − bu/g

- y = y1 + au/g

By allowing u to vary over all possible integers, an infinite family of solutions can be generated from a single solution (x1, y1). If the solutions are required to be positive integers (x>0, y>0), only a finite number of solutions may be possible. This restriction on the acceptable solutions allows systems of Diophantine equations to be solved with more unknowns than equations; this is impossible for a system of linear equations when the solutions can be any real number.
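The procedure of this section can be sketched in Python as follows. The function names are illustrative, a and b are assumed to be positive integers, and the free integer parameter of the solution family is called u in the code to keep it apart from the Bézout coefficient t.

```python
def extended_gcd(a: int, b: int) -> tuple[int, int, int]:
    """Return (g, s, t) with g = GCD(a, b) = s*a + t*b (iterative extended Euclid)."""
    s0, t0, s1, t1 = 1, 0, 0, 1
    while b != 0:
        q, r = divmod(a, b)
        a, b = b, r
        s0, s1 = s1, s0 - q * s1
        t0, t1 = t1, t0 - q * t1
    return a, s0, t0

def solve_linear_diophantine(a: int, b: int, c: int):
    """Solve a*x + b*y = c over the integers.

    Returns (x1, y1, dx, dy) such that x = x1 - dx*u, y = y1 + dy*u is a
    solution for every integer u, or None if no solution exists.
    """
    g, s, t = extended_gcd(a, b)
    if c % g != 0:
        return None                      # c must be divisible by g
    k = c // g
    return s * k, t * k, b // g, a // g  # particular solution and step sizes b/g, a/g

print(solve_linear_diophantine(1071, 1029, 42))  # (-48, 50, 49, 51)
print(solve_linear_diophantine(6, 4, 3))         # None
```

In the first example, x = −48 − 49u and y = 50 + 51u satisfy 1071x + 1029y = 42 for every integer u.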

Multiplicative inverses and the RSA algorithm

A finite field is a set of numbers that obey the usual laws of addition, subtraction, multiplication and division of real numbers, such as commutativity, associativity and distributivity. A simple example of such a finite field is the set of 13 numbers {0, 1, 2, ..., 12} using modular arithmetic. In this field, the result of any mathematical operation (addition, subtraction, multiplication or division) is reduced modulo 13; that is, multiples of 13 are added or subtracted until the result is brought within the range 0 to 12. For example, 5×7 = 35, and 35 mod 13 = 9. Such Galois fields can be defined for any prime p, and are denoted as GF(p); using more sophisticated definitions, they can also be defined for any power m of a prime, GF(p^m).

In such a field with m numbers, every nonzero element a has a unique multiplicative inverse a^(−1) such that a a^(−1) = a^(−1) a ≡ 1 mod m. This inverse can be found by solving the congruence equation ax ≡ 1 mod m,[20] or the equivalent linear Diophantine equation[21]

- ax + my = 1

This equation can be solved by the Euclidean algorithm, as described above. Finding multiplicative inverses is an essential step in the RSA algorithm, which is widely used in electronic commerce; specifically, the equation determines the integer used to decrypt the message.[22]
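A minimal Python sketch of this computation follows. The function name `mod_inverse` is an illustrative choice; the loop is the extended Euclidean algorithm with only the coefficient of a tracked, since the coefficient y of m is not needed.

```python
def mod_inverse(a: int, m: int) -> int:
    """Return x in the range 0..m-1 with a*x ≡ 1 (mod m).

    Raises ValueError when GCD(a, m) != 1, in which case no inverse exists.
    """
    r0, r1 = a % m, m        # invariant: r0 ≡ x0*a and r1 ≡ x1*a (mod m)
    x0, x1 = 1, 0
    while r1 != 0:
        q = r0 // r1
        r0, r1 = r1, r0 - q * r1
        x0, x1 = x1, x0 - q * x1
    if r0 != 1:
        raise ValueError("a has no inverse modulo m")
    return x0 % m

print(mod_inverse(5, 13))    # 8, since 5 x 8 = 40 = 3 x 13 + 1
```

In Python 3.8 and later, the built-in call `pow(5, -1, 13)` returns the same value.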

Chinese remainder theorem

Euclid's algorithm can also be used to solve multiple linear Diophantine equations. Such equations arise in the Chinese remainder theorem, which describes a novel method to represent an integer x. Instead of representing an integer by its digits, it may be represented by its remainders xi modulo several coprime numbers mi.[23]

- x1 ≡ x mod m1

- x2 ≡ x mod m2

- ...

- xN ≡ x mod mN

The goal is to determine x from its N remainders xi. The solution is to combine the multiple equations into a single linear Diophantine equation with a much larger modulus M that is the product of all the individual moduli mi, and define the Mi

- Mi = M / mi

Thus, each Mi is the product of all the moduli except mi. The solution depends on finding N new numbers hi such that

- Mihi ≡ 1 mod mi

With these numbers hi, any integer x can be reconstructed from its remainders xi by the equation

- x ≡ (x1M1h1 + x2M2h2 + ... + xNMNhN ) mod M

Since these numbers hi are the multiplicative inverses of the Mi, they may be found using Euclid's algorithm as described in the previous subsection.
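The reconstruction formula can be sketched in Python as follows. The function name `crt` is illustrative, the moduli are assumed to be pairwise coprime, and the inverses hi are obtained with the built-in `pow(M_i, -1, m_i)` (Python 3.8 or later); an extended Euclidean routine could be substituted for that call.

```python
from math import prod

def crt(remainders: list[int], moduli: list[int]) -> int:
    """Reconstruct x modulo M = m_1 * ... * m_N from its remainders x_i mod m_i."""
    M = prod(moduli)
    x = 0
    for x_i, m_i in zip(remainders, moduli):
        M_i = M // m_i                 # product of all moduli except m_i
        h_i = pow(M_i, -1, m_i)        # multiplicative inverse: M_i * h_i ≡ 1 (mod m_i)
        x += x_i * M_i * h_i
    return x % M

print(crt([2, 3, 2], [3, 5, 7]))       # 23
```

Here 23 leaves remainders 2, 3 and 2 on division by 3, 5 and 7, respectively.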

Continued fractions

The Euclidean algorithm has a close relationship with continued fractions.[24] The sequence of equations can be written in the form

- a/b = q0 + r0/b

- b/r0 = q1 + r1/r0

- r0/r1 = q2 + r2/r1

- ...

- rk−2/rk−1 = qk + rk/rk−1

- ...

- rN−2/rN−1 = qN

The last term on the right-hand side always equals the inverse of the left-hand side of the next equation. Thus, the first two equations may be combined to form

- a/b = q0 + 1/(q1 + r1/r0)

The third equation may be used to substitute the denominator term r1/r0, yielding

- a/b = q0 + 1/(q1 + 1/(q2 + r2/r1))

The final ratio of remainders rk/rk−1 can always be replaced using the next equation in the series, up to the final equation. The result is a continued fraction

- a/b = q0 + 1/(q1 + 1/(q2 + 1/(... + 1/qN))...) = [q0; q1, q2, ..., qN]

In the worked example above, the GCD(1071, 1029) was calculated, and the quotients qk were 1, 24 and 2, respectively. Therefore, the fraction 1071/1029 may be written

- 1071/1029 = 1 + 1/(24 + 1/2) = [1; 24, 2]

as can be confirmed by calculation.
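The confirmation can be carried out exactly with rational arithmetic. In the Python sketch below, the function names `continued_fraction` and `evaluate` are illustrative; the first collects the quotients of Euclid's algorithm, and the second folds them back into a fraction from the innermost term outward.

```python
from fractions import Fraction

def continued_fraction(a: int, b: int) -> list[int]:
    """Return the quotients [q0, q1, ..., qN] produced by Euclid's algorithm on a/b."""
    quotients = []
    while b != 0:
        q, r = divmod(a, b)
        quotients.append(q)
        a, b = b, r
    return quotients

def evaluate(quotients: list[int]) -> Fraction:
    """Evaluate q0 + 1/(q1 + 1/(... + 1/qN)) exactly."""
    value = Fraction(quotients[-1])
    for q in reversed(quotients[:-1]):
        value = q + 1 / value
    return value

cf = continued_fraction(1071, 1029)
print(cf)             # [1, 24, 2]
print(evaluate(cf))   # 51/49
```

The result 51/49 is the fraction 1071/1029 reduced by the common factor 21.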

Factorization algorithms

Calculating a greatest common divisor is an essential step in several modern factorization algorithms, such as Shor's algorithm, Dixon's factorization method and the Lenstra elliptic curve factorization. The Euclidean algorithm may be used to find this GCD efficiently. Continued fraction factorization uses continued fractions, which are determined using Euclid's algorithm.

Algorithmic efficiency

Euclid's algorithm is not the only algorithm for identifying the GCD of two integers, but it is among the most efficient. Its computational efficiency can be described in terms of the number of steps it requires, multiplied by the computational expense of each step. As shown first by Gabriel Lamé in 1844,[25] the number of steps required for completion is never more than five times the number h of digits (base 10) of the smaller number. Lamé's worst-case calculation represents the beginning of computational complexity theory.[26] Since the computational expense of each step is also typically of order h, the overall expense grows like h².

Number of steps

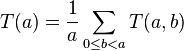

The number of steps to calculate the GCD of two natural numbers, a and b, is usually denoted by T(a, b). If g is the GCD of a and b, then a=mg and b=ng for two coprime numbers m and n. Then

- T(a, b) = T(m, n)

as may be seen by dividing all the steps in the Euclidean algorithm by g.[27] By the same argument, the number of steps remains the same if a and b are multiplied by a common factor w: T(a, b) = T(wa, wb). Therefore, the number of steps T may vary dramatically between neighboring pairs of numbers, such as T(a, b) and T(a, b+1), depending on the size of the two GCDs.

The recursive nature of the Euclidean algorithm gives another equation

- T(a, b) = 1 + T(b, a mod b) = 1 + T(b, r0) = 2 + T(r0, r1) = ... = N + T(rN−2, rN−1) = N + 1

where T(x, 0) = 0 by assumption.[28]

Worst-case number of steps

If the Euclidean algorithm requires N steps for a pair of natural numbers a>b>0, the smallest values of a and b for which this is true are the Fibonacci numbers F_{N+2} and F_{N+1}, respectively.[29] This can be shown by induction.[30] If N=1, b divides a with no remainder; the smallest natural numbers for which this is true are b=1 and a=2, which are F_2 and F_3, respectively. Now assume that the result holds for all values of N up to M−1. The first step of the M-step algorithm is a = q0 b + r0, and the second step is b = q1 r0 + r1. Since the algorithm is recursive, it requires M−1 steps to find GCD(b, r0), and the smallest values of b and r0 are F_{M+1} and F_M. The smallest value of a is therefore attained when q0 = 1, which gives a = b + r0 = F_{M+1} + F_M = F_{M+2}. This proof by Gabriel Lamé was the first practical application of the Fibonacci numbers.[31]

This result suffices to show that the number of steps in Euclid's algorithm can never be more than five times the number of digits (base 10) of the smaller number b.[32] For if the algorithm requires N steps, then b is greater than or equal to F_{N+1}, which in turn is greater than or equal to φ^(N−1), where φ is the golden ratio. Since b ≥ φ^(N−1), it follows that N−1 ≤ log_φ b. Since log10 φ > 1/5, (N−1)/5 < log10 φ × log_φ b = log10 b. Thus, N−1 < 5 log10 b < 5h, where h is the number of digits of b, and so N ≤ 5h. The Euclidean algorithm therefore always needs O(h) divisions, where h is the number of digits in the smaller input.
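Both statements can be checked numerically for small inputs. The Python sketch below uses two illustrative helpers: `count_steps`, which counts divisions until the remainder is zero, and `fib`, which uses the indexing F_1 = F_2 = 1 assumed in the text.

```python
def count_steps(a: int, b: int) -> int:
    """Number of division steps Euclid's algorithm performs on (a, b)."""
    steps = 0
    while b != 0:
        a, b = b, a % b
        steps += 1
    return steps

def fib(n: int) -> int:
    """Fibonacci number F_n with F_1 = F_2 = 1."""
    x, y = 0, 1
    for _ in range(n):
        x, y = y, x + y
    return x

# Consecutive Fibonacci numbers F_{N+2}, F_{N+1} need exactly N steps.
for N in range(1, 11):
    assert count_steps(fib(N + 2), fib(N + 1)) == N

# Lamé's bound: at most five steps per decimal digit of the smaller number.
worst = max(count_steps(a, b) - 5 * len(str(b))
            for b in range(1, 200) for a in range(b + 1, 400))
print(worst <= 0)   # True
```

The brute-force search covers only a small range and is a sanity check, not a proof.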

Average number of steps

The average number of steps taken by the Euclidean algorithm has been defined in several ways. One definition is the average time T(a) required to calculate the GCD of a given number a and a smaller natural number b chosen with equal probability from the integers 0 to a−1[33]

- T(a) = (1/a) Σ_{0≤b<a} T(a, b)

However, since T(a, b) fluctuates dramatically with the GCD of the two numbers, the averaged function T(a) is likewise "noisy".[34]

To reduce this noise, a different average τ(a) is taken over all numbers coprime with a

- τ(a) = (1/φ(a)) Σ_{0≤b<a, GCD(a,b)=1} T(a, b)

There are φ(a) coprime integers less than a, where φ is Euler's totient function. This tau average grows steadily with a and is well-approximated by[35]

- τ(a) ≈ (12/π²) ln 2 ln a + C + O(a^(−1/6+ε))

where the constant C≈1.467 is given by

- C = −(1/2) + (6 ln 2/π²) (4γ − (24/π²) ζ'(2) + 3 ln 2 − 2)

where γ is the Euler–Mascheroni constant and ζ' is the derivative of the Riemann zeta function.[36][37] The leading coefficient (12/π²) ln 2 was determined by two independent methods.[38][39]

Since the first average can be calculated from the tau average by summing over the divisors d of a[40]

- T(a) = (1/a) Σ_{d|a} φ(d) τ(d)

it can be approximated by the formula[41]

- T(a) ≈ C + (12/π²) ln 2 (ln a − Σ_{d|a} Λ(d)/d)

where Λ(d) is the Mangoldt function.[42]

Yet another average Y(n) is the mean number of steps required when both a and b are chosen randomly (with uniform distribution) from 1 to n

- Y(n) = (1/n²) Σ_{a=1..n} Σ_{b=1..n} T(a, b)

Substituting the approximate formula for T(a) into this equation yields an estimate for Y(n)[43]

- Y(n) ≈ (12/π²) ln 2 ln n + 0.06

Computational expense per step

In each step k of the Euclidean algorithm, the quotient qk and remainder rk are computed for a given pair of integers i and j

- i = qk j + rk

The computational expense per step is associated chiefly with finding qk, since the remainder rk can be calculated quickly from i, j, and qk

- rk = i − qk j

The computational expense of dividing h-bit numbers scales as O(h(l+1)), where l is the length of the quotient.

For comparison, Euclid's original subtraction-based algorithm can be much slower. A single integer division is equivalent to q subtractions, where q is the quotient. If the ratio of a and b is very large, the quotient is large and many subtractions will be required. On the other hand, it has been shown that the quotients are very likely to be small integers. In particular, if a and b are successive Fibonacci numbers, every quotient except the last equals one, so each division is replaced by a single cheaper subtraction, and Euclid's original algorithm is faster than the usual division-based one.

Overall quadratic efficiency

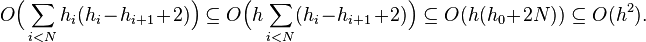

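Combining these two results shows that the computational cost of Euclid's algorithm grows like h². Consider the computation of gcd(a,b) where a and b have at most h bits. Let h0, h1, ..., hN−1 represent the number of digits in the successive remainders r0, r1, ..., rN−1. The division at step i costs O(h_i (h_i − h_{i+1} + 2)), because its quotient has at most h_i − h_{i+1} + 1 digits. Since N grows linearly with h, the running time is bounded by

- O( Σ_{i<N} h_i (h_i − h_{i+1} + 2) ) ⊆ O( h Σ_{i<N} (h_i − h_{i+1} + 2) ) ⊆ O( h (h_0 + 2N) ) ⊆ O(h²)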

Efficiency of alternative methods

Euclid's algorithm is widely used in practice, especially for small numbers, due to its simplicity. For comparison, the efficiency of alternatives to Euclid's algorithm may be determined.

One inefficient approach to finding the GCD of two natural numbers a and b is to calculate all their common divisors; the GCD is then the largest common divisor. The common divisors can be found by dividing both numbers by successive integers from 2 to the smaller number b. The number of steps of this approach grows linearly with b, or exponentially in the number of digits. Another inefficient approach is to find the prime factors of one or both numbers. The GCD is then the product of the prime factors that divide both numbers. Prime factorization is also inefficient, and likely scales exponentially with the number of digits.

An efficient alternative, the binary GCD algorithm, exploits the binary representation used by computers to avoid divisions and thereby increase efficiency, although it too is O(n²). It merely shrinks the constant hidden by the big-O notation on many real machines.
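One common formulation of the binary GCD algorithm is sketched below, as an illustration rather than a reference implementation. It assumes nonnegative integer inputs and uses only shifts, parity tests and subtraction; the name `binary_gcd` is chosen here for illustration.

```python
def binary_gcd(a, b):
    """Binary GCD for nonnegative integers, using shifts and subtraction only."""
    if a == 0:
        return b
    if b == 0:
        return a
    # Factor out the power of two shared by both numbers.
    shift = 0
    while ((a | b) & 1) == 0:
        a >>= 1
        b >>= 1
        shift += 1
    # Any remaining factors of two in a are not common, so discard them.
    while (a & 1) == 0:
        a >>= 1
    while b:
        # a is odd here, so factors of two in b cannot be common either.
        while (b & 1) == 0:
            b >>= 1
        # Both numbers are odd: keep a <= b and replace b by the (even) difference.
        if a > b:
            a, b = b, a
        b -= a
    # Restore the shared power of two.
    return a << shift
```

Each pass through the outer loop removes at least one bit from b, which is consistent with the quadratic bound quoted above.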

Additional efficiency can be gleaned by examining only the leading digits of the two numbers a and b. This approach leads to subquadratic integer GCD algorithms, such as those of Schönhage, Knuth and Schönhage, and Stehlé and Zimmermann. All of these algorithms have the same asymptotic efficiency; they scale as O(n (log n)² (log log n)).[44][45]

Other domains of numbers

Rational and real numbers

Euclid's algorithm can be applied to real numbers, as described by Euclid in Book 10 of his Elements. The goal of the algorithm is to identify a real number g such that two given real numbers, a and b, are integer multiples of it: a = mg and b = ng, where m and n are integers. This identification is equivalent to finding an integer relation among the real numbers a and b; that is, it determines integers s and t such that sa + tb = 0.

The real-number Euclidean algorithm differs from its integer counterpart in two respects. First, all the remainders rk are real numbers, although the quotients qk are integers as before. Second, the algorithm is not guaranteed to end in a finite number N of steps. If it does, the fraction a/b is a rational number, i.e., the ratio of two integers

- a/b = mg/ng = m/n

and can be written as a finite continued fraction [q0; q1, q2, ..., qN]. If the algorithm does not stop, the fraction a/b is an irrational number and can be described by an infinite continued fraction [q0; q1, q2, ...]. Examples of infinite continued fractions are the golden ratio φ = [1; 1, 1, ...] and the square root of two, √2 = [1; 2, 2, ...]. Generally speaking, the algorithm is unlikely to stop, since almost all ratios a/b of two real numbers are irrational.

An infinite continued fraction may be truncated at a step k, giving [q₀; q₁, q₂, ..., qₖ], to yield an approximation to a/b that improves as k is increased. The approximation is described by convergents mₖ/nₖ; the numerator and denominator are coprime and obey the recursion

- mₖ = qₖ mₖ₋₁ + mₖ₋₂

- nₖ = qₖ nₖ₋₁ + nₖ₋₂

where m₋₁ = n₋₂ = 1 and m₋₂ = n₋₁ = 0 are the initial values of the recursion. The convergent mₖ/nₖ is the best rational number approximation to a/b with denominator nₖ

- | (a/b) − (mₖ/nₖ) | < 1/nₖ²
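The recursion for the convergents can be sketched in a few lines. This example assumes a rational ratio a/b of positive integers, so that the quotients come from the integer algorithm and the continued fraction is finite; the function names are illustrative.

```python
from fractions import Fraction


def quotients(a, b):
    """Quotients q0, q1, ... produced by Euclid's algorithm on integers a, b > 0."""
    qs = []
    while b:
        q, r = divmod(a, b)
        qs.append(q)
        a, b = b, r
    return qs


def convergents(qs):
    """Pairs (m_k, n_k) from m_k = q_k m_{k-1} + m_{k-2} and likewise for n_k."""
    m_prev2, m_prev1 = 0, 1  # m_{-2}, m_{-1}
    n_prev2, n_prev1 = 1, 0  # n_{-2}, n_{-1}
    result = []
    for q in qs:
        m = q * m_prev1 + m_prev2
        n = q * n_prev1 + n_prev2
        result.append((m, n))
        m_prev2, m_prev1 = m_prev1, m
        n_prev2, n_prev1 = n_prev1, n
    return result


if __name__ == "__main__":
    a, b = 1989, 867
    for m, n in convergents(quotients(a, b)):
        error = abs(Fraction(a, b) - Fraction(m, n))
        print(f"{m}/{n}  error={error}  bound={Fraction(1, n * n)}")
```

For a rational ratio, the last convergent is a/b in lowest terms, so its error is zero.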

Polynomials

Polynomials in a single variable x can be added, multiplied and factored into irreducible polynomials, which are the analogs of the prime numbers for integers. The greatest common divisor of two such polynomials is defined as the product of their shared irreducible polynomials, which can be identified using the Euclidean algorithm.[46] For example, consider the following two quartic polynomials, which both factor into two quadratic polynomials

- a(x) = x⁴ − 4x³ + 4x² − 3x + 14 = (x² − 5x + 7)(x² + x + 2)

and

- b(x) = x⁴ + 8x³ + 12x² + 17x + 6 = (x² + 7x + 3)(x² + x + 2).

From this factorization, the greatest common divisor of the polynomials is x² + x + 2.

The GCD polynomial of two polynomials, a(x) and b(x), can be found without factorization using the Euclidean algorithm. The basic procedure is similar to that for integers; at each step k, a quotient polynomial qk(x) and a remainder polynomial rk(x) are identified that satisfy the equations

- a(x) = q0(x) b(x) + r0(x)

- b(x) = q1(x) r0(x) + r1(x)

- r0(x) = q2(x) r1(x) + r2(x)

- etc.

where the degree of each remainder is smaller than the degree of its predecessor deg[rk(x)] < deg[rk−1(x)]. Since the degree is a nonnegative integer, and since it decreases with every step, the Euclidean algorithm concludes in a finite number of steps. The final nonzero remainder is the greatest common divisor of the original two polynomials, a(x) and b(x).

In the example above, dividing a(x) by b(x) yields a remainder proportional to r0(x) = x³ + (2/3) x² + (5/3) x − (2/3). In the next step, b(x) is divided by r0(x), yielding a remainder proportional to r1(x) = x² + x + 2. Constant factors do not affect divisibility, so each remainder is scaled here to have a leading coefficient of 1. Finally, dividing r0(x) by r1(x) yields no remainder, indicating that r1(x) is the greatest common divisor polynomial of a(x) and b(x).
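The worked example can be checked with a short sketch of polynomial long division over the rationals. The representation (a list of coefficients, highest degree first) and the helper names `strip`, `poly_divmod` and `poly_gcd` are assumptions made for this illustration.

```python
from fractions import Fraction


def strip(p):
    """Drop leading zero coefficients; the zero polynomial becomes []."""
    i = 0
    while i < len(p) and p[i] == 0:
        i += 1
    return p[i:]


def poly_divmod(a, b):
    """Long division of a by b (b nonzero with nonzero leading coefficient)."""
    a = [Fraction(c) for c in a]
    b = [Fraction(c) for c in b]
    quotient = []
    while len(a) >= len(b):
        factor = a[0] / b[0]
        quotient.append(factor)
        # Subtract factor * b, aligned at the leading term, which then cancels.
        for i in range(len(b)):
            a[i] -= factor * b[i]
        a.pop(0)
    return quotient, strip(a)


def poly_gcd(a, b):
    """Euclidean algorithm for polynomials; assumes a and b are not both zero."""
    a = strip([Fraction(c) for c in a])
    b = strip([Fraction(c) for c in b])
    while b:
        _, r = poly_divmod(a, b)
        a, b = b, r
    # The GCD is defined only up to a constant factor; scale it to be monic.
    return [c / a[0] for c in a]


if __name__ == "__main__":
    a = [1, -4, 4, -3, 14]   # x^4 - 4x^3 + 4x^2 - 3x + 14
    b = [1, 8, 12, 17, 6]    # x^4 + 8x^3 + 12x^2 + 17x + 6
    print(poly_gcd(a, b))
```

Exact rational arithmetic is used because floating-point coefficients would make the test for a zero remainder unreliable.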

Many of the applications described above for integers carry over to polynomials.[47] The Euclidean algorithm can be used to solve linear Diophantine equations and Chinese remainder problems for polynomials; continued fractions of polynomials can also be defined.

The polynomial Euclidean algorithm has other applications as well, such as Sturm chains, a method for counting the number of real roots of a polynomial within a given interval on the real axis. This has applications in several areas, such as the Routh-Hurwitz stability criterion in control theory.

Finally, the coefficients of the polynomials need not be drawn from integers, real numbers or even the complex numbers. For example, the coefficients may be drawn from a general field, such as the finite fields GF(p) described above. The corresponding conclusions about the Euclidean algorithm and its applications hold even for such polynomials.[46]

Gaussian integers

The Gaussian integers are complex numbers of the form α = u + vi, where u and v are ordinary integers and i is the square root of negative one. By defining an analog of the Euclidean algorithm, Gaussian integers can be shown to be uniquely factorizable, by the argument above.[48] This unique factorization is useful in many applications, such as deriving all Pythagorean triples or proving Fermat's theorem on sums of two squares.[49]

The Euclidean algorithm developed for two Gaussian integers α and β is nearly the same as that for normal integers, but differs in two respects. As before, the basic steps are to identify quotients qk and remainders rk such that

- α = q0β + r0

- β = q1r0 + r1

- r0 = q2r1 + r2

- etc.

where each remainder is smaller than its predecessor. The first difference is that the quotients and remainders are themselves Gaussian integers, and thus are complex numbers. The quotients qk are generally found by rounding the real and imaginary parts of the exact ratio (such as the complex number α/β) to the nearest integers. The second difference lies in the necessity of defining how one complex remainder can be "smaller" than another. To do this, we define a norm function f(u + vi) = u² + v², which converts every Gaussian integer u + vi into a normal integer. After each step k of the Euclidean algorithm, the norm of the remainder f(rk) is smaller than the norm of the preceding remainder, f(rk−1). Since the norm is a nonnegative integer and decreases with every step, the Euclidean algorithm for Gaussian integers ends in a finite number of steps. The final nonzero remainder is the GCD(α,β), the Gaussian integer of largest norm that divides both α and β; it is unique up to multiplication by a unit, ±1 or ±i.
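The procedure can be sketched with Gaussian integers stored as pairs (u, v) of ordinary integers, which avoids floating-point rounding. The representation and the names `norm`, `nearest_quotient` and `gaussian_gcd` are assumptions of this example.

```python
def norm(z):
    """Norm f(u + vi) = u^2 + v^2 of a Gaussian integer given as (u, v)."""
    u, v = z
    return u * u + v * v


def nearest_quotient(alpha, beta):
    """Round the exact ratio alpha/beta to the nearest Gaussian integer."""
    (a, b), (c, d) = alpha, beta
    n = norm(beta)
    # alpha / beta = alpha * conj(beta) / N(beta)
    re = a * c + b * d
    im = b * c - a * d
    # (2x + n) // (2n) rounds x/n to the nearest integer in exact arithmetic.
    return ((2 * re + n) // (2 * n), (2 * im + n) // (2 * n))


def gaussian_gcd(alpha, beta):
    """Euclidean algorithm for Gaussian integers; result is unique up to a unit."""
    while beta != (0, 0):
        q = nearest_quotient(alpha, beta)
        # Remainder r = alpha - q * beta; its norm is at most N(beta) / 2.
        r = (alpha[0] - (q[0] * beta[0] - q[1] * beta[1]),
             alpha[1] - (q[0] * beta[1] + q[1] * beta[0]))
        alpha, beta = beta, r
    return alpha


if __name__ == "__main__":
    g = gaussian_gcd((11, 3), (1, 8))
    print(g, norm(g))
```

Because the quotient is rounded in both components, the remainder's norm is at most half the divisor's norm, so the loop must terminate.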

The unique factorization of Gaussian integers permits the computation of all possible Pythagorean triples, which are sets of three integers (a, b, c) that satisfy the Pythagorean theorem

- c² = a² + b²

Well-known examples of Pythagorean triples include (3, 4, 5) and (5, 12, 13). The right-hand side may be factored in Gaussian integers

- c² = a² + b² = (a + bi) (a − bi)

Since Gaussian integers factor uniquely, and since the left-hand side c² is a square, each of the two factors, a + bi and a − bi, must be a square itself, at least when a and b have no common factor, so that the two factors are coprime. Thus, the first factor can be written

- a + bi = (u + vi)²

from which two formulae can be derived

- a = u² − v²

- b = 2uv

If these two equations are satisfied, a and b will always form a Pythagorean triple with c = u² + v², as may be verified by substitution. Conversely, for any primitive Pythagorean triple (one whose members have no common factor), there must be integers u and v such that the two equations are satisfied, possibly after exchanging a and b. Hence, all primitive Pythagorean triples are generated from suitable choices of the integers u and v, and every other triple is an integer multiple of a primitive one. For illustration, u = 2 and v = 1 yields the Pythagorean triple (3, 4, 5), whereas the choice u = 3 and v = 2 yields the triple (5, 12, 13).
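The two formulae are easy to check by direct computation. In this small sketch the function name `triple` is illustrative, and c is taken to be u² + v², the norm of u + vi.

```python
def triple(u, v):
    """Triple (a, b, c) obtained from a + bi = (u + vi)^2, with c = u^2 + v^2."""
    return (u * u - v * v, 2 * u * v, u * u + v * v)


if __name__ == "__main__":
    # Enumerate a few choices with u > v > 0 and confirm a^2 + b^2 = c^2.
    for u in range(2, 5):
        for v in range(1, u):
            a, b, c = triple(u, v)
            print((u, v), (a, b, c), a * a + b * b == c * c)
```

Choices of u and v that share a factor, or that are both odd, give triples that are multiples of smaller ones; for example, u = 3 and v = 1 gives (8, 6, 10).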

Many of the other applications of the Euclidean algorithm carry over to Gaussian integers. For example, it can be used to solve linear Diophantine equations and Chinese remainder problems for Gaussian integers; continued fractions of Gaussian integers can also be defined.

Euclidean domains

A set of elements is called a Euclidean domain if they form a commutative ring and if an analog of Euclid's algorithm can be performed on them. Any number from a Euclidean domain can be factored uniquely into irreducible elements, and thus, any Euclidean domain is a unique factorization domain (UFD); but the converse is not true. The Euclidean domains are a subset of the GCD domains, domains in which a greatest common divisor of two numbers always exists; in other words, a greatest common divisor may exist (for all elements in a domain), although it may not be possible to find it using a Euclidean algorithm. A Euclidean domain is always a principal ideal domain (PID), an integral domain in which every ideal is a principal ideal. However, again, the converse is not true; not every PID is a Euclidean domain.

For Euclidean domains R, Euclid's algorithm is generalized as follows. A generalized Euclidean algorithm requires a mapping f of R into nonnegative integers such that, for any two nonzero elements a and b of the domain, there exist q and r in R such that a = qb + r and f(r) < f(b). This mapping corresponds to the norm function used to order the Gaussian integers above. The function f can be the magnitude of the number, or the degree of a polynomial. The basic principle is that each step of the algorithm reduces f inexorably; hence, if f can be reduced only a finite number of times, the algorithm must stop in a finite number of steps.

The quadratic integers are helpful to illustrate the limits of Euclidean domains. Quadratic integers are generalizations of the Gaussian integers mentioned above, in which the imaginary unit i is replaced by a number ω. Thus, they have the form u + v ω, where u and v are integers and ω has one of two forms, depending on a parameter D. If D does not equal a multiple of four plus one, then

- ω = √D.

If, however, D does equal a multiple of four plus one (such as 5, 17, or −19), then

- ω = (1 + √D)/2.

The domain of such numbers is Euclidean only for certain values of D. In particular, quadratic integers form a Euclidean domain with respect to the usual norm if and only if D = −11, −7, −3, −2, −1, 2, 3, 5, 6, 7, 11, 13, 17, 19, 21, 29, 33, 37, 41, 57 or 73.[50]

Fermat's Last Theorem

The Euclidean algorithm has been used to verify Fermat's Last Theorem for specific values of the exponent n. This theorem states that the equation

- xⁿ + yⁿ = zⁿ

has no solutions for any n>2, provided that x, y, z, and n are all integers and that xyz ≠ 0; in other words, none of the variables can be zero. This equation is an example of a nonlinear Diophantine equation.

As with the Pythagorean triples above, this equation can be factored

- (x + y)(x + ωy)(x + ω²y)...(x + ωⁿ⁻¹y) = zⁿ

where n is odd and ω = e^(2πi/n) is an nth root of 1, that is,

- ωⁿ = 1

Now assume that the factors on the left-hand side of the equation form a Euclidean domain and can be factored uniquely.

Noncommutative rings

It is also possible to apply the Euclidean algorithm to noncommutative rings such as the set of Hurwitz quaternions.[51] Since multiplication is not commutative, the right and left divisors must be distinguished. Let α and β represent two numbers from such a ring. Then, they have a common right divisor δ if α = ξδ and β = ηδ for some choice of ξ and η in the ring. Similarly, they have a common left divisor if α = δξ and β = δη for some choice of ξ and η in the ring. The Euclidean algorithm can be defined for either choice of divisor. For example, choosing the right divisors, the first step in finding the GCD(α, β) by the Euclidean algorithm can be written

- ρ0 = α - ψ0β = (ξ - ψ0η)δ

where ψ0 represents the quotient and ρ0 the remainder. This equation shows that any common right divisor of α and β is likewise a common divisor of the remainder ρ0. The analogous equation for the left divisors would be

- ρ0 = α - βψ0 = δ(ξ - ηψ0)

With either choice, the process is repeated as above until the greatest common right or left divisor is identified. As in the Euclidean domain, the "size" of the remainder ρ0 must be strictly smaller than β, and there must be only a finite number of possible sizes for ρ0, so that the algorithm is guaranteed to terminate.

Most of the results for the GCD carry over to noncommutative numbers. For example, Bézout's identity states that the right GCD(α, β) can be expressed as a linear combination of α and β. In other words, there are numbers σ and τ such that

- Γright = σα + τβ

The analogous identity for the left GCD is nearly the same

- Γleft = ασ + βτ

Bézout's identity suffices to show that the numbers can be factored uniquely, as discussed above. It also can be used to solve Diophantine equations and find multiplicative inverses in finite fields.

Hurwitz quaternions and the four-square theorem

The Euclidean algorithm is useful in proving Lagrange's four-square theorem, by demonstrating the unique factorization of Hurwitz quaternions. Lagrange's theorem states that any natural number can be represented as the sum of four squares

- p = a₀² + a₁² + a₂² + a₃²

where the four numbers (a0, a1, a2, a3) are integers. This theorem corresponds to Fermat's theorem on sums of two squares, which can likewise be proven using the unique factorization of Gaussian integers, again demonstrated using the Euclidean algorithm.

The quaternions are defined as a set of numbers α

- α = a0 + a1 i + a2 j + a3 k

where the boldfaced numbers i, j, and k have the properties

- i² = j² = k² = ijk = −1

From these definitions, the product ij = k but ji = −k. In other words, multiplication of quaternions is not commutative; the product αβ need not equal βα. The numbers (a0, a1, a2, a3) are real numbers and are called the components of the quaternion. For every quaternion α, a conjugate quaternion ᾶ can be defined

- ᾶ = a0 − a1 i − a2 j − a3 k

The product αᾶ = ᾶα is called the norm N(α) of the quaternion, and is a nonnegative real number.

- N(α) = a₀² + a₁² + a₂² + a₃²

The norm of a real number (a₀, 0, 0, 0) equals the square of the number, N = a₀².

The Hurwitz quaternions are the analog of integers for quaternions. They consist of all quaternions with integer components and all quaternions with half-integer components. These two sets can be combined into a single formula

- α = ½ A0 (1 + i + j + k ) + A1 i + A2 j + A3 k

where the four numbers (A0, A1, A2, A3) are all integers. The set of quaternions formed from such four integer components forms a ring; in particular, the sum or product of any two such Hurwitz quaternions is likewise a Hurwitz quaternion. The greatest common right or left divisor can be found by the Euclidean algorithm as described in the previous section, where the "size" of the remainder ρ is measured by the norm, which is always an integer.

Thanks to Euler's four-square identity, the norm of a product of quaternions αβ equals the norm of α times the norm of β

- N(αβ) = N(α) N(β)
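The multiplicative property of the norm can be spot-checked numerically. The sketch below assumes quaternions are stored as 4-tuples of components (a0, a1, a2, a3); the names `qmul` and `qnorm` are illustrative, and `Fraction` is used so that half-integer Hurwitz quaternions stay exact.

```python
from fractions import Fraction


def qmul(p, q):
    """Hamilton product of two quaternions given as (a0, a1, a2, a3)."""
    a0, a1, a2, a3 = p
    b0, b1, b2, b3 = q
    return (
        a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
        a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
        a0 * b2 - a1 * b3 + a2 * b0 + a3 * b1,
        a0 * b3 + a1 * b2 - a2 * b1 + a3 * b0,
    )


def qnorm(p):
    """Norm N(p): the sum of the squares of the four components."""
    return sum(c * c for c in p)


if __name__ == "__main__":
    half = Fraction(1, 2)
    alpha = (1, 2, 3, 4)
    beta = (half, half, half, -half)  # a Hurwitz quaternion with half-integer components
    product = qmul(alpha, beta)
    print(qnorm(product) == qnorm(alpha) * qnorm(beta))
```

The same `qmul` function also shows the lack of commutativity: multiplying i by j gives k, while multiplying j by i gives −k.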

Since any natural number can be factored into powers of primes, and since the identity above implies that a product of two sums of four squares is again a sum of four squares, the four-square theorem holds for all natural numbers if it is true for all prime numbers. It is true for 2 = 0² + 0² + 1² + 1². To show this for an odd prime integer p, assume that p can be factored in the Hurwitz quaternions

- p = α β

The norms of p, α and β are all nonnegative integers such that

- N(p) = p² = N(αβ) = N(α) N(β)

But the norm p² has only two prime factors, both equal to p, so N(α) = N(β) = p when neither factor is a unit. Therefore, if p can be factored in Hurwitz quaternions, then p is the sum of four squares

- p = N(α) = a₀² + a₁² + a₂² + a₃²

Lagrange demonstrated that any odd prime p can divide some number of the form 1 + l² + m², where l and m are integers. This latter number can be factored in Hurwitz quaternions

- 1 + l² + m² = (1 + l i + m j) (1 − l i − m j)

If p could not be factored in Hurwitz quaternions, it would be a Hurwitz prime number by definition. Then, by unique factorization, p would have to divide either 1 + l i + m j or 1 − l i − m j to form another Hurwitz quaternion. But for p>2, the number

- 1/p ± l/p i ± m/p j

is not a Hurwitz integer. Therefore, every p>2 can be factored in Hurwitz quaternions, and the four-square theorem holds.
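Lagrange's auxiliary result, that every odd prime p divides some number of the form 1 + l² + m², can be illustrated by a brute-force search. The function name `lagrange_witness` is hypothetical. The search range 0 ≤ l, m ≤ (p − 1)/2 relies on the standard counting argument that the residues of l² and of −1 − m² must overlap modulo p, which is an assumption brought in here rather than a statement from the text above.

```python
def lagrange_witness(p):
    """Find (l, m) such that p divides 1 + l^2 + m^2, for an odd prime p."""
    half = (p - 1) // 2
    for l in range(half + 1):
        for m in range(half + 1):
            if (1 + l * l + m * m) % p == 0:
                return l, m
    return None  # not expected for an odd prime p


if __name__ == "__main__":
    for p in (3, 5, 7, 11, 13, 17, 19, 23):
        l, m = lagrange_witness(p)
        print(p, (l, m), 1 + l * l + m * m)
```

This search is quadratic in p and is meant only as an illustration of the statement, not as a practical method.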

Historical development

"[The Euclidean algorithm] is the granddaddy of all algorithms, because it is the oldest nontrivial algorithm that has survived to the present day."

Donald Knuth, The Art of Computer Programming, Vol. 2: Seminumerical Algorithms, 2nd edition (1981), p. 318.

The Euclidean algorithm is one of the oldest algorithms in the surviving historical record. It appeared in Euclid's Elements around 300 BC, specifically in Book 7 (Propositions 1–2) and Book 10 (Propositions 2–3). In Book 7, the algorithm is formulated for integers, whereas in Book 10, it is formulated for real numbers. The latter algorithm is geometrical; the GCD of two lengths a and b corresponds to the greatest length g that is commensurate with both.

The algorithm was probably not discovered by Euclid, who compiled many earlier results in his Elements.[52][53] The mathematician and historian B. L. van der Waerden suggests that Book VII derives from a textbook on number theory written by mathematicians in the school of Pythagoras.[54] The algorithm was likely known by Eudoxus of Cnidus (about 375 BC),[55][56] and Aristotle (about 330 BC) hints at it in his Topics, 158b, 29–35.

Euclid's algorithm was re-invented both in India and in China,[57] primarily to solve Diophantine equations that arise in astronomy and in making accurate calendars. In the late fifth century, the Indian mathematician and astronomer Aryabhata described the algorithm as the "pulverizer", and applied it to solving linear Diophantine equations.[58] Although a special case of the Chinese remainder theorem was described earlier by Chinese mathematician and astronomer Sun Tzu, the general solution was published by Qin Jiushao in his 1247 book Shushu Jiuzhang (數書九章 Mathematical Treatise in Nine Sections). The algorithm was first described in Europe in the second edition of Bachet's Problèmes plaisants et délectables (1624).[58] In Europe, it was likewise used to solve Diophantine equations and in developing continued fractions. The extended Euclidean algorithm was published by the English mathematician Nicholas Saunderson, who attributed it to Roger Cotes as a method for computing continued fractions efficiently.

In the 19th century, Carl Friedrich Gauss used the Euclidean algorithm to demonstrate unique factorization of Gaussian integers by 1815 (published 1832), although he did not mention the algorithm in his earlier work, Disquisitiones Arithmeticae (1801), except as a method for continued fractions.[59] Dirichlet seems to have been the first to describe the Euclidean algorithm as the basis for much of number theory. Dirichlet noted that many results of number theory, such as unique factorization, would hold true for any other system of numbers in which the Euclidean algorithm could be applied.[60] Dirichlet's insight likely inspired Richard Dedekind to develop theories for new types of numbers, the algebraic integers, and more generally Euclidean domains. For example, Dedekind was the first to prove Fermat's two-square theorem using the unique factorization of Gaussian integers.[61] However, through Dedekind's work, the importance of the Euclidean algorithm in number theory gradually became eclipsed by the more general theory of ideals.[62]

Other applications of Euclid's algorithm were developed in the 19th century. In 1829, Sturm showed that the algorithm provided an efficient method for counting the number of real roots of polynomials in any given interval.[63] A generalization of this result is known as Sturm's theorem.

The Euclidean algorithm was the first integer relation algorithm developed, that is, the first method for finding integer relations between commensurate real numbers. No new general algorithms were developed until 1979, when the Ferguson-Forcade algorithm was developed by Helaman Ferguson and R.W. Forcade.[64] Since that time, several others have been developed, such as the LLL algorithm, the HJLS algorithm, and the PSLQ algorithm. The PSLQ algorithm has been recognized as one of the top ten algorithms of the 20th century.[65]

See also

- Binary GCD algorithm

- Lehmer's GCD algorithm

- Extended Euclidean algorithm

- Greatest common divisor of two polynomials

References

- ^ Stark, p. 16.

- ^ Stark, p. 21.

- ^ LeVeque, p. 32.

- ^ Ore, p. 47.

- ^ LeVeque, p. 31.

- ^ Schroeder, pp. 21–22.

- ^ Schroeder, p. 19.

- ^ Ogilvy CS, Anderson JT (1966). Excursions in number theory. New York: Oxford University Press. pp. 27–29. LCCN 66-14484.

- ^ Schroeder, p. 22.

- ^ Lovász L, Pelikán J, Vesztergombi K (2003). Discrete Mathematics: Elementary and Beyond. New York: Springer-Verlag. pp. 100–101. ISBN 0-387-95584-4.

- ^ Knuth, p. 320.

- ^ a b Ore, p. 43.

- ^ a b Stewart BM (1964). Theory of Numbers (2nd ed.). New York: Macmillan. pp. 43–44. LCCN 64-10964.

- ^ LeVeque, p. 33.

- ^ Koshy T (2002). Elementary Number Theory with Applications. Burlington, MA: Harcourt/Academic Press. pp. 167–169. ISBN 0-12-421171-2.

- ^ Ore, p. 44.

- ^ Ore, p. 44.

- ^ Schroeder, pp. 106–107.

- ^ Schroeder, pp. 108–109.

- ^ Schroeder, pp. 107–109.

- ^ Stillwell, pp. 186–187.

- ^ Schroeder, p. 134.

- ^ Schroeder, pp. 194–195.

- ^ Vinogradov IM (1954). Elements of Number Theory. New York: Dover. pp. 3–13.

- ^ Lamé G (1844). "Note sur la limite du nombre des divisions dans la recherche du plus grand commun diviseur entre deux nombres entiers". Comptes Rendus Acad. Sci. 19: 867–870.

- ^ LeVeque, p. 35.

- ^ Ore, p. 45.

- ^ Knuth, p. 344.

- ^ Knuth, p. 343.

- ^ Mollin, p. 21.

- ^ Knuth, p. 343.

- ^ Mollin, pp. 21–22.

- ^ Knuth, p. 344.

- ^ Knuth, p. 353.

- ^ Knuth, p. 357.

- ^ Porter JW (1975). "On a Theorem of Heilbronn". Mathematika 22: 20–28.

- ^ Knuth DE (1976). Computers and Math. with Applic. 2: 137–139.

- ^ Dixon JD (1970). "The Number of Steps in the Euclidean Algorithm". J. Number Theory 2: 414–422.

- ^ Heilbronn HA (1969). Paul Turán. ed. Number Theory and Analysis. New York: Plenum. pp. 87–96.

- ^ Knuth, p. 354.

- ^ Knuth, p. 355.

- ^ Knuth, p. 355.

- ^ Knuth, p. 356.

- ^ R. Crandall & C. Pomerance. Prime Numbers - A Computational Perspective. Second Edition, Springer 2005.

- ^ N. Möller. On Schönhage's algorithm and subquadratic integer gcd computation, preprint.

- ^ a b Lang S (1984). Algebra (2nd ed.). Menlo Park, CA: Addison-Wesley. pp. 190–194. ISBN 0-201-05487-6.

- ^ Schroeder, pp. 254–259.

- ^ Gauss CF (1832). "Theoria residuorum biquadraticorum". Comm. Soc. Reg. Sci. Gött. Rec. 4. See also Werke, 2:67–148.

- ^ Stillwell J (2003). Elements of Number Theory. New York: Springer-Verlag. pp. 101–116. ISBN 0-387-95587-9.

- ^ Cohn H (1962). Advanced Number Theory. New York: Dover. pp. 104–110. ISBN 0-486-64023-X.

- ^ Stillwell J (2003). Elements of Number Theory. New York: Springer-Verlag. pp. 151–152. ISBN 0-387-95587-9.

- ^ Weil A (1983). Number Theory. Boston: Birkhäuser. pp. 4–6. ISBN 0-8176-3141-0.

- ^ Jones A (1994). "Greek mathematics to AD 300". Companion encyclopedia of the history and philosophy of the mathematical sciences. New York: Routledge. pp. 46–48. ISBN 0-415-09238-8.

- ^ van der Waerden BL (1954). Science Awakening. translated by Arnold Dresden. Groningen: P. Noordhoff Ltd. pp. 114–115.

- ^ Knuth, p. 318.

- ^ von Fritz K (1945). Ann. Math. 46: 242–264.

- ^ Stillwell, p. 31.

- ^ a b Tattersall JJ (2005). Elementary number theory in nine chapters. Cambridge: Cambridge University Press. p. 70. ISBN 978-0-521-85014-8.

- ^ Stillwell, p. 31.

- ^ Stillwell, pp. 31–32; Dirichlet, pp. 29–31.

- ^ Dedekind R (1894). "Supplement XI". in PGL Dirichlet. Vorlesungen über Zahlentheorie.

- ^ Stillwell J (2003). Elements of Number Theory. New York: Springer-Verlag. pp. 41–42. ISBN 0-387-95587-9.

- ^ Sturm C (1829). "Mémoire sur la résolution des équations numériques". Bull. des sciences de Férussac 11.

- ^ Eric W. Weisstein, Integer Relation at MathWorld.

- ^ The Best of the 20th Century: Editors Name Top 10 Algorithms by Barry A. Cipra; SIAM News, Volume 33, Number 4

Bibliography

- Cormen TH, Leiserson CE, Rivest RL, and Stein C (2001). Introduction to Algorithms (2nd ed.). MIT Press and McGraw–Hill. pp. 856–862 (Section 31.2). ISBN 0-262-03293-7.

- Cox D, Little J, and O'Shea D (1997). Ideals, Varieties, and Algorithms: An Introduction to Computational Algebraic Geometry and Commutative Algebra (2nd ed.). Springer-Verlag. pp. 29–46 (Chapter 1). ISBN 0-387-94680-2.

- Dirichlet PGL (1894). Richard Dedekind. ed. Vorlesungen über Zahlentheorie. Braunschweig: Vieweg.

- Hardy GH, Wright EM (1954). An Introduction to the Theory of Numbers (3rd ed.). Oxford: Clarendon Press. pp. 178–189.

- Kimberling C (1983). "A Visual Euclidean Algorithm". Mathematics Teacher 76: 108–109.

- Knuth D (1997). The Art of Computer Programming, Volume 2: Seminumerical Algorithms (3rd ed.). Addison-Wesley. pp. 333–379 (Sections 4.5.2–4.5.3). ISBN 0-201-89684-2.

- LeVeque WJ (1977). Fundamentals of Number Theory. New York: Dover. pp. 31–46. ISBN 0-486-68906-9.

- Mollin RA (2008). Fundamental Number Theory with Applications (2nd ed.). Boca Raton: Chapman & Hall/CRC. pp. 16–29. ISBN 978-1-4200-6659-3.

- Ore Ø (1948). Number Theory and Its History. New York: McGraw-Hill.

- Schroeder MR. Number Theory in Science and Communication (4th ed.). Springer-Verlag. pp. Chap. 2, pp. 17–25; Chap. 5, pp. 55–56, 81–86; Chap. 6, 87–98; Chap. 16, pp. 186–188; Chap. 24, pp. 253–258. ISBN 0-387-15800-6.

- Stark H (1978). An Introduction to Number Theory. MIT Press. pp. 16–33, 44–50 (Chap. 2); 145–163 (Chap. 5); 257–299 (Chap. 8). ISBN 0-262-69060-8.

- Stillwell J (1997). Numbers and Geometry. New York: Springer-Verlag. pp. 13–21, 30–32, 186–187, 230–233, 267–271. ISBN 0-387-98289-2.

- Uspensky JV, Heaslet MA (1939). Elementary Number Theory. New York: McGraw-Hill.

External links

- Euclid's Algorithm at cut-the-knot

- Binary Euclid's Algorithm (Java) at cut-the-knot

- Euclid's Game (Java) at cut-the-knot

- Eric W. Weisstein, Euclidean Algorithm at MathWorld.

- Eric W. Weisstein, Lamé's Theorem at MathWorld.

- Euclid's algorithm at PlanetMath.

- Music and Euclid's algorithm

- The Euclidean Algorithm at MathPages

- An animated implementation with arbitrary precision integer arithmetic

- Implementation in Javascript
