Binary heap

From Wikipedia, the free encyclopedia

A binary heap is a heap data structure created using a binary tree. It can be seen as a binary tree with two additional constraints:

- The shape property: the tree is a complete binary tree; that is, all levels of the tree, except possibly the last one (deepest) are fully filled, and, if the last level of the tree is not complete, the nodes of that level are filled from left to right.

- The heap property: each node is greater than or equal to each of its children according to some comparison predicate which is fixed for the entire data structure.

“Greater than” here means greater according to whatever comparison function is chosen to order the heap, not necessarily “greater than” in the mathematical sense (since the quantities are not always numerical). Heaps where the comparison function is mathematical “greater than” are called max-heaps; those where it is mathematical “less than” are called min-heaps. Conventionally, min-heaps are used, since they are readily applicable to priority queues.

Note that the heap property does not specify the order of siblings, so the two children of a parent can be freely interchanged, provided this does not violate the shape property or the heap property (compare with treap).

The binary heap is the special case d = 2 of the d-ary heap, in which each node can have up to d children (rather than just 2).
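As a rough illustration of how the d-ary generalisation changes the bookkeeping, the sketch below computes parent and child positions. It assumes the 0-based array layout described under Heap implementation; the helper names `dary_parent` and `dary_children` are hypothetical and chosen only for this example.

```python
def dary_parent(i, d):
    """Index of the parent of node i in a 0-based d-ary heap (i must be > 0)."""
    return (i - 1) // d


def dary_children(i, d):
    """Indices where the d children of node i would be stored."""
    return list(range(d * i + 1, d * i + d + 1))


# With d = 2 this reduces to the binary-heap formulas 2i+1 and 2i+2.
print(dary_children(0, 2), dary_children(1, 3))  # [1, 2] [4, 5, 6]
print(dary_parent(6, 3))  # 1
```

A caller still has to check each child index against the current heap size, since the last internal node may have fewer than d children.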

It is possible to modify the heap structure to allow extraction of both the smallest and the largest element in O(log n) time.[1] To do this, the rows alternate between min-heap and max-heap ordering. The algorithms are roughly the same, but each step must account for the alternating rows and their alternating comparisons. The performance is roughly the same as that of a normal single-direction heap. This idea can be generalised to a min-max-median heap.

Heap operations

Adding to the heap

If we add an element to a heap, we can restore the heap property with an operation known as up-heap, bubble-up, percolate-up, sift-up, or heapify-up. In a binary heap this takes O(log n) time, by following this algorithm:

- Add the element on the bottom level of the heap.

- Compare the added element with its parent; if they are in the correct order, stop.

- If not, swap the element with its parent and return to the previous step.

In the worst case we do this once for each level in the tree, that is, as many times as the height of the tree, which is O(log n). However, since approximately 50% of the elements are leaves and 75% are in the bottom two levels, a newly inserted element is likely to move only a few levels upwards to maintain the heap. Thus, binary heaps support insertion in average constant time, O(1).

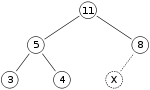

Say we have a max-heap

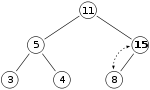

and we want to add the number 15 to the heap. We first place the 15 in the position marked by the X. However, the heap property is violated since 15 is greater than 8, so we need to swap the 15 and the 8. After the first swap, the heap looks as follows:

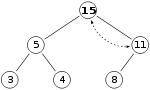

However, the heap property is still violated since 15 is greater than 11, so we need to swap again, which yields a valid max-heap.
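The three insertion steps, and the example of adding 15, can be sketched as follows. This is a minimal illustration, assuming the heap is stored in a 0-based Python list in which the parent of index i sits at (i − 1) // 2 (the array layout described under Heap implementation). The function name `heap_insert` is hypothetical, and the starting list `[11, 5, 8, 3, 4]` is an assumed layout consistent with the values 11, 8 and 4 mentioned in the text; the positions of 5 and 3 are assumptions, since the figure is not reproduced here.

```python
def heap_insert(heap, value):
    """Insert value into a max-heap stored as a 0-based list; return the swap count."""
    heap.append(value)                 # step 1: add on the bottom level
    i = len(heap) - 1
    swaps = 0
    while i > 0:
        parent = (i - 1) // 2
        if heap[parent] >= heap[i]:    # step 2: correct order, stop
            break
        # step 3: swap with the parent and compare again one level up
        heap[parent], heap[i] = heap[i], heap[parent]
        i = parent
        swaps += 1
    return swaps


heap = [11, 5, 8, 3, 4]                # assumed layout of the example max-heap
swaps = heap_insert(heap, 15)
print(heap, swaps)  # [15, 5, 11, 3, 4, 8] 2
```

The two swaps correspond to the exchanges with 8 and then with 11 described above.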

Deleting the root from the heap

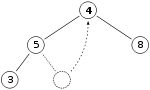

The procedure for deleting the root from the heap (effectively extracting the maximum element in a max-heap or the minimum element in a min-heap) starts by replacing it with the last element on the last level. So, if we have the same max-heap as before, we remove the 11 and replace it with the 4.

Now the heap property is violated since 8 is greater than 4. The operation that restores the property is called down-heap, bubble-down, percolate-down, sift-down, or heapify-down. In this case, swapping the two elements 4 and 8 is enough to restore the heap property, and we need not swap elements further.

In general, the downward-moving node is swapped with its larger child in a max-heap (in a min-heap it would be swapped with its smaller child), until it satisfies the heap property in its new position. Note that the down-heap operation (without the preceding swap) can be used in general to modify the value of the root, even when an element is not being deleted.
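The deletion procedure can be sketched in the same style. This is an illustrative sketch only, assuming a max-heap stored in a 0-based Python list with the children of index i at 2i+1 and 2i+2; the name `extract_root` is hypothetical, and the list `[11, 5, 8, 3, 4]` is an assumed layout of the example heap (the positions of 5 and 3 are not given in the text).

```python
def extract_root(heap):
    """Remove and return the root of a max-heap stored as a 0-based list."""
    if not heap:
        raise IndexError("extract_root from an empty heap")
    root = heap[0]
    last = heap.pop()                  # last element on the last level
    if heap:
        heap[0] = last                 # it replaces the root
        i, n = 0, len(heap)
        while True:                    # down-heap
            left, right = 2 * i + 1, 2 * i + 2
            largest = i
            if left < n and heap[left] > heap[largest]:
                largest = left
            if right < n and heap[right] > heap[largest]:
                largest = right
            if largest == i:           # heap property holds here, stop
                break
            heap[i], heap[largest] = heap[largest], heap[i]
            i = largest                # continue from the larger child
    return root


heap = [11, 5, 8, 3, 4]                # assumed layout of the example max-heap
print(extract_root(heap), heap)  # 11 [8, 5, 4, 3]
```

As in the text, the 4 moves to the root and a single swap with the 8 restores the heap property.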

Building a heap

A heap could be built by successive insertions. This approach requires O(n log n) time in the worst case, because each insertion takes O(log n) time and there are n elements. However, this is not the optimal method. The optimal method starts by placing the elements in arbitrary order on a binary tree that respects the shape property (which could be represented by an array, see below). Then, starting from the lowest level and moving upwards, the root of each subtree is shifted downward as in the deletion algorithm until the heap property is restored. More specifically, if all the subtrees starting at some height h (measured from the bottom) have already been "heapified", the trees at height h+1 can be heapified by sending their root down along the path of maximum children. For a subtree of height h, this process takes O(h) operations (swaps). In this method most of the heapification takes place in the lower levels. The number of nodes at height h is at most $\lceil n / 2^{h+1} \rceil$. Therefore, the cost of heapifying all subtrees is:

$$\sum_{h=0}^{\lfloor \log_2 n \rfloor} \left\lceil \frac{n}{2^{h+1}} \right\rceil O(h) = O\!\left(n \sum_{h=0}^{\lfloor \log_2 n \rfloor} \frac{h}{2^{h}}\right) = O\!\left(n \sum_{h=0}^{\infty} \frac{h}{2^{h}}\right) = O(n)$$

The last step uses the fact that the infinite series $\sum_{h=0}^{\infty} h / 2^{h}$ converges (its value is 2).
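A bottom-up construction along these lines might look like the sketch below. It assumes a max-heap in a 0-based Python list; the names `sift_down` and `build_max_heap` are hypothetical, and the swap counter is included only to make the linear bound observable.

```python
def sift_down(a, i, n):
    """Move a[i] down within a[0:n] until neither child is larger; return swaps."""
    swaps = 0
    while True:
        left, right = 2 * i + 1, 2 * i + 2
        largest = i
        if left < n and a[left] > a[largest]:
            largest = left
        if right < n and a[right] > a[largest]:
            largest = right
        if largest == i:
            return swaps
        a[i], a[largest] = a[largest], a[i]
        i = largest
        swaps += 1


def build_max_heap(a):
    """Heapify the list a in place, bottom-up; return the total number of swaps."""
    n = len(a)
    total = 0
    # Indices n//2 .. n-1 are leaves, so start at the last internal node.
    for i in range(n // 2 - 1, -1, -1):
        total += sift_down(a, i, n)
    return total


data = [3, 5, 8, 4, 11, 15]
swaps = build_max_heap(data)
print(data, swaps)  # [15, 11, 8, 4, 5, 3] 4
```

Each call to `sift_down` costs at most the height of the subtree it starts from, so the total number of swaps is bounded by the sum of the subtree heights, which is the quantity bounded by O(n) above.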

Heap implementation

It is perfectly acceptable to use a traditional binary tree data structure to implement a binary heap. When adding an element, however, there is the issue of finding the adjacent element on the last level of the heap. This can be resolved algorithmically or by adding extra data to the nodes, called “threading” the tree: instead of merely storing references to the children, we also store the inorder successor of each node.

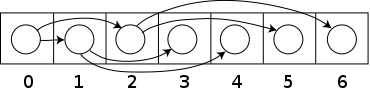

However, a more common approach, and an approach aligned with the theory behind heaps, is to store the heap in an array. Any binary tree can be stored in an array, but because a heap is always an almost complete binary tree, it can be stored compactly. No space is required for pointers; instead, the parent and children of each node can be found by simple arithmetic on array indices. Details depend on the root position (which in turn may depend on constraints of a programming language used for implementation). If the tree root item has index 0 (n tree elements are a[0] .. a[n−1]), then for each index i, element a[i] has children a[2i+1] and a[2i+2], and the parent a[floor((i−1)/2)], as shown in the figure. If the root is a[1] (tree elements are a[1] .. a[n]), then for each index i, element a[i] has children a[2i] and a[2i+1], and the parent a[floor(i/2)]. This is a simple example of an implicit data structure.
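The index arithmetic for the root-at-0 layout can be written down directly. The helpers below are a small sketch with hypothetical names (`parent`, `children`, `is_max_heap`); the last one checks the heap property of a list by comparing every non-root element with its parent.

```python
def parent(i):
    """Index of the parent of a[i] when the root is a[0] (i must be > 0)."""
    return (i - 1) // 2


def children(i):
    """Indices of the left and right child of a[i] when the root is a[0]."""
    return 2 * i + 1, 2 * i + 2


def is_max_heap(a):
    """True if every element is less than or equal to its parent."""
    return all(a[parent(i)] >= a[i] for i in range(1, len(a)))


print(children(0), parent(4))          # (1, 2) 1
print(is_max_heap([11, 5, 8, 3, 4]))   # True
print(is_max_heap([11, 5, 8, 3, 7]))   # False
```

The child indices returned by `children` may lie beyond the end of the array, so a caller has to compare them with the heap size before using them.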

This approach is particularly useful in the heapsort algorithm, where it allows the space in the input array to be reused to store the heap (i.e. the algorithm is in-place). However, it requires allocating the array before filling it, which makes this method less convenient for priority queue implementations, where the number of tasks (heap elements) is not necessarily known in advance.
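To show how the input array doubles as the heap, here is a compact heapsort sketch. It assumes a 0-based Python list and a max-heap; the function name `heapsort` and the nested helper `sift_down` are illustrative choices, not taken from the text.

```python
def heapsort(a):
    """Sort the list a in ascending order, in place, using a max-heap built inside a."""

    def sift_down(i, n):
        # Restore the heap property for the subtree rooted at i within a[0:n].
        while True:
            left, right = 2 * i + 1, 2 * i + 2
            largest = i
            if left < n and a[left] > a[largest]:
                largest = left
            if right < n and a[right] > a[largest]:
                largest = right
            if largest == i:
                return
            a[i], a[largest] = a[largest], a[i]
            i = largest

    n = len(a)
    for i in range(n // 2 - 1, -1, -1):   # build the heap inside the input array
        sift_down(i, n)
    for end in range(n - 1, 0, -1):
        a[0], a[end] = a[end], a[0]       # move the current maximum to its final slot
        sift_down(0, end)                 # re-heapify the shrunken prefix a[0:end]


values = [8, 3, 15, 4, 11, 5]
heapsort(values)
print(values)  # [3, 4, 5, 8, 11, 15]
```

No second array is allocated: the heap occupies a shrinking prefix of the list while the sorted elements accumulate at the end.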

The down-heap (sift-down) operation can then be stated in terms of an array as follows, taking the root to be a[1]: suppose that the heap property holds for the indices b, b+1, ..., e. The sift-down function extends the heap property to b-1, b, b+1, ..., e. Only index i = b-1 can violate the heap property. Let j be the index of the largest child of a[i] (for a max-heap, or the smallest child for a min-heap) within the range b, ..., e. (If no such index exists because 2i > e, then the heap property holds for the newly extended range and nothing needs to be done.) If a[i] is already in the correct order with respect to a[j], nothing needs to be done either. Otherwise, swapping the values a[i] and a[j] establishes the heap property for position i. At this point, the only problem is that the heap property might not hold for index j. The sift-down function is applied tail-recursively to index j until the heap property is established for all elements.

The sift-down function is fast. In each step it needs only two comparisons and one swap. The index at which it is working at least doubles in each iteration, so that at most log₂ e steps are required.

The merge operation in a binary heap takes Θ(n) time for equal-sized heaps. The best you can do (in the case of an array implementation) is to concatenate the two heap arrays and build a heap from the result.[1] When merging is a common task, a different heap implementation is recommended, such as binomial heaps, which can be merged in O(log n) time.
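The concatenate-and-rebuild merge can be illustrated with Python's standard `heapq` module. Note the assumption that differs from the earlier examples in this article: `heapq` maintains min-heaps, not max-heaps. The function name `merge_heaps` is hypothetical.

```python
import heapq


def merge_heaps(h1, h2):
    """Merge two array-based min-heaps by concatenating and re-heapifying."""
    merged = list(h1) + list(h2)   # concatenate the two heap arrays
    heapq.heapify(merged)          # linear-time bottom-up heap construction
    return merged


a = [1, 4, 9]                      # a valid min-heap
b = [2, 3, 7]                      # another valid min-heap
m = merge_heaps(a, b)
print(m[0])  # 1
print([heapq.heappop(m) for _ in range(len(m))])  # [1, 2, 3, 4, 7, 9]
```

The inputs are copied and left untouched; the rebuild does not exploit the fact that each half is already a heap, which is why the cost stays linear in the combined size.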

Notes

- [1] Atkinson, M.D., J.-R. Sack, N. Santoro, and T. Strothotte (1986-10-01). "Min-max heaps and generalized priority queues". Programming techniques and Data structures. Comm. ACM, 29(10): 996-1000. http://cg.scs.carleton.ca/~morin/teaching/5408/refs/minmax.pdf