Discrete Fourier transform

From Wikipedia, the free encyclopedia


In mathematics, the discrete Fourier transform (DFT) is one of the specific forms of Fourier analysis. It transforms one function into another, which is called the frequency domain representation, or simply the DFT, of the original function (which is often a function in the time domain). But the DFT requires an input function that is discrete and whose non-zero values have a limited (finite) duration. Such inputs are often created by sampling a continuous function, like a person's voice. And unlike the discrete-time Fourier transform (DTFT), it only evaluates enough frequency components to reconstruct the finite segment that was analyzed. Its inverse transform cannot reproduce the entire time domain, unless the input happens to be periodic (forever). Therefore it is often said that the DFT is a transform for Fourier analysis of finite-domain discrete-time functions. The sinusoidal basis functions of the decomposition have the same properties.

Since the input function is a finite sequence of real or complex numbers, the DFT is ideal for processing information stored in computers. In particular, the DFT is widely employed in signal processing and related fields to analyze the frequencies contained in a sampled signal, to solve partial differential equations, and to perform other operations such as convolutions. The DFT can be computed efficiently in practice using a fast Fourier transform (FFT) algorithm.

Since FFT algorithms are so commonly employed to compute the DFT, the two terms are often used interchangeably in colloquial settings, although there is a clear distinction: "DFT" refers to a mathematical transformation, regardless of how it is computed, while "FFT" refers to any one of several efficient algorithms for the DFT. This distinction is further blurred, however, by the synonym finite Fourier transform for the DFT, which apparently predates the term "fast Fourier transform" (Cooley et al., 1969) but has the same initialism.

[edit] Definition

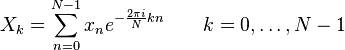

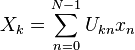

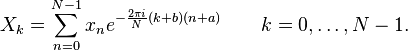

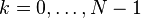

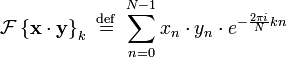

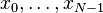

The sequence of N complex numbers x0, ..., xN−1 is transformed into the sequence of N complex numbers X0, ..., XN−1 by the DFT according to the formula:

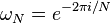

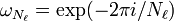

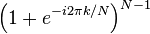

where  is a primitive N'th root of unity.

is a primitive N'th root of unity.

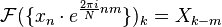

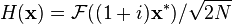

The transform is sometimes denoted by the symbol  , as in

, as in  or

or  or

or  .

.

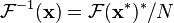

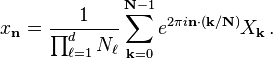

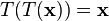

The inverse discrete Fourier transform (IDFT) is given by

A simple description of these equations is that the complex numbers $X_k$ represent the amplitude and phase of the different sinusoidal components of the input "signal" $x_n$. The DFT computes the $X_k$ from the $x_n$, while the IDFT shows how to compute the $x_n$ as a sum of sinusoidal components $\frac{1}{N} X_k e^{\frac{2\pi i}{N} k n}$ with frequency k / N cycles per sample. By writing the equations in this form, we are making extensive use of Euler's formula to express sinusoids in terms of complex exponentials, which are much easier to manipulate. (In the same way, by writing $X_k$ in polar form, we immediately obtain the sinusoid amplitude from $|X_k|$ and the phase from the complex argument.) An important subtlety of this representation, aliasing, is discussed below.

Note that the normalization factor multiplying the DFT and IDFT (here 1 and 1/N) and the signs of the exponents are merely conventions, and differ in some treatments. The only requirements of these conventions are that the DFT and IDFT have opposite-sign exponents and that the product of their normalization factors be 1/N. A normalization of $1/\sqrt{N}$ for both the DFT and IDFT makes the transforms unitary, which has some theoretical advantages, but it is often more practical in numerical computation to perform the scaling all at once as above (and a unit scaling can be convenient in other ways).

(The convention of a negative sign in the exponent is often convenient because it means that Xk is the amplitude of a "positive frequency" 2πk / N. Equivalently, the DFT is often thought of as a matched filter: when looking for a frequency of +1, one correlates the incoming signal with a frequency of −1.)

In the following discussion the terms "sequence" and "vector" will be considered interchangeable.
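The definitions above can be sketched directly in code. The following is a minimal illustration that assumes the convention used in this article (factor 1 on the DFT, 1/N on the IDFT, negative exponent on the forward transform). The function names `dft` and `idft` are chosen here for illustration, and the direct summation takes O(N²) operations, so it is not a substitute for an FFT.

```python
import cmath

def dft(x):
    """Forward DFT: X_k = sum_n x_n * exp(-2*pi*i*k*n/N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def idft(X):
    """Inverse DFT: x_n = (1/N) * sum_k X_k * exp(+2*pi*i*k*n/N)."""
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

x = [1.0, 2.0, 0.0, -1.0]
X = dft(x)          # frequency-domain representation
x_back = idft(X)    # should reproduce x up to rounding error
```

Here `abs(X[k])` gives the amplitude and `cmath.phase(X[k])` the phase of the component at k / N cycles per sample.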

Properties

Completeness

The discrete Fourier transform is an invertible, linear transformation

$$\mathcal{F} : \mathbb{C}^N \to \mathbb{C}^N$$

with $\mathbb{C}$ denoting the set of complex numbers. In other words, for any N > 0, an N-dimensional complex vector has a DFT and an IDFT which are in turn N-dimensional complex vectors.

Orthogonality

The vectors $e^{\frac{2\pi i}{N} k n}$ (indexed by n, one vector for each k) form an orthogonal basis over the set of N-dimensional complex vectors:

$$\sum_{n=0}^{N-1} \left(e^{\frac{2\pi i}{N} k n}\right) \left(e^{-\frac{2\pi i}{N} k' n}\right) = N\,\delta_{kk'}$$

where $\delta_{kk'}$ is the Kronecker delta. This orthogonality condition can be used to derive the formula for the IDFT from the definition of the DFT, and is equivalent to the unitarity property below.

The Plancherel theorem and Parseval's theorem

If $X_k$ and $Y_k$ are the DFTs of $x_n$ and $y_n$ respectively then the Plancherel theorem states:

$$\sum_{n=0}^{N-1} x_n y_n^* = \frac{1}{N} \sum_{k=0}^{N-1} X_k Y_k^*$$

where the star denotes complex conjugation. Parseval's theorem is a special case of the Plancherel theorem and states:

$$\sum_{n=0}^{N-1} |x_n|^2 = \frac{1}{N} \sum_{k=0}^{N-1} |X_k|^2.$$

These theorems are also equivalent to the unitary condition below.
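As a numerical illustration, the two identities can be checked on small example vectors. The sketch below assumes the unnormalized forward convention of this article, which is why the factor 1/N appears on the frequency side. The sample data and variable names are arbitrary.

```python
import cmath

def dft(x):
    """Direct O(N^2) DFT with the exp(-2*pi*i*k*n/N) convention."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

x = [1 + 2j, -1j, 3 + 0j, 0.5 - 0.5j]
y = [2 + 0j, 1 + 1j, -1 + 0j, 4j]
N = len(x)
X, Y = dft(x), dft(y)

# Plancherel: inner product in time equals (1/N) * inner product in frequency.
inner_time = sum(a * b.conjugate() for a, b in zip(x, y))
inner_freq = sum(A * B.conjugate() for A, B in zip(X, Y)) / N

# Parseval: the special case y = x (conservation of "energy").
energy_time = sum(abs(a) ** 2 for a in x)
energy_freq = sum(abs(A) ** 2 for A in X) / N
```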

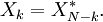

[edit] Periodicity

If the expression that defines the DFT is evaluated for all integers k instead of just for  , then the resulting infinite sequence is a periodic extension of the DFT, periodic with period N.

, then the resulting infinite sequence is a periodic extension of the DFT, periodic with period N.

The periodicity can be shown directly from the definition:

where we have used the fact that e − 2πi = 1. In the same way it can be shown that the IDFT formula leads to a periodic extension.

[edit] The shift theorem

Multiplying xn by a linear phase  for some integer m corresponds to a circular shift of the output Xk: Xk is replaced by Xk − m, where the subscript is interpreted modulo N (i.e., periodically). Similarly, a circular shift of the input xn corresponds to multiplying the output Xk by a linear phase. Mathematically, if {xn} represents the vector x then

for some integer m corresponds to a circular shift of the output Xk: Xk is replaced by Xk − m, where the subscript is interpreted modulo N (i.e., periodically). Similarly, a circular shift of the input xn corresponds to multiplying the output Xk by a linear phase. Mathematically, if {xn} represents the vector x then

- if

- then

- and

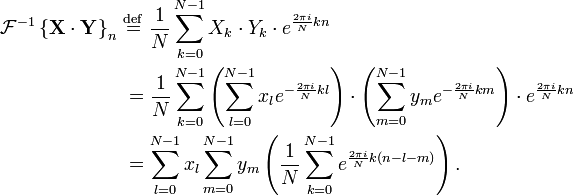
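Both halves of the shift theorem can be illustrated numerically. The sketch below assumes the forward convention with a negative exponent; the variable names and the sample data are chosen for illustration only.

```python
import cmath

def dft(x):
    """Direct O(N^2) DFT with the exp(-2*pi*i*k*n/N) convention."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

x = [1 + 0j, 2 - 1j, 0.5j, -3 + 0j, 4 + 2j]
N, m = len(x), 2
X = dft(x)

# Multiply the input by a linear phase: the output is circularly shifted by m.
modulated = [x[n] * cmath.exp(2j * cmath.pi * n * m / N) for n in range(N)]
X_modulated = dft(modulated)
expected_shifted_output = [X[(k - m) % N] for k in range(N)]

# Circularly shift the input by m: the output is multiplied by a linear phase.
shifted = [x[(n - m) % N] for n in range(N)]
X_shifted = dft(shifted)
expected_phase_output = [X[k] * cmath.exp(-2j * cmath.pi * k * m / N) for k in range(N)]
```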

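Circular convolution theorem and cross-correlation theorem

The convolution theorem for the continuous and discrete time Fourier transforms indicates that a convolution of two infinite sequences can be obtained as the inverse transform of the product of the individual transforms. With sequences and transforms of length N, a circularity arises:

$$\begin{aligned}
\mathcal{F}^{-1}\{\mathbf{X} \cdot \mathbf{Y}\}_n
&= \frac{1}{N} \sum_{k=0}^{N-1} X_k Y_k e^{\frac{2\pi i}{N} k n} \\
&= \frac{1}{N} \sum_{k=0}^{N-1} \left(\sum_{l=0}^{N-1} x_l e^{-\frac{2\pi i}{N} k l}\right) \left(\sum_{m=0}^{N-1} y_m e^{-\frac{2\pi i}{N} k m}\right) e^{\frac{2\pi i}{N} k n} \\
&= \sum_{l=0}^{N-1} x_l \sum_{m=0}^{N-1} y_m \left(\frac{1}{N} \sum_{k=0}^{N-1} e^{\frac{2\pi i}{N} k (n-l-m)}\right).
\end{aligned}$$

The quantity in parentheses is 0 for all values of m except those of the form n − l − pN, where p is any integer. At those values, it is 1. It can therefore be replaced by an infinite sum of Kronecker delta functions, and we continue accordingly. Note that we can also extend the limits of m to infinity, with the understanding that the x and y sequences are defined as 0 outside [0, N−1]:

$$\begin{aligned}
\mathcal{F}^{-1}\{\mathbf{X} \cdot \mathbf{Y}\}_n
&= \sum_{l=0}^{N-1} x_l \sum_{m=-\infty}^{\infty} y_m \left(\sum_{p=-\infty}^{\infty} \delta_{m,(n-l-pN)}\right) \\
&= \sum_{l=0}^{N-1} x_l \left(\sum_{p=-\infty}^{\infty} y_{n-l-pN}\right)
\ \stackrel{\mathrm{def}}{=}\ (\mathbf{x} * \mathbf{y_N})_n,
\end{aligned}$$

which is the convolution of the $\mathbf{x}$ sequence with a periodically extended $\mathbf{y}$ sequence defined by:

$$(\mathbf{y_N})_n \ \stackrel{\mathrm{def}}{=}\ \sum_{p=-\infty}^{\infty} y_{n-pN} = y_{n \bmod N}.$$

Similarly, it can be shown that:

$$\mathcal{F}^{-1}\{\mathbf{X}^* \cdot \mathbf{Y}\}_n = \sum_{l=0}^{N-1} x_l^* \, (\mathbf{y_N})_{n+l} \ \stackrel{\mathrm{def}}{=}\ (\mathbf{x} \star \mathbf{y_N})_n,$$

which is the cross-correlation of $\mathbf{x}$ and $\mathbf{y_N}$.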

A direct evaluation of the convolution or correlation summation (above) requires $O(N^2)$ operations for an output sequence of length N. An indirect method, using transforms, can take advantage of the $O(N \log N)$ efficiency of the fast Fourier transform (FFT) to achieve much better performance. Furthermore, convolutions can be used to efficiently compute DFTs via Rader's FFT algorithm and Bluestein's FFT algorithm.
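The equivalence of the direct and the transform-based evaluation can be sketched as follows. The function names are illustrative, and the transforms are computed here by direct summation for clarity, so this sketch does not itself achieve the O(N log N) cost; an FFT routine would replace `dft` and `idft` in practice.

```python
import cmath

def dft(x):
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def idft(X):
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

def circular_convolve_direct(x, y):
    """(x * y_N)_n = sum_l x_l * y_{(n - l) mod N}, evaluated term by term."""
    N = len(x)
    return [sum(x[l] * y[(n - l) % N] for l in range(N)) for n in range(N)]

def circular_convolve_dft(x, y):
    """Same result via the convolution theorem: IDFT of the elementwise product."""
    X, Y = dft(x), dft(y)
    return idft([a * b for a, b in zip(X, Y)])

x = [1, 2, 3, 4]
y = [1, 0, 0, 1]
direct = circular_convolve_direct(x, y)
via_dft = circular_convolve_dft(x, y)
```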

Methods have also been developed to use circular convolution as part of an efficient process that achieves normal (non-circular) convolution with an $\mathbf{x}$ or $\mathbf{y}$ sequence potentially much longer than the practical transform size (N). Two such methods are called overlap-save and overlap-add[1].

[edit] Convolution theorem duality

It can also be shown that:

which is the circular convolution of

which is the circular convolution of  and

and  .

.

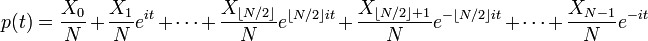

[edit] Trigonometric interpolation polynomial

The trigonometric interpolation polynomial

for N even ,

for N even , for N odd,

for N odd,

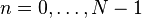

where the coefficients Xk /N are given by the DFT of xn above, satisfies the interpolation property p(2πn / N) = xn for  .

.

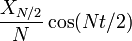

For even N, notice that the Nyquist component  is handled specially.

is handled specially.

This interpolation is not unique: aliasing implies that one could add N to any of the complex-sinusoid frequencies (e.g. changing e − it to ei(N − 1)t ) without changing the interpolation property, but giving different values in between the xn points. The choice above, however, is typical because it has two useful properties. First, it consists of sinusoids whose frequencies have the smallest possible magnitudes, and therefore minimizes the mean-square slope  of the interpolating function. Second, if the xn are real numbers, then p(t) is real as well.

of the interpolating function. Second, if the xn are real numbers, then p(t) is real as well.

In contrast, the most obvious trigonometric interpolation polynomial is the one in which the frequencies range from 0 to N − 1 (instead of roughly − N / 2 to + N / 2 as above), similar to the inverse DFT formula. This interpolation does not minimize the slope, and is not generally real-valued for real xn; its use is a common mistake.
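The minimal-frequency interpolant can be sketched in code by mapping each index k to a signed frequency in roughly −N/2 to +N/2 and treating the Nyquist term separately for even N. The function name `trig_interp` and the sample data are illustrative assumptions.

```python
import cmath
import math

def dft(x):
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def trig_interp(x, t):
    """Evaluate the trigonometric interpolation polynomial p(t) for samples x."""
    N = len(x)
    X = dft(x)
    total = 0j
    for k in range(N):
        if N % 2 == 0 and k == N // 2:
            # Nyquist component: a cosine instead of a complex exponential.
            total += X[k] * math.cos(N * t / 2)
        else:
            # Indices above N/2 are aliased to negative frequencies k - N.
            freq = k if k < N / 2 else k - N
            total += X[k] * cmath.exp(1j * freq * t)
    return total / N

samples_even = [1.0, -2.0, 0.5, 3.0, 0.0, 1.5]
samples_odd = [2.0, 0.0, -1.0, 4.0, 1.0]
value_between_points = trig_interp(samples_even, 0.3)  # real up to rounding error
```

Using frequencies 0 to N − 1 for every k instead would still pass through the sample points, but `value_between_points` would in general acquire a nonzero imaginary part for real samples.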

[edit] The unitary DFT

Another way of looking at the DFT is to note that in the above discussion, the DFT can be expressed as a Vandermonde matrix:

where

is a primitive Nth root of unity. The inverse transform is then given by the inverse of the above matrix:

With unitary normalization constants  , the DFT becomes a unitary transformation, defined by a unitary matrix:

, the DFT becomes a unitary transformation, defined by a unitary matrix:

where det() is the determinant function. The determinant is the product of the eigenvalues, which are always  or

or  as described below. In a real vector space, a unitary transformation can be thought of as simply a rigid rotation of the coordinate system, and all of the properties of a rigid rotation can be found in the unitary DFT.

as described below. In a real vector space, a unitary transformation can be thought of as simply a rigid rotation of the coordinate system, and all of the properties of a rigid rotation can be found in the unitary DFT.

The orthogonality of the DFT is now expressed as an orthonormality condition (which arises in many areas of mathematics as described in root of unity):

If  is defined as the unitary DFT of the vector

is defined as the unitary DFT of the vector  then

then

and the Plancherel theorem is expressed as:

If we view the DFT as just a coordinate transformation which simply specifies the components of a vector in a new coordinate system, then the above is just the statement that the dot product of two vectors is preserved under a unitary DFT transformation. For the special case  , this implies that the length of a vector is preserved as well—this is just Parseval's theorem:

, this implies that the length of a vector is preserved as well—this is just Parseval's theorem:

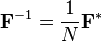

[edit] Expressing the inverse DFT in terms of the DFT

A useful property of the DFT is that the inverse DFT can be easily expressed in terms of the (forward) DFT, via several well-known "tricks". (For example, in computations, it is often convenient to only implement a fast Fourier transform corresponding to one transform direction and then to get the other transform direction from the first.)

First, we can compute the inverse DFT by reversing the inputs:

(As usual, the subscripts are interpreted modulo N; thus, for n = 0, we have xN − 0 = x0.)

Second, one can also conjugate the inputs and outputs:

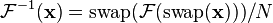

Third, a variant of this conjugation trick, which is sometimes preferable because it requires no modification of the data values, involves swapping real and imaginary parts (which can be done on a computer simply by modifying pointers). Define swap(xn) as xn with its real and imaginary parts swapped—that is, if xn = a + bi then swap(xn) is b + ai. Equivalently, swap(xn) equals  . Then

. Then

That is, the inverse transform is the same as the forward transform with the real and imaginary parts swapped for both input and output, up to a normalization (Duhamel et al., 1988).
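The three tricks can be sketched with a single forward routine. The function names below are illustrative; each one is intended to return the inverse DFT of its argument using only the forward `dft`.

```python
import cmath

def dft(x):
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def idft_by_reversal(X):
    """Reverse the input indices modulo N, transform, divide by N."""
    N = len(X)
    return [v / N for v in dft([X[(-n) % N] for n in range(N)])]

def idft_by_conjugation(X):
    """Conjugate the input, transform, conjugate the output, divide by N."""
    N = len(X)
    return [v.conjugate() / N for v in dft([complex(c).conjugate() for c in X])]

def swap(z):
    """Exchange real and imaginary parts: a + bi -> b + ai."""
    z = complex(z)
    return complex(z.imag, z.real)

def idft_by_swap(X):
    """Swap parts of the input, transform, swap parts of the output, divide by N."""
    N = len(X)
    return [swap(v) / N for v in dft([swap(c) for c in X])]

original = [1 + 1j, 2 + 0j, -0.5j, 3 - 2j, 0.25 + 0j]
spectrum = dft(original)
recovered = [idft_by_reversal(spectrum),
             idft_by_conjugation(spectrum),
             idft_by_swap(spectrum)]
```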

The conjugation trick can also be used to define a new transform, closely related to the DFT, that is involutory, that is, which is its own inverse. In particular, $T(\mathbf{x}) = \mathcal{F}(\mathbf{x}^*) / \sqrt{N}$ is clearly its own inverse: $T(T(\mathbf{x})) = \mathbf{x}$. A closely related involutory transformation (by a factor of $(1+i)/\sqrt{2}$) is $H(\mathbf{x}) = \mathcal{F}((1+i)\,\mathbf{x}^*) / \sqrt{2N}$, since the (1 + i) factors in $H(H(\mathbf{x}))$ cancel the 2. For real inputs $\mathbf{x}$, the real part of $H(\mathbf{x})$ is none other than the discrete Hartley transform, which is also involutory.

Eigenvalues and eigenvectors

The eigenvalues of the DFT matrix are simple and well-known, whereas the eigenvectors are complicated, not unique, and are the subject of ongoing research.

Consider the unitary form $\mathbf{U}$ defined above for the DFT of length N, where

$$U_{m,n} = \frac{1}{\sqrt{N}} \omega_N^{(m-1)(n-1)} = \frac{1}{\sqrt{N}} e^{-\frac{2\pi i}{N} (m-1)(n-1)}.$$

This matrix satisfies the equation:

$$\mathbf{U}^4 = \mathbf{I}.$$

This can be seen from the inverse properties above: operating $\mathbf{U}$ twice gives the original data in reverse order, so operating $\mathbf{U}$ four times gives back the original data and is thus the identity matrix. This means that the eigenvalues λ satisfy a characteristic equation:

- $\lambda^4 = 1$.

Therefore, the eigenvalues of $\mathbf{U}$ are the fourth roots of unity: λ is +1, −1, +i, or −i.

Since there are only four distinct eigenvalues for this $N \times N$ matrix, they have some multiplicity. The multiplicity gives the number of linearly independent eigenvectors corresponding to each eigenvalue. (Note that there are N independent eigenvectors; a unitary matrix is never defective.)

The problem of their multiplicity was solved by McClellan and Parks (1972), although it was later shown to have been equivalent to a problem solved by Gauss (Dickinson and Steiglitz, 1982). The multiplicity depends on the value of N modulo 4, and is given by the following table:

| size N | λ = +1 | λ = −1 | λ = −i | λ = +i |
|---|---|---|---|---|
| 4m | m + 1 | m | m | m − 1 |
| 4m + 1 | m + 1 | m | m | m |
| 4m + 2 | m + 1 | m + 1 | m | m |
| 4m + 3 | m + 1 | m + 1 | m + 1 | m |
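One way to sanity-check the table without computing eigenvectors is to compare traces: the trace of the unitary DFT matrix (the sum of its diagonal entries) must equal the sum of its eigenvalues counted with multiplicity. The sketch below assumes the forward convention $\omega_N = e^{-2\pi i/N}$ with 1/√N normalization; the function names are illustrative. Agreement of the traces is a consistency check only, not a derivation of the multiplicities.

```python
import cmath
import math

def multiplicities(N):
    """Multiplicities of the eigenvalues (+1, -1, -i, +i), read off the table."""
    m, r = divmod(N, 4)
    table = {
        0: (m + 1, m, m, m - 1),
        1: (m + 1, m, m, m),
        2: (m + 1, m + 1, m, m),
        3: (m + 1, m + 1, m + 1, m),
    }
    return table[r]

def unitary_dft_trace(N):
    """Trace of U: diagonal entries are exp(-2*pi*i*k*k/N) / sqrt(N)."""
    return sum(cmath.exp(-2j * cmath.pi * k * k / N) for k in range(N)) / math.sqrt(N)

def trace_from_table(N):
    """Sum of eigenvalues weighted by the multiplicities in the table."""
    plus_one, minus_one, minus_i, plus_i = multiplicities(N)
    return (plus_one - minus_one) + 1j * (plus_i - minus_i)
```

For example, `multiplicities(8)` gives `(3, 2, 2, 1)`, whose entries sum to 8 as required.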

Unfortunately, no simple analytical formula for the eigenvectors is known. Moreover, the eigenvectors are not unique because any linear combination of eigenvectors for the same eigenvalue is also an eigenvector for that eigenvalue. Various researchers have proposed different choices of eigenvectors, selected to satisfy useful properties like orthogonality and to have "simple" forms (e.g., McClellan and Parks, 1972; Dickinson and Steiglitz, 1982; Grünbaum, 1982; Atakishiyev and Wolf, 1997; Candan et al., 2000; Hanna et al., 2004; Gurevich and Hadani, 2008).

The choice of eigenvectors of the DFT matrix has become important in recent years in order to define a discrete analogue of the fractional Fourier transform—the DFT matrix can be taken to fractional powers by exponentiating the eigenvalues (e.g., Rubio and Santhanam, 2005). For the continuous Fourier transform, the natural orthogonal eigenfunctions are the Hermite functions, so various discrete analogues of these have been employed as the eigenvectors of the DFT, such as the Kravchuk polynomials (Atakishiyev and Wolf, 1997). The "best" choice of eigenvectors to define a fractional discrete Fourier transform remains an open question, however.

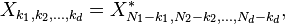

[edit] The real-input DFT

If  are real numbers, as they often are in practical applications, then the DFT obeys the symmetry:

are real numbers, as they often are in practical applications, then the DFT obeys the symmetry:

The star denotes complex conjugation. The subscripts are interpreted modulo N.

Therefore, the DFT output for real inputs is half redundant, and one obtains the complete information by only looking at roughly half of the outputs  . In this case, the "DC" element X0 is purely real, and for even N the "Nyquist" element XN / 2 is also real, so there are exactly N non-redundant real numbers in the first half + Nyquist element of the complex output X.

. In this case, the "DC" element X0 is purely real, and for even N the "Nyquist" element XN / 2 is also real, so there are exactly N non-redundant real numbers in the first half + Nyquist element of the complex output X.

Using Euler's formula, the interpolating trigonometric polynomial can then be interpreted as a sum of sine and cosine functions.
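The redundancy for real inputs can be illustrated by keeping only the outputs with indices 0 to ⌊N/2⌋ and rebuilding the rest from the conjugate symmetry. The helper names below are assumptions made for this sketch.

```python
import cmath

def dft(x):
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def half_spectrum(x):
    """Keep X_0 .. X_floor(N/2), which is all that real input requires."""
    return dft(x)[: len(x) // 2 + 1]

def full_from_half(half, N):
    """Rebuild X_0 .. X_{N-1} using X_k = conj(X_{N-k})."""
    return [half[k] if k <= N // 2 else half[N - k].conjugate() for k in range(N)]

real_even = [0.5, 1.0, -2.0, 3.0, 0.0, 1.5]   # N = 6
real_odd = [2.0, -1.0, 0.0, 4.0, 1.0]         # N = 5
X_even = dft(real_even)
X_odd = dft(real_odd)
```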

Generalized/shifted DFT

It is possible to shift the transform sampling in time and/or frequency domain by some real shifts a and b, respectively. This is sometimes known as a generalized DFT (or GDFT), also called the shifted DFT or offset DFT, and has analogous properties to the ordinary DFT:

$$X_k = \sum_{n=0}^{N-1} x_n e^{-\frac{2\pi i}{N} (k+b)(n+a)}, \qquad k = 0, \ldots, N-1.$$

Most often, shifts of 1/2 (half a sample) are used. While the ordinary DFT corresponds to a periodic signal in both time and frequency domains, a = 1/2 produces a signal that is anti-periodic in frequency domain ($X_{k+N} = -X_k$) and vice-versa for b = 1/2. Thus, the specific case of a = b = 1/2 is known as an odd-time odd-frequency discrete Fourier transform (or O² DFT). Such shifted transforms are most often used for symmetric data, to represent different boundary symmetries, and for real-symmetric data they correspond to different forms of the discrete cosine and sine transforms.

Another interesting choice is a = b = − (N − 1) / 2, which is called the centered DFT (or CDFT). The centered DFT has the useful property that, when N is a multiple of four, all four of its eigenvalues (see above) have equal multiplicities (Rubio and Santhanam, 2005).

The discrete Fourier transform can be viewed as a special case of the z-transform, evaluated on the unit circle in the complex plane; more general z-transforms correspond to complex shifts a and b above.
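A shifted transform is easy to sketch by evaluating the defining sum for an arbitrary integer k. The function name `gdft_coeff` is an illustrative assumption; with a = b = 0 it reduces to the ordinary DFT, and with a = 1/2 it exhibits the anti-periodicity in frequency described above.

```python
import cmath

def gdft_coeff(x, k, a=0.0, b=0.0):
    """X_k = sum_n x_n * exp(-2*pi*i*(k + b)*(n + a)/N) for any integer k."""
    N = len(x)
    return sum(x[n] * cmath.exp(-2j * cmath.pi * (k + b) * (n + a) / N)
               for n in range(N))

x = [1 + 0j, 2 - 1j, 0.5 + 0j, -3j, 4 + 0j]
N = len(x)

ordinary = [gdft_coeff(x, k) for k in range(N)]             # plain DFT
half_time = [gdft_coeff(x, k, a=0.5) for k in range(N)]     # a = 1/2
next_period = [gdft_coeff(x, k + N, a=0.5) for k in range(N)]
```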

[edit] Multidimensional DFT

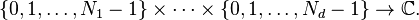

The ordinary DFT transforms a one-dimensional sequence or array) xn that is a function of exactly one discrete variable n. The multidimensional DFT of a multidimensional array  that is a function of d discrete variables

that is a function of d discrete variables  for

for  in

in  is defined by:

is defined by:

where  as above and the d output indices run from

as above and the d output indices run from  . This is more compactly expressed in vector notation, where we define

. This is more compactly expressed in vector notation, where we define  and

and  as d-dimensional vectors of indices from 0 to

as d-dimensional vectors of indices from 0 to  , which we define as

, which we define as  :

:

where the division  is defined as

is defined as  to be performed element-wise, and the sum denotes the set of nested summations above.

to be performed element-wise, and the sum denotes the set of nested summations above.

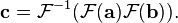

The inverse of the multi-dimensional DFT is, analogous to the one-dimensional case, given by:

$$x_{\mathbf{n}} = \frac{1}{\prod_{\ell=1}^{d} N_\ell} \sum_{\mathbf{k}=\mathbf{0}}^{\mathbf{N}-1} e^{2\pi i \, \mathbf{n} \cdot (\mathbf{k} / \mathbf{N})} X_{\mathbf{k}}.$$

As the one-dimensional DFT expresses the input $x_n$ as a superposition of sinusoids, the multidimensional DFT expresses the input as a superposition of plane waves, or sinusoids. The direction of oscillation in space is $\mathbf{k} / \mathbf{N}$. The amplitudes are $X_{\mathbf{k}}$. This decomposition is of great importance for everything from digital image processing (two-dimensional) to solving partial differential equations in three dimensions (three-dimensional). The solution is broken up into plane waves.

The multidimensional DFT can be computed by the composition of a sequence of one-dimensional DFTs along each dimension. In the two-dimensional case $x_{n_1, n_2}$ the $N_1$ independent DFTs of the rows (i.e., along $n_2$) are computed first to form a new array $y_{n_1, k_2}$. Then the $N_2$ independent DFTs of y along the columns (along $n_1$) are computed to form the final result $X_{k_1, k_2}$. Alternatively the columns can be computed first and then the rows. The order is immaterial because the nested summations above commute.

An algorithm to compute a one-dimensional DFT is thus sufficient to efficiently compute a multidimensional DFT. This approach is known as the row-column algorithm. There are also intrinsically multidimensional FFT algorithms.
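The row-column approach can be sketched for the two-dimensional case and compared against the nested double sum. The function names are illustrative, and the one-dimensional transform is a direct O(N²) summation standing in for an FFT.

```python
import cmath

def dft(x):
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def dft2_direct(x):
    """Evaluate the nested double sum of the 2-D definition term by term."""
    N1, N2 = len(x), len(x[0])
    return [[sum(x[n1][n2] * cmath.exp(-2j * cmath.pi * (k1 * n1 / N1 + k2 * n2 / N2))
                 for n1 in range(N1) for n2 in range(N2))
             for k2 in range(N2)]
            for k1 in range(N1)]

def dft2_row_column(x):
    """Transform every row (along n2), then every column (along n1)."""
    N1, N2 = len(x), len(x[0])
    y = [dft(row) for row in x]                                   # y[n1][k2]
    columns = [dft([y[n1][k2] for n1 in range(N1)]) for k2 in range(N2)]
    return [[columns[k2][k1] for k2 in range(N2)] for k1 in range(N1)]

image = [[1, 2, 0, -1],
         [0, 3, 1, 2],
         [4, -2, 1, 0]]
direct_result = dft2_direct(image)
row_column_result = dft2_row_column(image)
```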

The real-input multidimensional DFT

For input data $x_{n_1, n_2, \ldots, n_d}$ consisting of real numbers, the DFT outputs have a conjugate symmetry similar to the one-dimensional case above:

$$X_{k_1, k_2, \ldots, k_d} = X_{N_1 - k_1, N_2 - k_2, \ldots, N_d - k_d}^*,$$

where the star again denotes complex conjugation and the $\ell$-th subscript is again interpreted modulo $N_\ell$ (for $\ell = 1, 2, \ldots, d$).

Applications

The DFT has seen wide usage across a large number of fields; we only sketch a few examples below (see also the references at the end). All applications of the DFT depend crucially on the availability of a fast algorithm to compute discrete Fourier transforms and their inverses, a fast Fourier transform.

Spectral analysis

When the DFT is used for spectral analysis, the $\{x_n\}$ sequence usually represents a finite set of uniformly spaced time-samples of some signal $x(t)$, where t represents time. The conversion from continuous time to samples (discrete-time) changes the underlying Fourier transform of x(t) into a discrete-time Fourier transform (DTFT), which generally entails a type of distortion called aliasing. Choice of an appropriate sample-rate (see Nyquist frequency) is the key to minimizing that distortion. Similarly, the conversion from a very long (or infinite) sequence to a manageable size entails a type of distortion called leakage, which is manifested as a loss of detail (that is, resolution) in the DTFT. Choice of an appropriate sub-sequence length is the primary key to minimizing that effect. When the available data (and time to process it) is more than the amount needed to attain the desired frequency resolution, a standard technique is to perform multiple DFTs, for example to create a spectrogram. If the desired result is a power spectrum and noise or randomness is present in the data, averaging the magnitude components of the multiple DFTs is a useful procedure to reduce the variance of the spectrum (also called a periodogram in this context); two examples of such techniques are the Welch method and the Bartlett method.

A final source of distortion (or perhaps illusion) is the DFT itself, because it is just a discrete sampling of the DTFT, which is a function of a continuous frequency domain. That can be mitigated by increasing the resolution of the DFT. That procedure is illustrated in the discrete-time Fourier transform article.

- The procedure is sometimes referred to as zero-padding, which is a particular implementation used in conjunction with the fast Fourier transform (FFT) algorithm. The inefficiency of performing multiplications and additions with zero-valued "samples" is more than offset by the inherent efficiency of the FFT.

- As already noted, leakage imposes a limit on the inherent resolution of the DTFT. So there is a practical limit to the benefit that can be obtained from a fine-grained DFT.

Data compression

The field of digital signal processing relies heavily on operations in the frequency domain (i.e. on the Fourier transform). For example, several lossy image and sound compression methods employ the discrete Fourier transform: the signal is cut into short segments, each is transformed, and then the Fourier coefficients of high frequencies, which are assumed to be unnoticeable, are discarded. The decompressor computes the inverse transform based on this reduced number of Fourier coefficients. (Compression applications often use a specialized form of the DFT, the discrete cosine transform or sometimes the modified discrete cosine transform.)

Partial differential equations

Discrete Fourier transforms are often used to solve partial differential equations, where again the DFT is used as an approximation for the Fourier series (which is recovered in the limit of infinite N). The advantage of this approach is that it expands the signal in complex exponentials $e^{inx}$, which are eigenfunctions of differentiation: $\frac{d}{dx} e^{inx} = i n \, e^{inx}$. Thus, in the Fourier representation, differentiation is simple: we just multiply by $i n$. A linear differential equation with constant coefficients is transformed into an easily solvable algebraic equation. One then uses the inverse DFT to transform the result back into the ordinary spatial representation. Such an approach is called a spectral method.
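The "multiply by i n" rule can be sketched for a periodic function sampled on N equally spaced points of [0, 2π). The sketch below makes two assumptions that the text does not spell out: DFT indices above N/2 are mapped to negative frequencies, and for even N the Nyquist mode is set to zero when differentiating. The function name `spectral_derivative` is illustrative.

```python
import cmath
import math

def dft(x):
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def idft(X):
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

def spectral_derivative(samples):
    """Differentiate periodic samples on [0, 2*pi) by multiplying X_k by i*frequency."""
    N = len(samples)
    X = dft(samples)
    dX = []
    for k in range(N):
        freq = k if k < N / 2 else k - N      # signed frequency of index k
        if N % 2 == 0 and k == N // 2:
            freq = 0                          # assumption: drop the Nyquist mode
        dX.append(1j * freq * X[k])
    return [v.real for v in idft(dX)]

N = 16
grid = [2 * math.pi * j / N for j in range(N)]
derivative = spectral_derivative([math.sin(t) for t in grid])   # approximates cos
```

For a band-limited input such as sin(x) the result agrees with cos(x) on the grid up to rounding error; for general smooth periodic functions the error decreases rapidly as N grows.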

[edit] Polynomial multiplication

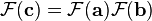

Suppose we wish to compute the polynomial product c(x) = a(x) · b(x). The ordinary product expression for the coefficients of c involves a linear (acyclic) convolution, where indices do not "wrap around." This can be rewritten as a cyclic convolution by taking the coefficient vectors for a(x) and b(x) with constant term first, then appending zeros so that the resultant coefficient vectors a and b have dimension d > deg(a(x)) + deg(b(x)). Then,

Where c is the vector of coefficients for c(x), and the convolution operator  is defined so

is defined so

But convolution becomes multiplication under the DFT:

Here the vector product is taken elementwise. Thus the coefficients of the product polynomial c(x) are just the terms 0, ..., deg(a(x)) + deg(b(x)) of the coefficient vector

With a Fast Fourier transform, the resulting algorithm takes O (N log N) arithmetic operations. Due to its simplicity and speed, the Cooley-Tukey FFT algorithm, which is limited to composite sizes, is often chosen for the transform operation. In this case, d should be chosen as the smallest integer greater than the sum of the input polynomial degrees that is factorizable into small prime factors (e.g. 2, 3, and 5, depending upon the FFT implementation).
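The procedure can be sketched as follows for polynomials with integer coefficients, stored with the constant term first. The function name `poly_multiply` is illustrative; the sketch pads to d = deg(a) + deg(b) + 1, uses a direct O(N²) transform in place of an FFT, and rounds the result, which is only appropriate when the exact coefficients are known to be integers.

```python
import cmath

def dft(x):
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def idft(X):
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

def poly_multiply(a, b):
    """Coefficients of a(x) * b(x) via zero-padding, DFT, elementwise product, IDFT."""
    d = len(a) + len(b) - 1                  # d > deg(a) + deg(b), so no wrap-around
    a_padded = list(a) + [0] * (d - len(a))
    b_padded = list(b) + [0] * (d - len(b))
    A, B = dft(a_padded), dft(b_padded)
    c = idft([p * q for p, q in zip(A, B)])
    return [round(v.real) for v in c]        # assumption: integer coefficients

product = poly_multiply([1, 2], [3, 4])      # (1 + 2x)(3 + 4x) = 3 + 10x + 8x^2
```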

Multiplication of large integers

The fastest known algorithms for the multiplication of very large integers use the polynomial multiplication method outlined above. Integers can be treated as the value of a polynomial evaluated specifically at the number base, with the coefficients of the polynomial corresponding to the digits in that base. After polynomial multiplication, a relatively low-complexity carry-propagation step completes the multiplication.
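The idea can be sketched for non-negative integers: split each number into base-10 digits, multiply the digit polynomials through the DFT, then propagate carries. The function names and the choice of base 10 are assumptions made for illustration, and the direct O(N²) transform used here shows the structure only; the speed advantage requires an FFT and careful control of rounding error.

```python
import cmath

def dft(x):
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]

def idft(X):
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]

def to_digits(n, base):
    """Digits of a non-negative integer, least significant first."""
    digits = []
    while n:
        n, r = divmod(n, base)
        digits.append(r)
    return digits or [0]

def multiply_integers(u, v, base=10):
    a, b = to_digits(u, base), to_digits(v, base)
    d = len(a) + len(b) - 1
    A = dft(a + [0] * (d - len(a)))
    B = dft(b + [0] * (d - len(b)))
    coeffs = [round(c.real) for c in idft([p * q for p, q in zip(A, B)])]
    # Carry propagation: reduce each coefficient to a single digit.
    out, carry = [], 0
    for c in coeffs:
        carry, digit = divmod(c + carry, base)
        out.append(digit)
    while carry:
        carry, digit = divmod(carry, base)
        out.append(digit)
    return sum(digit * base ** i for i, digit in enumerate(out))

result = multiply_integers(12345, 6789)
```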

[edit] Some discrete Fourier transform pairs

|

|

Note |

|---|---|---|

|

|

Shift theorem |

|

|

|

|

|

Real DFT |

|

|

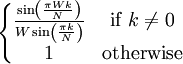

from the geometric progression formula |

|

|

from the binomial theorem |

|

|

xn is a rectangular window function of W points centered on x0, where W is an odd integer, and Xk is a sinc-like function |

Derivation as Fourier series

The DFT can be derived as a truncation of the Fourier series of a periodic sequence of impulses.

[edit] Generalizations

Many of the properties of the DFT only depend on the fact that $e^{-2\pi i/N}$ is a primitive root of unity, sometimes denoted $\omega_N$ or $W_N$ (so that $\omega_N^N = 1$). Such properties include the completeness, orthogonality, Plancherel/Parseval, periodicity, shift, convolution, and unitarity properties above, as well as many FFT algorithms. For this reason, the discrete Fourier transform can be defined by using roots of unity in fields other than the complex numbers; for more information, see discrete Fourier transform (general).
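As a small illustration of a DFT over a field other than the complex numbers, the sketch below works in the integers modulo the prime 17, where 2 is a primitive 8th root of unity (2⁸ ≡ 1 and 2⁴ ≡ −1 mod 17). The function names and the particular choice of modulus, root, and length are assumptions made for this example; the transform is computed naively rather than with an FFT.

```python
def is_primitive_root_of_unity(omega, n, p):
    """True if omega has multiplicative order exactly n modulo the prime p."""
    return pow(omega, n, p) == 1 and all(pow(omega, k, p) != 1 for k in range(1, n))

def ntt(x, omega, p):
    """DFT over the integers mod p, with omega in the role of exp(-2*pi*i/N)."""
    n = len(x)
    return [sum(x[j] * pow(omega, j * k, p) for j in range(n)) % p for k in range(n)]

def intt(X, omega, p):
    """Inverse transform: use omega^-1 and multiply by N^-1 (Fermat inverses mod p)."""
    n = len(X)
    omega_inv = pow(omega, p - 2, p)
    n_inv = pow(n, p - 2, p)
    return [(n_inv * v) % p for v in ntt(X, omega_inv, p)]

def cyclic_convolution(a, b, p):
    """Direct cyclic convolution mod p, for comparison with the transform method."""
    n = len(a)
    return [sum(a[j] * b[(k - j) % n] for j in range(n)) % p for k in range(n)]

p, omega = 17, 2                      # illustrative modulus and root
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [3, 1, 4, 1, 5, 9, 2, 6]

assert is_primitive_root_of_unity(omega, len(x), p)
assert intt(ntt(x, omega, p), omega, p) == x          # invertibility

# Convolution property: pointwise product of transforms <-> cyclic convolution
pointwise = [(u * v) % p for u, v in zip(ntt(x, omega, p), ntt(y, omega, p))]
assert intt(pointwise, omega, p) == cyclic_convolution(x, y, p)
```

Because all arithmetic is exact modulo p, the inversion and convolution properties hold with no rounding error, which is one reason such transforms are attractive for exact integer computations.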

The standard DFT acts on a sequence x0, x1, …, xN−1 of complex numbers, which can be viewed as a function {0, 1, …, N − 1} → C. The multidimensional DFT acts on multidimensional sequences, which can be viewed as functions $\{0, 1, \ldots, N_1 - 1\} \times \cdots \times \{0, 1, \ldots, N_d - 1\} \to \mathbb{C}$.

This suggests the generalization to Fourier transforms on arbitrary finite groups, which act on functions G → C where G is a finite group. In this framework, the standard DFT is seen as the Fourier transform on a cyclic group, while the multidimensional DFT is a Fourier transform on a direct sum of cyclic groups.

See also

Notes

- T. G. Stockham, Jr., "High-speed convolution and correlation," in 1966 Proc. AFIPS Spring Joint Computing Conf. Reprinted in Digital Signal Processing, L. R. Rabiner and C. M. Rader, editors, New York: IEEE Press, 1972.

References

- Brigham, E. Oran (1988). The fast Fourier transform and its applications. Englewood Cliffs, N.J.: Prentice Hall. ISBN 0-13-307505-2.

- Oppenheim, Alan V.; Schafer, R. W.; and Buck, J. R. (1999). Discrete-time signal processing. Upper Saddle River, N.J.: Prentice Hall. ISBN 0-13-754920-2.

- Smith, Steven W. (1999). "Chapter 8: The Discrete Fourier Transform". The Scientist and Engineer's Guide to Digital Signal Processing (2nd ed.). San Diego, Calif.: California Technical Publishing. ISBN 0-9660176-3-3. http://www.dspguide.com/ch8/1.htm.

- Cormen, Thomas H.; Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein (2001). "Chapter 30: Polynomials and the FFT". Introduction to Algorithms (2nd ed.). MIT Press and McGraw-Hill. pp. 822–848. ISBN 0-262-03293-7. Esp. section 30.2: The DFT and FFT, pp. 830–838.

- P. Duhamel, B. Piron, and J. M. Etcheto (1988). "On computing the inverse DFT". IEEE Trans. Acoust., Speech and Sig. Processing 36 (2): 285–286.

- J. H. McClellan and T. W. Parks (1972). "Eigenvalues and eigenvectors of the discrete Fourier transformation". IEEE Trans. Audio Electroacoust. 20 (1): 66–74.

- Bradley W. Dickinson and Kenneth Steiglitz (1982). "Eigenvectors and functions of the discrete Fourier transform". IEEE Trans. Acoust., Speech and Sig. Processing 30 (1): 25–31. (Note that this paper has an apparent typo in its table of the eigenvalue multiplicities: the +i/−i columns are interchanged. The correct table can be found in McClellan and Parks, 1972, and is easily confirmed numerically.)

- F. A. Grünbaum (1982). "The eigenvectors of the discrete Fourier transform". J. Math. Anal. Appl. 88 (2): 355–363.

- Natig M. Atakishiyev and Kurt Bernardo Wolf (1997). "Fractional Fourier-Kravchuk transform". J. Opt. Soc. Am. A 14 (7): 1467–1477.

- C. Candan, M. A. Kutay and H. M. Ozaktas (2000). "The discrete fractional Fourier transform". IEEE Trans. on Signal Processing 48 (5): 1329–1337.

- Magdy Tawfik Hanna, Nabila Philip Attalla Seif, and Waleed Abd El Maguid Ahmed (2004). "Hermite-Gaussian-like eigenvectors of the discrete Fourier transform matrix based on the singular-value decomposition of its orthogonal projection matrices". IEEE Trans. Circ. Syst. I 51 (11): 2245–2254.

- Shamgar Gurevich and Ronny Hadani (2008). "On the diagonalization of the discrete Fourier transform". Applied and Computational Harmonic Analysis. Accepted for publication. arXiv:0808.3281.

- Shamgar Gurevich, Ronny Hadani and Nir Sochen (2008). "The finite harmonic oscillator and its applications to sequences, communication and radar". IEEE Transactions on Information Theory 54 (9), September 2008. arXiv:0808.1495.

- Juan G. Vargas-Rubio and Balu Santhanam (2005). "On the multiangle centered discrete fractional Fourier transform". IEEE Sig. Proc. Lett. 12 (4): 273–276.

- J. Cooley, P. Lewis, and P. Welch (1969). "The finite Fourier transform". IEEE Trans. Audio Electroacoustics 17 (2): 77–85.

External links

- Interactive flash tutorial on the DFT

- Mathematics of the Discrete Fourier Transform by Julius O. Smith III

- Fast implementation of the DFT - coded in C and under General Public License (GPL)

- Example of how DFT spectral analysis is used in engineering studies of the Otto Struve 2.1m telescope
