Anti-aliasing

From Wikipedia, the free encyclopedia

In digital signal processing, anti-aliasing is the technique of minimizing the distortion artifacts known as aliasing when representing a high-resolution signal at a lower resolution. Anti-aliasing is used in digital photography, computer graphics, digital audio, and many other applications.

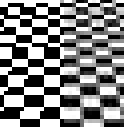

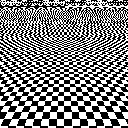

Anti-aliasing means removing signal components whose frequency is higher than the recording (or sampling) device can properly resolve. This removal is done before (re)sampling at a lower resolution. When sampling is performed without removing this part of the signal, it causes undesirable artifacts such as the black-and-white noise near the top of Figure 1-a below.

In signal acquisition and audio, anti-aliasing is often done using an analog anti-aliasing filter to remove the out-of-band component of the input signal prior to sampling with an analog-to-digital converter. In digital photography, optical anti-aliasing filters are made of birefringent materials, and smooth the signal in the spatial optical domain. The anti-aliasing filter essentially blurs the image slightly in order to reduce resolution to below the limit of the digital sensor (the larger the pixel pitch, the lower the achievable resolution at the sensor level).

See the articles on signal processing and aliasing for more information about the theoretical justifications for anti-aliasing. The remainder of this article is dedicated to anti-aliasing methods in computer graphics.

Examples

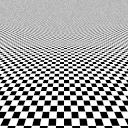

Figure 1-a illustrates the visual distortion that occurs when anti-aliasing is not used. Near the top of the image, where the checkerboard is very distant, the pattern is impossible to recognize and is not aesthetically appealing. By contrast, Figure 1-b is anti-aliased. The checkerboard near the top blends into gray, which is usually the desired effect when the resolution is insufficient to show the detail. Even near the bottom of the image, the edges appear much smoother in the anti-aliased image. Figure 1-c shows another anti-aliasing algorithm, based on the sinc filter, which is considered better than the algorithm used in 1-b. Figure 2 shows magnified portions of Figure 1 for comparison. The left half of the image is taken from Figure 1-a, and the right half is taken from Figure 1-c. The gray pixels make 1-c much smoother than 1-a, though they are not very attractive at the scale used in Figure 2.

Figure 3 shows how anti-aliasing smooths an outline. Text is affected in the same way.

Signal processing approach to anti-aliasing

In this approach, the ideal image is regarded as a signal, and the image displayed on the screen is taken as samples, at each (x,y) pixel position, of a filtered version of that signal. Ideally, we would understand how the human brain processes the original signal and provide an on-screen image that yields the most similar response.

The most widely accepted analytic tool for such problems is the Fourier transform. The Fourier transform decomposes our signal into basic waves of different frequencies, and gives us the amplitude of each wave in our signal. The waves are of the form:

$$\cos(2 j \pi x)\,\cos(2 k \pi y)$$

where j and k are arbitrary non-negative integers. (In fact, there are also waves involving the sine, but for the purpose of this discussion, the cosine will suffice; see Fourier transform for technical details.)

The numbers j and k together are the frequency of the wave: j is the frequency in the x direction, and k is the frequency in the y direction.

As has been proved in the Nyquist–Shannon sampling theorem, to uniquely specify a signal of not more than n frequencies, you need at least 2n sample points (assuming the inclusion of the sines that we omitted above).
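A small numerical illustration of what goes wrong when a wave lies above the limit set by the sampling rate. This is only a sketch: the sample rate of 8 and the frequencies 3 and 5 are arbitrary values chosen here, and `sample_cosine` is a helper name invented for the example.

```python
import math

def sample_cosine(frequency, sample_rate, count):
    """Sample cos(2*pi*frequency*t) at `count` evenly spaced instants."""
    return [math.cos(2 * math.pi * frequency * n / sample_rate)
            for n in range(count)]

sample_rate = 8            # samples per unit length (assumed for illustration)
nyquist = sample_rate / 2  # highest frequency this rate can represent

low = sample_cosine(3, sample_rate, 16)   # 3 is below the Nyquist frequency
high = sample_cosine(5, sample_rate, 16)  # 5 is above the Nyquist frequency

# The two sample sequences coincide (up to rounding), so after sampling the
# frequency-5 wave cannot be told apart from the frequency-3 wave.
max_difference = max(abs(a - b) for a, b in zip(low, high))
print(max_difference < 1e-9)
```

Because the samples are identical, no processing applied after sampling can separate the two waves, which is why the high frequency has to be removed beforehand.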

The eye is predominantly sensitive to lower frequencies. And so, in signal processing, we choose to eliminate all high frequencies from the signal, keeping only the frequencies that are low enough to be sampled correctly by our sample rate.

The goal of sharply cutting off frequencies above a certain limit, known as the Nyquist frequency, cannot be realized exactly, even with Fourier techniques, so it is always approximated, and there are many different choices of detailed algorithm. Our knowledge of human visual perception is not sufficient to say which approach will look best, but in general better approximations to the Fourier ideal tend to look better.

The basic waves need not be cosine waves. See, for instance, wavelets. If one uses basic waves which are not cosine waves, one obtains a slightly different image. Some basic waves yield anti-aliasing algorithms that are not so good. For instance, the Haar wavelet gives the uniform averaging algorithm. However, some wavelets are good, and it is possible that some wavelets are better at approximating the functioning of the human brain than the cosine basis.
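The link between the Haar wavelet and uniform averaging can be checked numerically. The sketch below is an illustration only; the names `haar_step` and `box_downsample` are invented for this example, and it assumes the orthonormal Haar normalization (division by the square root of 2).

```python
import math

def haar_step(signal):
    """One level of the orthonormal Haar transform on an even-length list.
    Returns (approximation, detail) coefficient lists."""
    pairs = list(zip(signal[0::2], signal[1::2]))
    approx = [(a + b) / math.sqrt(2) for a, b in pairs]
    detail = [(a - b) / math.sqrt(2) for a, b in pairs]
    return approx, detail

def box_downsample(signal):
    """Uniform averaging: replace each pair of samples by its mean."""
    return [(a + b) / 2 for a, b in zip(signal[0::2], signal[1::2])]

signal = [1.0, 3.0, 2.0, 6.0]
approx, detail = haar_step(signal)

# Dropping the detail coefficients and rescaling the approximation
# reproduces the pairwise averages.
rescaled = [a / math.sqrt(2) for a in approx]
averages = box_downsample(signal)
```

Here `rescaled` and `averages` agree up to rounding, so keeping only the Haar approximation coefficients amounts to a box filter.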

Two dimensional considerations

The above assumes that the rectangular mesh sampling is the dominant part of the problem. It should seem odd that the filter usually considered optimal is not rotationally symmetrical, as shown in this first figure. Since eyes can rotate in their sockets, this must have to do with the fact that we are dealing with data sampled on a square lattice and not with a continuous image. This must be the justification for doing signal processing, along each axis, as it is traditionally done on one dimensional data. Lanczos resampling is based on convolution of the data with a discrete representation of the sinc function.
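As a rough sketch of the per-axis approach, the following shows a one-dimensional Lanczos interpolator; a two-dimensional image would be processed by applying it along each axis in turn. The function names, the window size `a=3`, the edge clamping, and the weight renormalization are choices made for this example rather than details taken from the text.

```python
import math

def sinc(x):
    """Normalized sinc: sin(pi*x) / (pi*x), with sinc(0) = 1."""
    if x == 0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)

def lanczos_kernel(x, a=3):
    """Sinc windowed by a wider sinc; zero outside |x| < a."""
    if abs(x) >= a:
        return 0.0
    return sinc(x) * sinc(x / a)

def lanczos_resample_1d(samples, position, a=3):
    """Interpolate `samples` at a fractional `position` (edges are clamped)."""
    base = math.floor(position)
    total = 0.0
    weight_sum = 0.0
    for i in range(base - a + 1, base + a + 1):
        w = lanczos_kernel(position - i, a)
        clamped = min(max(i, 0), len(samples) - 1)
        total += w * samples[clamped]
        weight_sum += w
    # Renormalize so that a constant signal stays constant.
    return total / weight_sum

data = [0.0, 1.0, 4.0, 9.0, 16.0, 25.0, 36.0, 49.0]
between = lanczos_resample_1d(data, 3.5)  # value between samples 3 and 4
```

At integer positions the kernel is 1 at the sample itself and (up to rounding) 0 at its neighbours, so the original samples are reproduced.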

If the resolution is not limited by the rectangular sampling rate of either the source or the target image, then one should ideally use rotationally symmetrical filter or interpolation functions, as though the data were a two dimensional function of continuous x and y. The sinc function of the radius, in the second figure, has too long a tail to make a good filter (it is not even square-integrable). A more appropriate analog to the one-dimensional sinc is the two-dimensional Airy disc amplitude, the 2D Fourier transform of a circular region in 2D frequency space, as opposed to a square region.

One might consider a Gaussian plus enough of its second derivative to flatten the top (in the frequency domain) or sharpen it up (in the spatial domain). This function is shown also. Functions based on the Gaussian function are natural choices, because convolution with a Gaussian gives another Gaussian, whether applied to x and y or to the radius. Another of its properties is that it (similarly to wavelets) is half way between being localized in the configuration (x and y) and in the spectral (j and k) representation. As an interpolation function, a Gaussian alone seems too spread out to preserve the maximum possible detail, which is why the second derivative is added.
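One way to make "enough of its second derivative to flatten the top" concrete is sketched below. It assumes a unit-mass two-dimensional Gaussian of width σ, the angular-frequency Fourier convention, and a mixing coefficient c; these symbols are introduced here for illustration and do not come from the text.

```latex
\begin{aligned}
g(r) &= \frac{1}{2\pi\sigma^{2}}\, e^{-r^{2}/(2\sigma^{2})},
  &\hat g(k) &= e^{-\sigma^{2}k^{2}/2}, \\
h &= g - c\,\nabla^{2} g,
  &\hat h(k) &= \left(1 + c\,k^{2}\right) e^{-\sigma^{2}k^{2}/2}
   = 1 + \left(c - \tfrac{\sigma^{2}}{2}\right) k^{2} + O(k^{4}), \\
c &= \tfrac{\sigma^{2}}{2}
  &\Longrightarrow\quad h(r) &= \left(2 - \frac{r^{2}}{2\sigma^{2}}\right) g(r).
\end{aligned}
```

With c = σ²/2 the k² term vanishes, so the frequency response stays flat near k = 0. In the spatial domain the same choice doubles the central peak and adds a shallow negative ring beyond r = 2σ, which is the sharpening the paragraph describes.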

As an example, when printing a photographic negative, with plentiful processing capability, on a printer with a hexagonal pattern, there is no reason to use sinc function interpolation. This would treat diagonal lines differently from horizontal and vertical lines, which is like a weak form of aliasing.

Practical real-time anti-aliasing approximations

There are only a handful of primitives used at the lowest level in a real-time rendering engine (either software or hardware accelerated). These include "points", "lines" and "triangles". If such a primitive is drawn in white against a black background, it can be designed to have fuzzy edges, achieving some degree of anti-aliasing. However, this approach has difficulty dealing with adjacent primitives (such as triangles that share an edge).

To approximate the uniform averaging algorithm, one may use an extra buffer for sub-pixel data. The initial, and least memory-hungry, approach used 16 extra bits per pixel, arranged as a 4×4 grid. If one renders the primitives in a careful order, for instance front-to-back, it is possible to create a reasonable image.

Since this requires that the primitives be in some order, and hence interacts poorly with an application programming interface such as OpenGL, the latest attempts simply have two or more full sub-pixels per pixel, including full color information for each sub-pixel. Some information may be shared between the sub-pixels (such as the Z-buffer).
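The 16-bit, 4×4 coverage-mask idea can be sketched as follows. This is a simplified illustration under stated assumptions: primitives are given as hypothetical `inside(x, y)` predicates, each carries a single grey intensity, and the fragments for a pixel arrive nearest-first. None of the function names come from a real API.

```python
def coverage_mask(inside, px, py):
    """Return a 16-bit mask: bit (4*row + col) is set when the centre of
    that sub-pixel of pixel (px, py) lies inside the primitive."""
    mask = 0
    for row in range(4):
        for col in range(4):
            x = px + (col + 0.5) / 4
            y = py + (row + 0.5) / 4
            if inside(x, y):
                mask |= 1 << (4 * row + col)
    return mask

def composite_front_to_back(fragments):
    """Blend (mask, intensity) fragments given nearest-first.
    Each sub-pixel is claimed by the first fragment that covers it."""
    taken = 0
    intensity = 0.0
    for mask, value in fragments:
        visible = mask & ~taken  # sub-pixels not already covered
        intensity += value * bin(visible).count("1") / 16
        taken |= mask
    return intensity

# Hypothetical primitives: a white half-plane x < 0.5 in front of a
# grey half-plane x < 0.75, both evaluated for pixel (0, 0).
front = coverage_mask(lambda x, y: x < 0.5, 0, 0)
back = coverage_mask(lambda x, y: x < 0.75, 0, 0)
pixel = composite_front_to_back([(front, 1.0), (back, 0.5)])
```

The front primitive covers 8 of the 16 sub-pixels and the back one contributes only the 4 that are still free, so `pixel` is 8/16 · 1.0 + 4/16 · 0.5 = 0.625. The dependence on the nearest-first order is visible in `composite_front_to_back`, which is the ordering requirement mentioned above.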

Mipmapping

There is also an approach specialized for texture mapping called mipmapping, which works by creating lower resolution, prefiltered versions of the texture map. When rendering the image, the appropriate resolution mip-map is chosen and hence the texture pixels (texels) are already filtered when they arrive on the screen. Mipmapping is generally combined with various forms of texture filtering in order to improve the final result.
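A minimal sketch of building and selecting from a mip chain, assuming a square greyscale texture whose side is a power of two, simple 2×2 averaging as the prefilter, and a nearest-level choice based on how many texels one screen pixel spans. The function names and the selection rule are illustrative assumptions; real hardware uses more elaborate level-of-detail formulas.

```python
import math

def downsample_2x(texture):
    """Average each 2x2 block of texels (texture is a square list of rows)."""
    half = len(texture) // 2
    return [
        [
            (texture[2 * y][2 * x] + texture[2 * y][2 * x + 1]
             + texture[2 * y + 1][2 * x] + texture[2 * y + 1][2 * x + 1]) / 4
            for x in range(half)
        ]
        for y in range(half)
    ]

def build_mip_chain(texture):
    """Return [level 0 (full size), level 1, ..., the 1x1 level]."""
    chain = [texture]
    while len(chain[-1]) > 1:
        chain.append(downsample_2x(chain[-1]))
    return chain

def select_level(chain, texels_per_pixel):
    """Pick the level whose texel size best matches the screen footprint.
    `texels_per_pixel` is how many level-0 texels one screen pixel spans."""
    level = round(math.log2(max(texels_per_pixel, 1.0)))
    return min(level, len(chain) - 1)

# An 8x8 checkerboard of 0s and 1s.
checker = [[(x + y) % 2 for x in range(8)] for y in range(8)]
chain = build_mip_chain(checker)
distant = chain[select_level(chain, 4)]  # one pixel spans 4 texels
```

For this checkerboard every level above the first is uniformly 0.5, which corresponds to a distant checkerboard blending into gray instead of producing noise.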

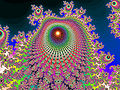

An example of an image with extreme pseudo-random aliasing

Because fractals have unlimited detail and no noise other than arithmetic roundoff error, they illustrate aliasing more clearly than do photographs or other measured data. The dwells, which are converted to colors at the exact centers of the pixels, go to infinity at the border of the set, so colors from centers near borders are unpredictable, due to aliasing. This example has an edge in about half of its pixels, so it shows much aliasing. The first image is uploaded at its original sampling rate. Since most modern software anti-aliases, one may have to download the full size version to see all of the aliasing. The second image is calculated at five times the sampling rate and down-sampled with anti-aliasing. Assuming that we would really like something like the average color over each pixel, this one is getting closer. It is clearly more orderly than the first.

It happens that, in this case, there is additional information that can be used. By re-calculating with the distance estimator, points were identified that are very close to the edge of the set, so that unusually fine detail is aliased in from the rapidly changing dwell values near the edge of the set. The colors derived from these calculated points have been identified as unusually unrepresentative of their pixels. Those points were replaced, in the third image, by interpolating the points around them. This reduces the noisiness of the image but has the side effect of brightening the colors. So this image is not exactly the same as the one that would be obtained with an even larger set of calculated points.

To show what was discarded, the rejected points, bled into a grey background, are shown in the fourth image.

Finally, "Budding Turbines" is so regular that systematic (Moiré) aliasing can clearly be seen near the main "turbine axis" when it is downsized by taking the nearest pixel. The aliasing in the first image appears random because it comes from all levels of detail below the pixel size. When the lower-level aliasing is suppressed to make the third image, and that image is then down-sampled once more without anti-aliasing to make the fifth image, the order on the scale of the third image appears as systematic aliasing in the fifth image.

The best anti-aliasing and down-sampling method here depends on one's point of view. When fitting the most data into a limited array of pixels, as in the fifth image, sinc function anti-aliasing would seem appropriate. In obtaining the second and third images, the main objective is to filter out aliasing "noise", so a rotationally symmetrical function may be more appropriate.

Full-scene anti-aliasing

Modern graphics cards usually support some method of full-scene anti-aliasing (FSAA) to help avoid aliasing (or "jaggies") on full-screen images. The resulting image may seem softer, and should also appear more realistic. One tried and true method of avoiding or removing aliasing artifacts on full-screen images is supersampling.

However, while useful for photo-like images, a simple anti-aliasing approach (such as supersampling and then averaging) may actually worsen the appearance of some types of line art or diagrams (making the image appear fuzzy), especially where most lines are horizontal or vertical. In these cases, a prior grid-fitting step may be useful (see hinting).

In general, supersampling is a technique of collecting data points at a greater resolution (usually by a power of two) than the final data resolution. These data points are then combined (down-sampled) to the desired resolution, often just by a simple average. The combined data points have fewer visible aliasing artifacts (or moiré patterns).

Full-scene anti-aliasing by supersampling usually means that each full frame is rendered at double (2x) or quadruple (4x) the display resolution along each axis, and then down-sampled to match the display resolution. So a 4x FSAA would render 16 supersampled pixels for each single pixel of each frame.

More often than not, FSAA is implemented in hardware in such a way that a graphical application is unaware the images are being supersampled and then down-sampled before being displayed.
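Supersampling followed by a simple average can be sketched in a few lines. The renderer below is a toy: `render`, `disc`, the 8×8 image size, and the scene itself are invented for illustration, and `factor` is the supersampling rate along each axis.

```python
def render(width, height, shade, factor=1):
    """Evaluate `shade(x, y)` at factor*factor sub-samples per pixel and
    average them (factor=1 means one sample at the pixel centre)."""
    image = []
    for py in range(height):
        row = []
        for px in range(width):
            total = 0.0
            for sy in range(factor):
                for sx in range(factor):
                    x = px + (sx + 0.5) / factor
                    y = py + (sy + 0.5) / factor
                    total += shade(x, y)
            row.append(total / (factor * factor))
        image.append(row)
    return image

def disc(x, y):
    """Hypothetical scene: a white disc of radius 3 centred at (4, 4)."""
    return 1.0 if (x - 4) ** 2 + (y - 4) ** 2 <= 9 else 0.0

aliased = render(8, 8, disc)              # every pixel is exactly 0.0 or 1.0
smoothed = render(8, 8, disc, factor=4)   # 16 samples per pixel

for row in smoothed:
    print(" ".join(f"{value:.2f}" for value in row))
```

In `aliased` the disc edge is a hard staircase, while in `smoothed` the pixels that straddle the edge take intermediate grey values in proportion to how many of their 16 samples fall inside the disc.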

Object-based anti-aliasing

In object-based anti-aliasing, a graphics rendering system creates an image from objects constructed of polygonal primitives, and aliasing effects are reduced by applying an anti-aliasing scheme only to the areas of the image that represent silhouette edges of the objects. The silhouette edges are anti-aliased by creating anti-aliasing primitives that vary in opacity. These primitives are joined to the silhouette edges and create a region in the image where the objects appear to blend into the background. The method has some important advantages over the classical methods based on the accumulation buffer: it generates full-scene anti-aliasing in only two passes and does not require the additional memory that the accumulation buffer needs. Object-based anti-aliasing was first developed at Silicon Graphics for their Indy workstation.

History

Important early works in the history of anti-aliasing include:

- Freeman, H. "Computer processing of line drawing images", ACM Computing Surveys, vol. 6(1), March 1974, pp. 57–97.

- Crow, Franklin C. "The aliasing problem in computer-generated shaded images", Communications of the ACM, vol. 20(11), November 1977, pp. 799–805.

- Catmull, Edwin. "A hidden-surface algorithm with anti-aliasing", Proceedings of the 5th annual conference on Computer graphics and interactive techniques, pp. 6–11, August 23–25, 1978.

See also

- Supersampling, a method of anti-aliasing

- Statistical sampling

- Temporal anti-aliasing

- Anisotropic filtering, another method for improving image quality by enhancing textures

- Measure theory

- Font rasterization

- Color theory for certain physical details pertinent to color images

- Reconstruction filter

- Quincunx (pattern used for anti-aliasing)

- Subpixel rendering, an application of anti-aliasing using the properties of a color LCD screen

- Xiaolin Wu's line algorithm, a fast real-time anti-aliasing method

- Multisample anti-aliasing

- Jaggies, the informal name for aliasing artifacts in raster images

- Saffron Type System, an anti-aliased text-rendering engine

External links

- Antialiasing and Transparency Tutorial: Explains interaction between antialiasing and transparency, especially when dealing with web graphics

- Interpolation and Gamma Correction: In most real-world systems, gamma correction is required to linearize the response curve of the sensor and display systems. If this is not taken into account, the resulting non-linear distortion will defeat the purpose of anti-aliasing calculations, which assume a linear system response.

- (French) Le rôle du filtre anti-aliasing dans les APN (the function of anti-aliasing filter in dSLR)